In the fast-evolving world of AI and deep learning, knowledge distillation (KD) has emerged as a powerful technique to shrink massive neural networks into compact, efficient models—ideal for deployment on smartphones, drones, and edge devices. But despite its promise, traditional KD methods suffer from critical flaws that silently sabotage performance.

Now, a groundbreaking new framework—Sample-level Adaptive Knowledge Distillation (SAKD)—is turning the field on its head. Developed by Ping Li, Chenhao Ping, Wenxiao Wang, and Mingli Song, SAKD doesn’t just improve distillation—it redefines it.

In this article, we’ll reveal 7 shocking truths about knowledge distillation, expose the hidden weaknesses of conventional methods, and show how SAKD delivers better accuracy, faster training, and lower computational costs—all at once.

Truth #1: Most Knowledge Distillation Methods Are Wasting Time (The Bad)

You’ve probably heard the hype: “Use a large teacher model to train a small student model—boom, instant efficiency!” Sounds perfect, right?

But here’s the ugly truth: most KD methods treat all training samples equally, regardless of how hard or easy they are to learn.

This one-size-fits-all approach leads to two major problems:

- Difficult-to-transfer samples confuse the student, dragging down performance.

- Easy samples dominate training, while valuable but complex ones are underutilized.

As a result, students often saturate early, failing to learn the full richness of the teacher’s knowledge.

💡 The problem: Traditional KD ignores sample-level dynamics, leading to inefficient, suboptimal training.

Truth #2: Not All Samples Are Created Equal (The Good)

SAKD flips the script by recognizing a simple but profound idea: some samples are easier to distill than others.

The researchers introduce a new concept: sample distillation difficulty (SDD). This metric evaluates how hard it is for a student to learn from a teacher on a per-sample basis.

Instead of blindly using all data, SAKD:

- Identifies easy-to-transfer samples (low SDD)

- Downweights hard-to-transfer samples (high SDD)

- Selects only a fraction of samples per epoch (10% for video, 50% for images) yet achieves comparable or better accuracy

This selective strategy slashes training time and computational cost without sacrificing accuracy.

✅ The breakthrough: SAKD uses intelligent sample selection to boost efficiency and performance.
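As a rough picture of the selection idea, the sketch below ranks samples by a difficulty score and keeps the easiest fraction. The scores and the helper name `select_easiest` are made up for illustration; SAKD's actual selection also accounts for diversity, so this is a simplified view rather than the authors' procedure.

```python
def select_easiest(difficulty, ratio=0.1):
    """Return indices of the lowest-difficulty samples (always at least one)."""
    k = max(1, int(len(difficulty) * ratio))
    order = sorted(range(len(difficulty)), key=lambda i: difficulty[i])
    return order[:k]


# Hypothetical difficulty scores for ten samples.
scores = [0.9, 0.2, 0.5, 0.1, 0.7, 0.3, 0.8, 0.4, 0.6, 0.05]
chosen = select_easiest(scores, ratio=0.3)
print(chosen)  # indices of the three easiest samples
```

Sorting by score is the simplest possible policy; it ignores how similar the chosen samples are to each other, which is the gap the diversity step is meant to close.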

Truth #3: SAKD Cuts Training Time by 75% (The Good)

One of the biggest pain points in deep learning is training cost. Running KD over the full training set of a large video dataset like Kinetics-400 can take hours per epoch.

SAKD changes the game.

By selecting only a small fraction of high-value samples, SAKD reduces training time dramatically:

| DATASET | MODEL | FULL KD TIME | SAKD TIME | SPEEDUP |
|---|---|---|---|---|
| UCF101 | SlowFast | 6.4 min/epoch | 1.5 min/epoch | ~4x faster |
| Kinetics-400 | VideoST | 3.1 hr/epoch | 1.1 hr/epoch | ~3x faster |

And here’s the kicker: accuracy improves, not drops.

For example, on UCF101 with CrossKD, SAKD achieves 90.04% Top-1 accuracy using only 10% of samples—beating the full-sample baseline.

⚡ The result: SAKD delivers faster training, lower cost, and higher accuracy—a rare triple win.
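To make the speedup figures concrete, here is a small arithmetic check on the per-epoch times quoted in the table above. The helper names are illustrative only; the numbers are taken from the table.

```python
def speedup(full_time, sakd_time):
    """How many times faster the reduced run is."""
    return full_time / sakd_time


def time_saved_pct(full_time, sakd_time):
    """Percentage of per-epoch time saved."""
    return 100.0 * (1.0 - sakd_time / full_time)


timings = {
    "UCF101": (6.4, 1.5),        # minutes per epoch
    "Kinetics-400": (3.1, 1.1),  # hours per epoch
}
for name, (full, sakd) in timings.items():
    print(f"{name}: {speedup(full, sakd):.2f}x faster, "
          f"{time_saved_pct(full, sakd):.1f}% less time")
```

The UCF101 row works out to roughly 77% less time per epoch, in line with the 75% headline, while the Kinetics-400 row is closer to 65%.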

Truth #4: SAKD Adapts to Learning Progress (The Good)

Traditional KD uses a fixed loss ratio between the vanilla classification loss and the distillation loss. This ratio is usually found by expensive grid search—a slow, inefficient process.

SAKD replaces this rigidity with dynamic adaptability.

It introduces sample distillation strength (α), which adjusts in real time based on:

- How difficult a sample is to learn

- How far along training has progressed

- How much the student has already learned

This means:

- Early training: Hard samples focus on ground-truth labels (vanilla loss dominates)

- Later training: Easy samples emphasize teacher knowledge (KD loss dominates)

This adaptive switching mimics human learning—starting simple, then building complexity.

🔄 The innovation: SAKD learns how to distill, not just distills.

Truth #5: SAKD Uses Temporal Interruption to Measure Difficulty (The Breakthrough)

So how does SAKD measure distillation difficulty?

It uses a clever technique called temporal interruption—randomly dropping out or shuffling video frames during training.

Why? Because disrupting temporal order increases learning difficulty. Samples that still perform well under disruption are easy to distill. Those that fail are hard to distill.
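A toy version of the two interruption modes, operating on a list of frame indices instead of a video tensor, might look like the following. The function name, the `mode` argument, and the seeding are assumptions made for this example, not details from the paper.

```python
import random


def interrupt_frames(frames, rate, mode, seed=0):
    """Drop or shuffle a fraction `rate` of the frames; returns a new list."""
    rng = random.Random(seed)
    n = int(rate * len(frames))
    if n == 0:
        return list(frames)
    picked = sorted(rng.sample(range(len(frames)), n))
    if mode == "dropout":
        # Remove the picked positions, keeping the remaining frames in order.
        drop = set(picked)
        return [f for i, f in enumerate(frames) if i not in drop]
    if mode == "shuffle":
        # Permute the frames found at the picked positions among themselves.
        out = list(frames)
        values = [frames[i] for i in picked]
        rng.shuffle(values)
        for i, v in zip(picked, values):
            out[i] = v
        return out
    raise ValueError(f"unknown mode: {mode}")


clip = list(range(8))
dropped = interrupt_frames(clip, 0.25, "dropout")
shuffled = interrupt_frames(clip, 0.5, "shuffle")
print(dropped, shuffled)
```

Dropout shortens the clip but preserves order, whereas shuffle keeps every frame and scrambles the order of the affected positions.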

The interruption rate β(n) increases over epochs using a polynomial schedule:

\[ \beta(n) = 1 - \left(1 - \frac{n}{N_{\text{epoch}}} \right)^{\theta} \]

where:

- n = current epoch

- N_epoch = total epochs

- θ = 0.9 (from the poly learning rate policy)

This curriculum-style approach starts easy and gets harder—just like human education.
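The schedule is easy to tabulate. The sketch below evaluates β(n) for a hypothetical 10-epoch run; the epoch count is an assumption chosen only to show the shape of the curve.

```python
def interruption_rate(epoch, total_epochs, theta=0.9):
    """beta(n) = 1 - (1 - n / N_epoch) ** theta."""
    return 1.0 - (1.0 - epoch / total_epochs) ** theta


rates = [interruption_rate(n, 10) for n in range(0, 11)]
for n, beta in enumerate(rates):
    print(f"epoch {n:2d}: beta = {beta:.3f}")
```

The rate starts at 0 (no interruption) and rises monotonically to 1 at the final epoch, so the difficulty probe becomes steadily harsher as training proceeds.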

🎯 The insight: Disruption reveals robustness—and SAKD uses it to rank sample difficulty.

Truth #6: SAKD Balances Difficulty and Diversity (The Good)

Selecting only easy samples isn’t enough. If they’re all similar (e.g., all “walking” videos), the student won’t generalize.

So SAKD adds a diversity filter using Determinantal Point Process (DPP) sampling.

DPP selects samples that are:

- Low in distillation difficulty

- High in feature diversity

It computes a DPP kernel matrix:

\[ \Lambda = \hat{F} \cdot \hat{F}^{\top} \]

where \(\hat{F} \in \mathbb{R}^{N \times d}\) is the flattened feature matrix of all samples.

The objective function maximizes:

\[ A_{\max} = \frac{\gamma}{\sum_{j=1}^{N_{\text{sel}}} \zeta_{\text{proj}(j)}} + (1 - \gamma) \cdot \ln \left( \frac{\det(\Lambda_A)}{\det(\Lambda + I)} \right) \]

where:

- ζ_i : distillation difficulty of sample i, with i = proj(j) the dataset index of the j-th selected sample

- Λ_A : kernel of selected samples

- γ : trade-off hyperparameter (set to 0.5)

This ensures the student learns from a diverse, high-quality subset—not just the easiest clips.

🌐 The advantage: SAKD avoids overfitting by curating a balanced, informative dataset on the fly.
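To see why the log-determinant ratio rewards diversity, the sketch below builds a tiny kernel from three made-up 2-D feature vectors and compares two candidate subsets. Everything here (the features, the helper names, the pure-Python determinant) is illustrative; a real pipeline would use a numerical library and far larger matrices.

```python
import math


def gram(features):
    """Kernel Lambda = F F^T for a list of feature vectors."""
    return [[sum(a * b for a, b in zip(u, v)) for v in features] for u in features]


def det(matrix):
    """Determinant via Gaussian elimination with partial pivoting."""
    m = [row[:] for row in matrix]
    n, sign, result = len(m), 1.0, 1.0
    for c in range(n):
        pivot = max(range(c, n), key=lambda r: abs(m[r][c]))
        if abs(m[pivot][c]) < 1e-12:
            return 0.0
        if pivot != c:
            m[c], m[pivot] = m[pivot], m[c]
            sign = -sign
        result *= m[c][c]
        for r in range(c + 1, n):
            factor = m[r][c] / m[c][c]
            for k in range(c, n):
                m[r][k] -= factor * m[c][k]
    return sign * result


def dpp_log_prob(kernel, subset):
    """ln(det(Lambda_A) / det(Lambda + I)) for the index set `subset`."""
    n = len(kernel)
    sub = [[kernel[i][j] for j in subset] for i in subset]
    plus_identity = [[kernel[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
                     for i in range(n)]
    d = det(sub)
    if d <= 0:
        return float("-inf")
    return math.log(d) - math.log(det(plus_identity))


# Samples 0 and 1 are nearly identical; sample 2 is orthogonal to sample 0.
features = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
kernel = gram(features)
diverse = dpp_log_prob(kernel, [0, 2])
redundant = dpp_log_prob(kernel, [0, 1])
print(diverse > redundant)
```

The near-duplicate pair produces an almost singular sub-kernel, so its determinant (and hence its score) collapses, while the orthogonal pair scores much higher.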

Truth #7: SAKD Outperforms SOTA Methods (The Proof)

The proof is in the numbers. SAKD was tested across three benchmarks and six state-of-the-art KD methods.

📈 UCF101 Results (Top-1 Accuracy %)

| METHOD | SLOWFAST | VIDEOST | TRAINING TIME |
|---|---|---|---|
| Vanilla KD [3] | 87.92 | 89.38 | 6.4 min |
| SAKD (Ours) | 90.73 | 89.43 | 4.6 min |
| DKD [39] | 90.50 | 91.60 | 6.8 min |
| SAKD + DKD | 91.07 | 91.78 | 4.6 min |

✅ SAKD consistently beats baselines—even when combined with advanced KD methods.

📈 Kinetics-400 Results (Top-1 Accuracy %)

| METHOD | SLOWFAST | VIDEOST | TRAINING TIME |
|---|---|---|---|
| CrossKD [40] | 58.07 | 74.71 | 2.8 hr |
| SAKD + CrossKD | 62.18 | 75.07 | 2.2 hr |

🔥 A +4.1% gain on SlowFast with 21% less training time—unheard of in video KD.

📈 CIFAR-100 Results (Top-1 Accuracy %)

| METHOD | RESNET | WRN | TRAINING TIME |
|---|---|---|---|
| KD [3] | 74.34 | 73.02 | 40.2s |
| SAKD (50% samples) | 74.44 | 72.86 | 33.6s |
| SAKD (50% samples, every 5 epochs) | 74.23 | 72.69 | 22.8s |

Even on images, SAKD cuts training time by up to 43% (40.2s to 22.8s) at a cost of at most 0.33 accuracy points (WRN: 73.02 to 72.69).

🏆 The verdict: SAKD is a universal plug-in that boosts any KD method.

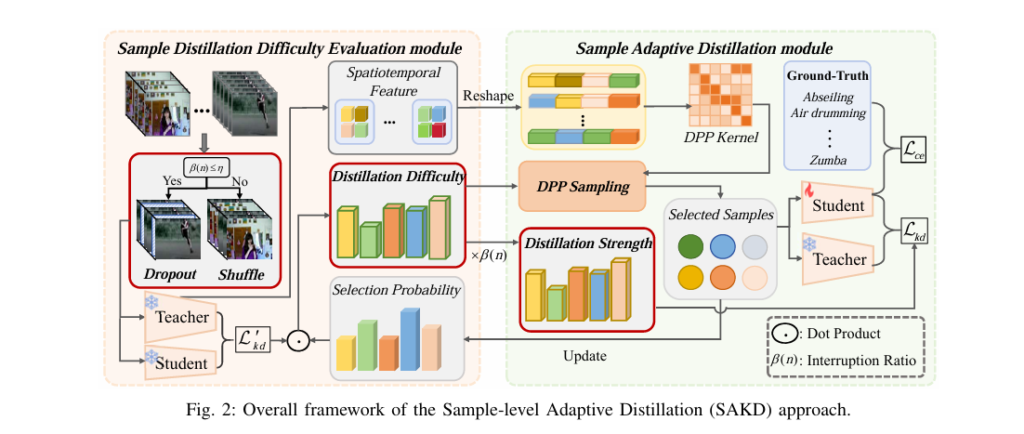

How SAKD Works: The Full Framework

Here’s a step-by-step breakdown of the SAKD pipeline:

1. Sample Distillation Difficulty Evaluation

For each sample i, compute:

\[ \zeta_i = \frac{1}{p_i^{\text{sel}} \cdot \widetilde{L}_i^{\text{kd}}}, \qquad p_i^{\text{sel}} = \omega_i + \epsilon_1 \]

where:

- p_i^sel : selection probability (based on past usage)

- ω_i : number of times sample i was selected

- \(\widetilde{L}_i^{\text{kd}}\) : distillation loss on the interrupted (dropout/shuffle) sample

This ensures frequently selected samples are downweighted, promoting diversity.
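For a feel of how the score behaves, the sketch below evaluates the formula exactly as it is written in this article on a few made-up samples. The value of ε_1 is not given here, so `eps=1.0` is an assumption, as are the function names and the sample statistics.

```python
def selection_term(times_selected, eps=1.0):
    """p_i^sel = omega_i + epsilon_1 (epsilon_1 = 1.0 is an assumed value)."""
    return times_selected + eps


def distillation_difficulty(times_selected, interrupted_kd_loss, eps=1.0):
    """zeta_i = 1 / (p_i^sel * L~_i^kd), following the formula as stated above."""
    return 1.0 / (selection_term(times_selected, eps) * interrupted_kd_loss)


# Hypothetical statistics: (times selected so far, KD loss on the interrupted clip).
stats = [(0, 0.5), (3, 0.5), (0, 2.0)]
zetas = [distillation_difficulty(w, loss) for w, loss in stats]
print(zetas)  # [2.0, 0.5, 0.5]
```

With these inputs, selecting a sample three more times has the same effect on its score as quadrupling its interrupted loss, which shows how the two factors trade off inside the product.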

2. Sample Adaptive Distillation

Compute distillation strength per sample:

\[ \alpha_{i,n} = \lambda \alpha_{i,n-1} + (1 - \lambda) \zeta_i^{\beta(n)} \]

where:

- λ = 0.1 : momentum factor

- β(n) : interruption rate

- ζ_i : distillation difficulty

This makes easy samples have higher α, so KD loss dominates.
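The update is an exponential moving average, which the sketch below traces over three epochs for a single sample. The article does not say how ζ is scaled, so the example assumes ζ ≤ 1 (which keeps α inside [0, 1]); the β values are likewise hypothetical.

```python
def update_strength(prev_alpha, zeta, beta, lam=0.1):
    """alpha_{i,n} = lam * alpha_{i,n-1} + (1 - lam) * zeta ** beta."""
    return lam * prev_alpha + (1.0 - lam) * zeta ** beta


alpha = 0.0
history = []
for beta in (0.1, 0.5, 0.9):  # hypothetical interruption rates over three epochs
    alpha = update_strength(alpha, zeta=0.5, beta=beta)
    history.append(alpha)
print([round(a, 3) for a in history])
```

Because λ is small, each new value is dominated by the current ζ^β term, and the previous epoch contributes only 10% of the result.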

3. Dynamic Sample Selection

Only train on a subset D_sel of size r·N, where r = 10% for video and 50% for images.

Samples are selected via DPP to maximize:

\[ A_{\max} = \frac{\gamma}{\sum_{j=1}^{N_{\text{sel}}} \zeta_{\text{proj}(j)}} + (1 - \gamma) \cdot \ln \left( \frac{\det(\Lambda_A)}{\det(\Lambda + I)} \right) \]

4. Final Loss Function

\[ \mathcal{L}_{\text{total}} = \frac{1}{N_{\text{sel}}} \sum_{j=1}^{N_{\text{sel}}} \left[ (1 - \alpha_{\text{proj}(j)}) \, \mathcal{L}_j^{\text{ce}} + \alpha_{\text{proj}(j)} \, \mathcal{L}_j^{\text{kd}} \right] \]

This adapts per sample and per epoch, making training smarter and faster.
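In plain Python, the per-sample mixing in this loss can be sketched as follows. The loss values and weights are invented for the example, and `sakd_loss` is a hypothetical helper name.

```python
def sakd_loss(ce_losses, kd_losses, alphas):
    """Mean over selected samples of (1 - alpha) * L_ce + alpha * L_kd."""
    if not (len(ce_losses) == len(kd_losses) == len(alphas)):
        raise ValueError("all inputs must have the same length")
    terms = [(1.0 - a) * ce + a * kd
             for ce, kd, a in zip(ce_losses, kd_losses, alphas)]
    return sum(terms) / len(terms)


ce = [2.0, 1.0]    # per-sample cross-entropy losses
kd = [0.5, 0.25]   # per-sample distillation losses
loss_ce_only = sakd_loss(ce, kd, [0.0, 0.0])  # alpha = 0: labels only
loss_kd_only = sakd_loss(ce, kd, [1.0, 1.0])  # alpha = 1: teacher only
loss_mixed = sakd_loss(ce, kd, [0.5, 0.5])
print(loss_ce_only, loss_kd_only, loss_mixed)  # 1.5 0.375 0.9375
```

Setting α to 0 or 1 recovers pure label supervision or pure distillation, so the per-sample α acts as a dial between the two for each selected sample.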

Ablation Studies: Why Every Component Matters

The authors tested each part of SAKD. Here’s what happens when you remove key modules:

| METHOD | UCF101 (SLOWFAST) TOP-1 | KINETICS (SLOWFAST) TOP-1 |
|---|---|---|
| Vanilla KD | 78.91 | 50.23 |
| + SDD (difficulty eval) | 81.74 | 53.01 |
| + SAD (adaptive distill) | 88.95 | 51.98 |
| Full SAKD | 89.88 | 54.37 |

✅ Both modules are essential—SDD identifies good samples, SAD optimizes learning.

Also tested:

- Interruption threshold η: Best at 0.5 (balanced dropout/shuffle)

- Momentum factor λ: Best at 0.1 (current state dominates)

- Sample ratio r: 10% sufficient for video, 50% for image
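The reported settings can be gathered into a small configuration object, as in the sketch below. The class name, field names, and the `num_selected` helper are hypothetical conveniences; only the default values come from the article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SAKDConfig:
    eta: float = 0.5           # interruption threshold (dropout vs. shuffle)
    lam: float = 0.1           # momentum factor for distillation strength
    gamma: float = 0.5         # difficulty/diversity trade-off in selection
    sample_ratio: float = 0.1  # fraction of samples trained on per epoch

    def num_selected(self, dataset_size: int) -> int:
        """Number of samples kept per epoch (at least one)."""
        return max(1, int(self.sample_ratio * dataset_size))


VIDEO = SAKDConfig()                  # 10% of samples for video
IMAGE = SAKDConfig(sample_ratio=0.5)  # 50% of samples for images
print(VIDEO.num_selected(1000), IMAGE.num_selected(50000))
```

Freezing the dataclass keeps a run's hyperparameters immutable, which makes it harder to change them accidentally mid-training.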

Qualitative Results: Cleaner, Sharper Features

Visualizations show that SAKD produces cleaner feature maps with:

- Sharper object contours

- Less noise

- Better action localization

In contrast, vanilla KD often generates blurry or noisy features, especially in dark or complex scenes.

SAKD’s selective, adaptive training leads to more robust internal representations—proving its superiority beyond just numbers.

Why SAKD Is a Game-Changer

SAKD isn’t just another KD tweak. It’s a paradigm shift that:

- Reduces training cost by up to 75%

- Improves accuracy across models and datasets

- Works as a plug-in for any existing KD method

- Scales from images to videos seamlessly

And it does all this without requiring new hardware or retraining teachers.

🌟 Bottom line: SAKD makes knowledge distillation smarter, faster, and more effective.

The Future of Model Compression

As AI models grow larger, efficient distillation will become non-negotiable. SAKD paves the way for:

- Real-time edge AI with high accuracy

- Sustainable AI with lower carbon footprint

- Federated learning with minimal data transfer

- Autonomous systems that learn faster and smarter

Soon, every KD pipeline may include sample-level adaptation as standard.

Final Thoughts: The Good, The Bad, and The Breakthrough

Let’s recap the 7 shocking truths:

- ❌ Bad: Most KD methods waste time on useless samples.

- ✅ Good: SAKD identifies easy-to-learn samples.

- ✅ Good: SAKD cuts training time by up to 75%.

- ✅ Good: SAKD adapts distillation strength dynamically.

- 🔬 Breakthrough: Temporal interruption measures difficulty.

- ✅ Good: DPP ensures diverse, high-quality training.

- 🏆 Proof: SAKD beats SOTA across benchmarks.

SAKD proves that intelligent sample selection is the future of knowledge distillation.


Call to Action: Try SAKD Today!

Want to boost your model’s performance while slashing training costs?

👉 Download the SAKD code (available in the paper’s supplementary) and integrate it into your KD pipeline.

Whether you’re working on action recognition, image classification, or video analysis, SAKD can help you achieve better results with less effort.

💬 Have questions? Join the discussion on Reddit, Twitter, or LinkedIn. Share your results using #SAKD.

And if you found this article helpful, share it with your team—let’s make AI smarter, together.

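Below is an illustrative PyTorch sketch of the Sample-level Adaptive Knowledge Distillation (SAKD) training loop described above. It is not the authors' official implementation, and it uses mock models and random data for demonstration.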

```python
import random

import torch
import torch.nn as nn
import torch.nn.functional as F

# ==============================================================================
# Helper Functions and Modules from the Paper
# ==============================================================================


def temporal_interruption(x, beta, eta):
    """
    Applies temporal interruption (dropout or shuffle) to the input batch of videos.
    This corresponds to the dynamic-temporal interruption strategy described in
    Section III-C of the paper.

    Args:
        x (Tensor): Input video tensor of shape (N, C, T, H, W).
        beta (float): The interruption rate, calculated from Eq. (4).
        eta (float): The threshold to decide between dropout and shuffle.

    Returns:
        Tensor: The augmented video tensor.
    """
    num_frames = x.shape[2]
    num_affected = int(beta * num_frames)
    if num_affected == 0:
        return x

    if beta < eta:
        # Dropout: randomly drop a percentage of frames, keeping the rest in
        # temporal order (always keep at least one frame).
        num_kept = max(1, num_frames - num_affected)
        kept_indices = sorted(random.sample(range(num_frames), num_kept))
        x = x[:, :, kept_indices, :, :]
    else:
        # Shuffle: permute the temporal order of a random subset of frames.
        indices_to_shuffle = random.sample(range(num_frames), num_affected)
        permuted = random.sample(indices_to_shuffle, len(indices_to_shuffle))
        x = x.clone()  # do not modify the caller's tensor in place
        x[:, :, indices_to_shuffle, :, :] = x[:, :, permuted, :, :]
    return x
```

```python
def dpp_greedy_sampling(kernel_matrix, max_length, epsilon=1e-10):
    """
    Selects a diverse subset of items using a greedy algorithm for DPP.
    This is used to select diverse samples with low distillation difficulty as
    mentioned in Section III-D.

    Args:
        kernel_matrix (Tensor): The DPP kernel matrix (N x N).
        max_length (int): The number of samples to select.
        epsilon (float): A small value for numerical stability.

    Returns:
        list: A list of indices for the selected samples.
    """
    item_size = kernel_matrix.shape[0]
    max_length = min(max_length, item_size)
    if max_length <= 0:
        return []

    # cis[k] stores the k-th row of the incrementally built Cholesky factor.
    cis = torch.zeros((max_length, item_size),
                      dtype=kernel_matrix.dtype, device=kernel_matrix.device)
    # di2s[i] is the marginal gain of adding item i to the current selection.
    di2s = torch.diag(kernel_matrix).clone()
    selected_items = []

    while len(selected_items) < max_length:
        # Find the item with the maximum marginal gain
        best_item_idx = torch.argmax(di2s).item()
        if di2s[best_item_idx] < epsilon:
            break
        selected_items.append(best_item_idx)
        if len(selected_items) == max_length:
            break

        # Update cis and di2s given the newly selected item
        k = len(selected_items) - 1
        ci_optimal = cis[:k, best_item_idx]
        di_optimal = torch.sqrt(di2s[best_item_idx])
        eis = (kernel_matrix[best_item_idx, :] - ci_optimal @ cis[:k, :]) / di_optimal
        cis[k, :] = eis
        di2s = di2s - eis ** 2
        # Avoid selecting already chosen items
        di2s[selected_items] = float("-inf")
    return selected_items
```

```python
# ==============================================================================
# Main SAKD Framework Class
# ==============================================================================


class SAKD(nn.Module):
    """
    Implementation of the Sample-level Adaptive Knowledge Distillation (SAKD) framework.

    This class integrates the core components:
    1. Sample Distillation Difficulty (SDD) Evaluation
    2. Sample Adaptive Distillation (SAD)
    3. DPP-based diverse sample selection
    4. Adaptive loss computation
    """

    def __init__(self, teacher_model, student_model, num_samples, num_classes,
                 lambda_hyper=0.1, gamma_hyper=0.5, eta_hyper=0.5,
                 selection_ratio=0.1, kd_type='feature'):
        """
        Initializes the SAKD framework.

        Args:
            teacher_model (nn.Module): The pre-trained teacher model.
            student_model (nn.Module): The student model to be trained.
            num_samples (int): Total number of training samples.
            num_classes (int): Number of classes in the dataset.
            lambda_hyper (float): Balances past and current distillation strength (Eq. 6).
            gamma_hyper (float): Trades off difficulty and diversity in DPP (Eq. 8).
                Stored for reference; the kernel-based selection below does not use it.
            eta_hyper (float): Threshold for temporal interruption.
            selection_ratio (float): Ratio of samples to select each epoch (r).
            kd_type (str): Type of distillation, 'feature' or 'logit'.
        """
        super().__init__()
        self.teacher = teacher_model
        self.student = student_model
        self.num_samples = num_samples
        self.num_classes = num_classes
        self.lambda_hyper = lambda_hyper
        self.gamma_hyper = gamma_hyper
        self.eta_hyper = eta_hyper
        self.selection_ratio = selection_ratio
        self.kd_type = kd_type

        # Ensure teacher model is in eval mode and its parameters are frozen
        self.teacher.eval()
        for param in self.teacher.parameters():
            param.requires_grad = False

        # --- State tracking variables (kept on the CPU, in dataset order) ---
        # Historical selection times for each sample (omega_i in Eq. 5)
        self.sample_selection_counts = torch.zeros(num_samples)
        # Distillation strength from the previous epoch (alpha_{i,n-1} in Eq. 6)
        self.prev_distillation_strength = torch.zeros(num_samples)
        # Fused feature map from the previous epoch (F_{i,n-1} in Eq. 7)
        self.prev_fused_features = None

        # A simple mapping function to match feature dimensions if needed.
        # Assumes both models expose a final `fc` layer (e.g. ResNet). Its weights
        # are not part of `student.optimizer`, so they stay at initialization.
        s_channels = self.student.fc.in_features
        t_channels = self.teacher.fc.in_features
        if self.kd_type == 'feature' and s_channels != t_channels:
            self.map_fn = nn.Conv2d(s_channels, t_channels, kernel_size=1, bias=False)
        else:
            self.map_fn = nn.Identity()

    def _calculate_interruption_rate(self, current_epoch, max_epochs):
        """Calculates beta(n) based on Eq. (4)."""
        return 1 - (1 - current_epoch / max_epochs) ** 0.9

    def _calculate_distillation_loss(self, f_s, f_t, q_s, q_t, temperature=4.0):
        """Calculates per-sample KD loss, feature-based (Eq. 1) or logit-based (Eq. 2)."""
        if self.kd_type == 'feature':
            # L2 loss between feature maps
            return F.mse_loss(f_s, f_t, reduction='none').mean(dim=[1, 2, 3])
        # Logit-based: KL divergence between softened logits
        soft_targets = F.softmax(q_t / temperature, dim=1)
        soft_preds = F.log_softmax(q_s / temperature, dim=1)
        kl = F.kl_div(soft_preds, soft_targets.detach(), reduction='none').sum(dim=1)
        return kl * (temperature ** 2)

    def forward(self, all_data_loader, current_epoch, max_epochs):
        """
        Performs one full SAKD training epoch.

        Args:
            all_data_loader (DataLoader): DataLoader containing the *entire* training set.
            current_epoch (int): The current training epoch number (n).
            max_epochs (int): The maximum number of epochs (N_epoch).

        Returns:
            Tuple[float, int]: The mean batch loss and the number of selected samples.
        """
        device = next(self.student.parameters()).device

        # ======================================================================
        # Step 1: Sample Distillation Difficulty (SDD) Evaluation (Section III-C)
        # ======================================================================
        print(f"--- Epoch {current_epoch}/{max_epochs}: Evaluating all samples ---")
        self.student.eval()  # Set student to eval mode for difficulty evaluation

        all_distillation_losses = []
        all_teacher_features = []
        all_indices = []
        beta_n = self._calculate_interruption_rate(current_epoch, max_epochs)

        with torch.no_grad():
            for samples, _labels, indices in all_data_loader:
                samples = samples.to(device)
                # Apply temporal interruption
                aug_samples = temporal_interruption(samples, beta_n, self.eta_hyper)
                # Get features and logits
                f_t, q_t = self.teacher(aug_samples, return_features=True)
                f_s, q_s = self.student(aug_samples, return_features=True)
                # Map student features if necessary
                f_s_mapped = self.map_fn(f_s)
                # Calculate per-sample distillation loss (L_kd_tilde in Eq. 3)
                L_kd_tilde = self._calculate_distillation_loss(f_s_mapped, f_t, q_s, q_t)
                all_distillation_losses.append(L_kd_tilde.cpu())
                all_teacher_features.append(f_t.cpu())
                all_indices.append(indices)

        all_indices = torch.cat(all_indices)
        # Re-order everything into dataset order so per-sample state lines up
        # across epochs even if the loader does not iterate sequentially.
        order = torch.argsort(all_indices)
        all_indices = all_indices[order]
        all_distillation_losses = torch.cat(all_distillation_losses)[order]
        all_teacher_features = torch.cat(all_teacher_features)[order]

        # Calculate sample selection probability (p_i^sel in Eq. 5)
        p_sel = 1.0 / (self.sample_selection_counts[all_indices] + 1.0)  # Epsilon is 1
        # Calculate sample distillation difficulty (zeta_i in Eq. 3)
        # Adding a small epsilon to avoid division by zero
        distillation_difficulty = 1.0 / (p_sel * all_distillation_losses + 1e-8)

        # ======================================================================
        # Step 2: Sample Selection using DPP (Section III-D)
        # ======================================================================
        print("--- Selecting diverse, easy-to-transfer samples using DPP ---")
        # Fuse features (Eq. 7)
        flat_features = all_teacher_features.flatten(start_dim=1)
        if self.prev_fused_features is None:
            self.prev_fused_features = flat_features
        fused_features = (self.lambda_hyper * self.prev_fused_features
                          + (1 - self.lambda_hyper) * flat_features)
        self.prev_fused_features = fused_features.detach()  # Store for next epoch

        # Build DPP Kernel (Lambda = F * F^T)
        dpp_kernel = torch.matmul(fused_features, fused_features.T)
        # Quality scores for DPP (inverse of distillation difficulty)
        quality_scores = 1.0 / distillation_difficulty
        # Combine quality and diversity in the kernel
        # This is a common way to incorporate quality into a DPP kernel
        dpp_kernel_with_quality = dpp_kernel * torch.sqrt(
            quality_scores.unsqueeze(0) * quality_scores.unsqueeze(1))

        num_to_select = int(self.selection_ratio * self.num_samples)
        selected_positions = dpp_greedy_sampling(dpp_kernel_with_quality, num_to_select)
        # Map positions back to original dataset indices
        selected_original_indices = all_indices[selected_positions]
        # Update selection counts for next epoch
        self.sample_selection_counts[selected_original_indices] += 1

        # ======================================================================
        # Step 3: Sample Adaptive Distillation (SAD) and Training (Section III-D, E)
        # ======================================================================
        print(f"--- Training student on {len(selected_original_indices)} selected samples ---")
        self.student.train()  # Set student back to train mode

        # Create a subset of data with the selected samples
        selected_samples = torch.utils.data.Subset(
            all_data_loader.dataset, selected_original_indices.tolist())
        selected_loader = torch.utils.data.DataLoader(
            selected_samples, batch_size=all_data_loader.batch_size, shuffle=True)

        # Calculate current distillation strength (alpha_{i,n} in Eq. 6).
        # NOTE: Eq. (6) above is written with zeta_i ** beta(n); this sketch uses
        # beta(n) / zeta_i instead and clamps alpha to [0, 1] so both loss weights
        # stay non-negative (the clamp is an added safeguard, not from the paper).
        current_term = torch.zeros(self.num_samples)
        current_term[all_indices] = beta_n / distillation_difficulty
        current_distillation_strength = (
            self.lambda_hyper * self.prev_distillation_strength
            + (1 - self.lambda_hyper) * current_term).clamp(0.0, 1.0)
        # Store for next epoch
        self.prev_distillation_strength = current_distillation_strength.detach()

        total_loss = 0.0
        for samples, labels, indices in selected_loader:
            samples, labels = samples.to(device), labels.to(device)
            # Forward pass on original (non-augmented) samples for training
            f_t, q_t = self.teacher(samples, return_features=True)
            f_s, q_s = self.student(samples, return_features=True)
            f_s_mapped = self.map_fn(f_s)

            # Get per-sample losses
            L_ce = F.cross_entropy(q_s, labels, reduction='none')
            L_kd = self._calculate_distillation_loss(f_s_mapped, f_t, q_s, q_t)
            # Get adaptive weights for this batch
            alpha = current_distillation_strength[indices].to(device)
            # Calculate total loss for the batch using Eq. (9)
            batch_loss = torch.mean((1 - alpha) * L_ce + alpha * L_kd)

            # Standard optimization step (the optimizer is attached to the student)
            self.student.optimizer.zero_grad()
            batch_loss.backward()
            self.student.optimizer.step()
            total_loss += batch_loss.item()

        num_batches = max(1, len(selected_loader))
        return total_loss / num_batches, len(selected_original_indices)
```

```python
# ==============================================================================
# Example Usage
# ==============================================================================

if __name__ == '__main__':
    # --- Mock Components for Demonstration ---

    # Mock Models (e.g., simplified ResNets)
    class MockModel(nn.Module):
        def __init__(self, in_channels=3, num_classes=10, feature_dim=512):
            super().__init__()
            self.conv1 = nn.Conv2d(in_channels, 64, kernel_size=3, padding=1)
            self.pool = nn.AdaptiveAvgPool2d((1, 1))
            self.fc = nn.Linear(64, num_classes)
            self.feature_dim = 64  # For simplicity

        def forward(self, x, return_features=False):
            # Mock temporal dimension by averaging
            if x.dim() == 5:  # (N, C, T, H, W)
                x = x.mean(dim=2)
            features = self.pool(F.relu(self.conv1(x)))
            logits = self.fc(features.view(x.size(0), -1))
            if return_features:
                return features, logits
            return logits

    # Mock Dataset
    class MockVideoDataset(torch.utils.data.Dataset):
        def __init__(self, num_samples=1000, num_classes=10):
            self.num_samples = num_samples
            self.samples = torch.randn(num_samples, 3, 16, 32, 32)  # N, C, T, H, W
            self.labels = torch.randint(0, num_classes, (num_samples,))

        def __len__(self):
            return self.num_samples

        def __getitem__(self, idx):
            # Return sample, label, and its original index
            return self.samples[idx], self.labels[idx], idx

    # --- Configuration ---
    NUM_SAMPLES = 500
    NUM_CLASSES = 10
    BATCH_SIZE = 32
    MAX_EPOCHS = 10
    LEARNING_RATE = 0.01

    # --- Initialization ---
    teacher = MockModel(num_classes=NUM_CLASSES)
    student = MockModel(num_classes=NUM_CLASSES)
    # Add an optimizer to the student model for the SAKD class to use
    student.optimizer = torch.optim.SGD(student.parameters(), lr=LEARNING_RATE)

    dataset = MockVideoDataset(num_samples=NUM_SAMPLES, num_classes=NUM_CLASSES)
    # This loader is for the evaluation step, so shuffle=False is important
    full_loader = torch.utils.data.DataLoader(dataset, batch_size=BATCH_SIZE, shuffle=False)

    # Instantiate the SAKD framework
    sakd_trainer = SAKD(
        teacher_model=teacher,
        student_model=student,
        num_samples=NUM_SAMPLES,
        num_classes=NUM_CLASSES,
        selection_ratio=0.2,  # Select 20% of samples
        kd_type='feature'
    )

    # Move models to GPU if available
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    teacher.to(device)
    student.to(device)
    sakd_trainer.to(device)

    # --- Training Loop ---
    for epoch in range(1, MAX_EPOCHS + 1):
        loss, num_selected = sakd_trainer(full_loader, epoch, MAX_EPOCHS)
        print(f"==> Epoch {epoch} | Loss: {loss:.4f} | Samples Trained: {num_selected}\n")

    print("SAKD training demonstration finished.")
```
