In the rapidly evolving world of deep learning, deploying high-performance models on resource-constrained devices remains a critical challenge—especially for dense visual prediction tasks like object detection and semantic segmentation. These tasks are essential in real-time applications such as autonomous driving, video surveillance, and robotics. While large, deep neural networks deliver impressive accuracy, their computational demands make them impractical for edge deployment.

Enter Knowledge Distillation (KD)—a powerful model compression technique that transfers knowledge from a large, high-capacity “teacher” model to a smaller, efficient “student” model. However, traditional KD methods often fall short in dynamic, dense prediction scenarios due to their reliance on static, teacher-driven feature selection.

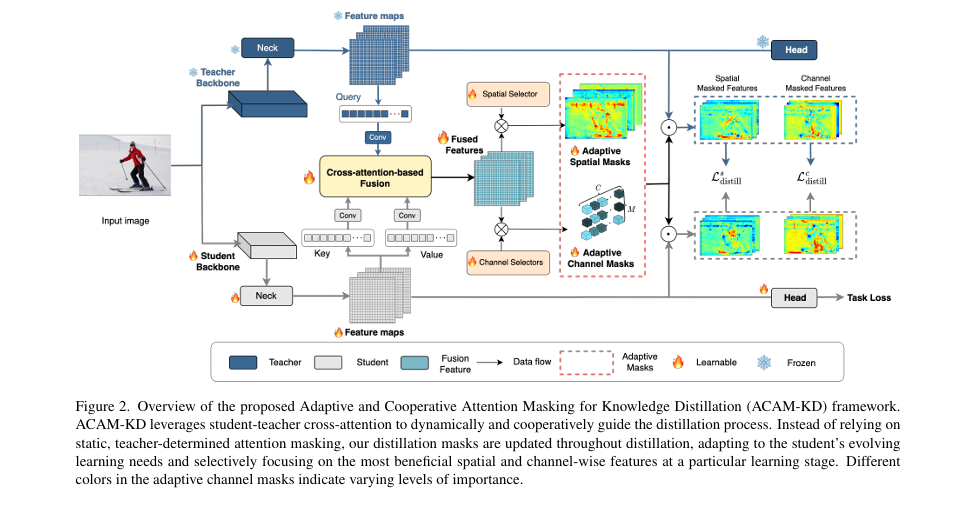

To overcome these limitations, researchers Qizhen Lan and Qing Tian from the University of Alabama at Birmingham introduced ACAM-KD: Adaptive and Cooperative Attention Masking for Knowledge Distillation, a groundbreaking framework that redefines how knowledge is transferred in deep learning models.

In this article, we’ll explore how ACAM-KD enhances feature-based knowledge distillation through adaptive student-teacher interactions, cross-attention fusion, and dynamic spatial-channel masking—resulting in state-of-the-art performance across object detection and semantic segmentation benchmarks.

What Is ACAM-KD?

ACAM-KD (Adaptive and Cooperative Attention Masking for Knowledge Distillation) is a novel knowledge distillation framework designed specifically for dense visual prediction tasks. Unlike conventional KD methods that rely on fixed or teacher-defined attention maps, ACAM-KD introduces a cooperative learning mechanism where both the teacher and student dynamically interact to identify the most valuable features for distillation.

The core innovation lies in two key components:

- Student-Teacher Cross-Attention Feature Fusion (STCA-FF)

- Adaptive Spatial-Channel Masking (ASCM)

Together, these modules enable adaptive, evolving feature selection that responds to the student’s learning progress—ensuring more efficient and effective knowledge transfer.

The Problem with Traditional Knowledge Distillation

Before diving into ACAM-KD, it’s important to understand the shortcomings of existing KD methods.

Most feature-based knowledge distillation approaches assume that the most important regions for distillation can be determined solely by the teacher’s attention maps or predefined heuristics (e.g., bounding boxes, prediction confidence). While this works to some extent, it has several critical flaws:

- ❌ Static Feature Selection: The same regions are emphasized throughout training, even after the student has already learned them.

- ❌ Teacher-Centric Bias: The student is forced to mimic the teacher, even if the teacher’s attention is suboptimal.

- ❌ Neglect of Channel-Wise Importance: Most methods focus only on spatial regions, ignoring the varying importance of different feature channels.

- ❌ Lack of Student Autonomy: The student plays a passive role, with no ability to guide the distillation process based on its evolving understanding.

As shown in Figure 1 of the paper, a student model may initially develop better attention localization than the teacher but eventually regresses to mimic the teacher’s fixed pattern—hindering further improvement.

Introducing ACAM-KD: A Smarter Way to Distill Knowledge

ACAM-KD addresses these issues by enabling cooperative, adaptive knowledge transfer. Instead of blindly following the teacher, the student actively participates in selecting which features to learn, based on both teacher guidance and its own evolving representations.

Let’s break down the two core components of ACAM-KD.

1. Student-Teacher Cross-Attention Feature Fusion (STCA-FF)

The STCA-FF module enables dynamic interaction between the teacher and student by fusing their features using cross-attention.

Here’s how it works:

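\[ Q = W_q F_T, \quad K = W_k F_S, \quad V = W_v F_S \]

\[ \text{where } \; W_q, W_k \in \mathbb{R}^{C_q \times C} \;\; \text{reduce the channels to } C_q = \tfrac{C}{2}, \;\; \text{and } W_v \in \mathbb{R}^{C \times C} \;\; \text{preserves the dimension.} \]

Treating each projected map as \(HW\) tokens, so that \(Q, K \in \mathbb{R}^{HW \times C_q}\) and \(V \in \mathbb{R}^{HW \times C}\):

\[ A = \text{softmax}\!\left(\frac{Q K^{T}}{\sqrt{C_q}}\right) \in \mathbb{R}^{H W \times H W} \]

\[ F_{\text{fused}} = A V \in \mathbb{R}^{HW \times C}, \quad \text{reshaped to } C \times H \times W \]

The queries come from the teacher, and the keys and values come from the student. Each location of the fused map is therefore a weighted combination of student features, weighted by how well they match the teacher's query at that location. The fused map carries both the teacher's guidance and the student's current representation, which makes the knowledge transfer collaborative.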
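The sketch below makes the shapes in these equations concrete. It computes the teacher-query, student-key/value cross-attention for a single unbatched feature map in NumPy. It illustrates the formulas under stated assumptions and is not the authors' implementation. The function name `stca_fuse`, the random weights, and the requirement that teacher and student maps share C, H and W are all assumptions made for this example.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def stca_fuse(f_t, f_s, w_q, w_k, w_v):
    """Hypothetical helper: cross-attention with teacher queries and student keys/values.

    f_t, f_s: (C, H, W) teacher and student features (assumed to share a shape).
    w_q, w_k: (C_q, C) projections; w_v: (C, C) projection.
    Returns the fused map (C, H, W) and the attention matrix A (HW, HW).
    """
    c, h, w = f_s.shape
    t = f_t.reshape(c, h * w)                      # teacher tokens, (C, HW)
    s = f_s.reshape(c, h * w)                      # student tokens, (C, HW)
    q = (w_q @ t).T                                # queries from the teacher, (HW, C_q)
    k = (w_k @ s).T                                # keys from the student, (HW, C_q)
    v = (w_v @ s).T                                # values from the student, (HW, C)
    c_q = w_q.shape[0]
    a = softmax(q @ k.T / np.sqrt(c_q), axis=-1)   # (HW, HW), each row sums to 1
    fused = (a @ v).T.reshape(c, h, w)             # (HW, C) -> (C, H, W)
    return fused, a

rng = np.random.default_rng(0)
C, H, W = 8, 4, 5
f_t = rng.standard_normal((C, H, W))
f_s = rng.standard_normal((C, H, W))
w_q = rng.standard_normal((C // 2, C))             # C_q = C / 2
w_k = rng.standard_normal((C // 2, C))
w_v = rng.standard_normal((C, C))
fused, attn = stca_fuse(f_t, f_s, w_q, w_k, w_v)
print(fused.shape, attn.shape)  # (8, 4, 5) (20, 20)
```

Dividing by \(\sqrt{C_q}\) is the usual scaled dot-product choice. It keeps the softmax logits from growing with the projected channel count, which would otherwise push the attention toward one-hot rows.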

2. Adaptive Spatial-Channel Masking (ASCM)

Once the features are fused, ACAM-KD applies adaptive masking to selectively emphasize important regions in both spatial and channel dimensions.

Unlike fixed masks, ASCM generates dynamic masks that evolve as the student learns.

Channel-Wise Masking

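A learnable channel selection unit \( m_c \in \mathbb{R}^{M} \) generates the channel masks:

\[ M_c = \sigma(m_c \cdot v), \quad \text{where } v = \text{GlobalAvgPool}(F_{\text{fused}}) \]

Here, σ is the sigmoid function, and \( v \in \mathbb{R}^{1 \times C} \) captures channel-wise statistics. Read \( m_c \) as an \( M \times 1 \) column. The product with the \( 1 \times C \) row \( v \) is then an outer product, so \( M_c \in \mathbb{R}^{M \times C} \): one weight per channel for each of the M masks.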
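The NumPy sketch below shows the channel-mask step for a single unbatched fused map. It assumes, as the dimensions above suggest, that \( m_c \cdot v \) is an outer product of an M-vector and a C-vector. The helper name `channel_masks` and the toy values are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_masks(fused, m_c):
    """Hypothetical helper. fused: (C, H, W) fused features; m_c: (M,) selection unit.

    Returns M channel masks stacked as an (M, C) array.
    """
    v = fused.mean(axis=(1, 2))        # GlobalAvgPool: one statistic per channel, (C,)
    return sigmoid(np.outer(m_c, v))   # (M, 1) x (1, C) outer product -> (M, C)

# Toy input: three channels with constant values 2, 0 and -2
fused = np.stack([np.full((2, 2), 2.0), np.zeros((2, 2)), np.full((2, 2), -2.0)])
m_c = np.array([1.0, -1.0])            # two masks with opposite selector signs
masks = channel_masks(fused, m_c)
print(masks.round(3))  # first row emphasizes channel 0, second row emphasizes channel 2
```

In this reading, the masks differ only in the sign and scale of their selector. The diversity loss introduced later is what pushes them apart.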

Spatial Masking

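A spatial selection unit \( m_s \in \mathbb{R}^{M \times C} \) generates the spatial masks:

\[ M_s = \sigma(m_s \cdot z), \quad \text{where } z = \text{Flatten}(F_{\text{fused}}) \in \mathbb{R}^{C \times HW} \]

This gives \( M_s \in \mathbb{R}^{M \times HW} \), one \( H \times W \) map per mask. These masks are applied to the distillation loss: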

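\[ \mathcal{L}_{\text{distill}} = \frac{1}{M \cdot C \cdot H \cdot W} \sum_{m=1}^{M} \left\| M_m \odot \big( F_T - f_{\text{align}}(F_S) \big) \right\|_2^2 \]

Here \( f_{\text{align}} \) maps the student features to the teacher's channel dimension. \( M_m \) is the m-th mask: a channel mask is broadcast over \( H \times W \), and a spatial mask is broadcast over \( C \). Applying the formula with each mask type gives \( \mathcal{L}_{\text{distill}}^{\text{spatial}} \) and \( \mathcal{L}_{\text{distill}}^{\text{channel}} \).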

By optimizing both spatial and channel-wise distillation losses, ACAM-KD ensures comprehensive feature alignment.
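Here is a minimal NumPy sketch of the spatial masks and the masked distillation loss for one unbatched sample. It rests on three assumptions. First, \( f_{\text{align}} \) is the identity, so teacher and student share C. Second, a random array stands in for the STCA-FF output. Third, the helper names `spatial_masks` and `masked_distill_loss` are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def spatial_masks(fused, m_s):
    """Hypothetical helper. fused: (C, H, W); m_s: (M, C). Returns (M, H, W)."""
    c, h, w = fused.shape
    z = fused.reshape(c, h * w)                   # Flatten -> (C, HW)
    return sigmoid(m_s @ z).reshape(-1, h, w)     # (M, HW) -> (M, H, W)

def masked_distill_loss(f_t, f_s_aligned, masks, kind):
    """L_distill for one mask type: the sum of squared masked differences
    divided by M*C*H*W, i.e. the mean over all (M, C, H, W) elements.

    masks: (M, C) when kind == "channel", (M, H, W) when kind == "spatial".
    """
    diff = f_t - f_s_aligned                            # (C, H, W)
    if kind == "channel":
        masked = masks[:, :, None, None] * diff[None]   # broadcast over H and W
    elif kind == "spatial":
        masked = masks[:, None, :, :] * diff[None]      # broadcast over C
    else:
        raise ValueError(f"unknown mask kind: {kind}")
    return float(np.mean(masked ** 2))

rng = np.random.default_rng(0)
C, H, W, M = 4, 3, 3, 2
f_t = rng.standard_normal((C, H, W))
f_s = rng.standard_normal((C, H, W))     # assumed already passed through f_align
fused = rng.standard_normal((C, H, W))   # stand-in for the STCA-FF output
m_s = rng.standard_normal((M, C))
loss_s = masked_distill_loss(f_t, f_s, spatial_masks(fused, m_s), "spatial")
print(loss_s)
```

Every mask value lies in (0, 1), so the masked loss can never exceed the plain mean-squared feature difference. The masks only redistribute emphasis across channels or locations.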

Mask Diversity: Preventing Redundancy

To avoid all masks collapsing into similar patterns, ACAM-KD introduces a Dice coefficient-based diversity loss:

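\[ L_{\text{div}} = \sum_{i=1}^{M} \sum_{j \neq i} \frac{2\, M_i \cdot M_j}{\lVert M_i \rVert^2 + \lVert M_j \rVert^2} \]

Each term is the Dice coefficient between masks \( i \) and \( j \). It equals 1 when two masks are identical and 0 when they do not overlap, so minimizing \( L_{\text{div}} \) penalizes overlap between different masks.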

This encourages complementary mask patterns, ensuring broader and more informative knowledge transfer.
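The NumPy sketch below implements the pairwise Dice form of the diversity loss, assuming masks of any shape stacked along the first axis. It mirrors the `_dice_loss` method in the PyTorch code at the end of this article, minus the batch dimension. The function name `dice_diversity_loss` and the small `eps` guard are choices made for this example.

```python
import numpy as np

def dice_diversity_loss(masks, eps=1e-6):
    """Hypothetical helper. masks: (M, ...) array holding M masks of any shape.

    Returns the sum over ordered pairs i != j of the Dice coefficient
    2 <M_i, M_j> / (||M_i||^2 + ||M_j||^2); lower means more diverse masks.
    """
    flat = masks.reshape(masks.shape[0], -1)          # (M, D)
    inner = flat @ flat.T                             # pairwise dot products, (M, M)
    sq_norms = np.sum(flat ** 2, axis=1)              # squared norms, (M,)
    dice = 2.0 * inner / (sq_norms[:, None] + sq_norms[None, :] + eps)
    np.fill_diagonal(dice, 0.0)                       # drop self-similarity terms
    return float(dice.sum())

identical = np.array([[1.0, 1.0, 0.0], [1.0, 1.0, 0.0]])
disjoint = np.array([[1.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
print(dice_diversity_loss(identical))  # close to 2.0: both ordered pairs fully overlap
print(dice_diversity_loss(disjoint))   # 0.0: no overlap
```

Summing over ordered pairs counts each unordered pair twice, which matches the \( j \neq i \) double sum. Averaging instead of summing would only rescale the loss, and λ would absorb that.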

The Full ACAM-KD Training Objective

The total loss function combines task performance, distillation, and diversity:

\[ L = L_{\text{task}} \;+\; \alpha \big( L_{\text{distill}}^{\text{spatial}} + L_{\text{distill}}^{\text{channel}} \big) \;+\; \lambda L_{\text{div}} \]

In experiments, α = 1 and λ = 1 yielded strong results.

Benchmark Results: ACAM-KD Outperforms State-of-the-Art

ACAM-KD was rigorously evaluated on object detection (COCO2017) and semantic segmentation (Cityscapes) tasks, consistently outperforming existing KD methods.

📊 Object Detection on COCO2017

| Method | Teacher | Student | mAP | AP50 | AP75 | APS | APM | APL |
|---|---|---|---|---|---|---|---|---|
| Baseline | R101 | R50 | 37.4 | 56.7 | 39.6 | 20.0 | 40.7 | 49.7 |
| FGD [24] | R101 | R50 | 39.6 | – | – | 22.9 | 43.7 | 53.6 |
| MasKD [9] | R101 | R50 | 39.8 | 59.0 | 42.5 | 21.5 | 43.9 | 54.0 |
| ACAM-KD (Ours) | R101 | R50 | 41.2 | 60.6 | 44.1 | 24.6 | 45.5 | 54.1 |

👉 +1.4 mAP improvement over the previous best method.

When using a ResNeXt-101 teacher, ACAM-KD achieves even greater gains—up to +4.2 mAP across different detector architectures.

🎨 Semantic Segmentation on Cityscapes

| Student model | Baseline mIoU | Best prior KD | ACAM-KD | Gain vs. baseline | Gain vs. best prior |
|---|---|---|---|---|---|
| DeepLabV3-R18 | 72.96 | 77.00 (FreeKD) | 77.53 | +4.57 | +0.53 |
| DeepLabV3-MBV2 | 73.12 | 75.42 (MasKD) | 76.21 | +3.09 | +0.79 |
| PSPNet-R18 | 72.55 | 75.34 (MasKD) | 75.99 | +3.44 | +0.65 |

👉 ACAM-KD improves mIoU by up to 4.57 points over the baseline (DeepLabV3-R18) and by up to 0.79 points over the best prior KD method (DeepLabV3-MBV2).
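You can recompute the gains directly from the reported mIoU values to check them. This small Python sketch uses the numbers copied from the Cityscapes table. The dictionary layout is just a convenience for this example.

```python
# Cityscapes mIoU values copied from the table: (baseline, best prior KD, ACAM-KD)
results = {
    "DeepLabV3-R18": (72.96, 77.00, 77.53),
    "DeepLabV3-MBV2": (73.12, 75.42, 76.21),
    "PSPNet-R18": (72.55, 75.34, 75.99),
}

# Gain of ACAM-KD over the baseline and over the best prior KD method, in mIoU points
gains = {
    name: (round(acam - base, 2), round(acam - prior, 2))
    for name, (base, prior, acam) in results.items()
}
for name, (vs_base, vs_prior) in gains.items():
    print(f"{name}: +{vs_base:.2f} vs. baseline, +{vs_prior:.2f} vs. best prior")
```

Rounding to two decimals removes floating-point noise from the subtractions, so the printed values match the precision of the table.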

Why ACAM-KD Works: Key Advantages

| Feature | Benefit |
|---|---|
| ✅ Cross-Attention Fusion | Couples teacher and student features, so the student learns with the teacher, not just from it |
| ✅ Dynamic Masking | Masks adapt as the student learns, avoiding redundant focus on already-mastered regions |
| ✅ Spatial + Channel Masking | Selects features along both the spatial and the channel dimensions |
| ✅ Mask Diversity Loss | Prevents redundant masks and promotes broader knowledge transfer |
| ✅ Student-Centric Learning | Lets the student steer distillation according to its evolving needs |

Runtime and Efficiency Analysis

ACAM-KD's extra modules only shape the training loss. The deployed model is the student alone and keeps its own inference cost. Tables 6 and 7 of the paper show that student models (e.g., ResNet-50, MobileNetV2) achieve high FPS and low memory usage compared with the much larger teacher models.

| Model | FLOPs (G) | CUDA memory (MB) | FPS (A100) |
|---|---|---|---|
| RetinaNet-R50 | 215 | 148 | 41.9 |
| DeepLabV3-R18 | 120 | 568 | 59.2 |
| DeepLabV3-MBV2 | 31.14 | 470 | 52.9 |

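DeepLabV3-MBV2 needs roughly a quarter of the FLOPs of DeepLabV3-R18 (31.14 G vs. 120 G), yet it runs slower (52.9 vs. 59.2 FPS). Its depthwise convolutions do little arithmetic per byte of memory traffic and make poor use of GPU compute. FLOPs are therefore not a reliable proxy for real-world speed.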

Ablation Studies: What Really Matters?

The paper includes detailed ablation studies confirming ACAM-KD’s design choices.

🔍 Spatial vs. Channel Masking

| Method | mAP | APS |
|---|---|---|
| Spatial Only | 40.9 | 25.4 |
| Channel Only | 40.4 | 24.5 |
| Spatial + Channel (Ours) | 41.2 | 24.6 |

👉 Combining both mask types gives the best overall mAP (41.2), although spatial-only masking scores higher on small objects (APS 25.4 vs. 24.6).

🔍 Query Source in Cross-Attention

| Query from | mAP |
|---|---|
| Student | 41.0 |
| Teacher (Ours) | 41.2 |

👉 Using the teacher as the query source gives slightly better guidance (+0.2 mAP).

🔍 Fixed vs. Adaptive Masking

| Strategy | mAP |
|---|---|
| No Masking | 37.4 |
| Fixed Teacher Mask | 39.8 |
| Adaptive Teacher Mask | 39.9 |
| ACAM-KD (Cooperative) | 41.2 |

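👉 Student-teacher cooperation is the key ingredient. Making the teacher mask adaptive adds only 0.1 mAP over a fixed one, while cooperative masking adds a further 1.3 mAP (41.2 vs. 39.9).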

Real-World Applications

ACAM-KD is ideal for:

- 🚗 Autonomous Vehicles: Fast, accurate object detection with lightweight models

- 🏙️ Smart Cities: Real-time video surveillance using efficient segmentation

- 📱 Mobile Vision Apps: On-device AI with low latency and power consumption

- 🤖 Robotics: Dense prediction for navigation and interaction

By enabling high accuracy with low compute, ACAM-KD bridges the gap between research and deployment.

Conclusion: The Future of Knowledge Distillation

ACAM-KD represents a paradigm shift in knowledge distillation. By replacing static, teacher-driven supervision with adaptive, cooperative learning, it unlocks new levels of performance in dense prediction tasks.

Its key innovations—cross-attention fusion, adaptive spatial-channel masking, and diversity regularization—make it a versatile, powerful framework for compressing deep models without sacrificing accuracy.

As edge AI continues to grow, methods like ACAM-KD will be essential for building fast, efficient, and intelligent vision systems.

🔎 Ready to Try ACAM-KD?

Want to implement ACAM-KD in your own projects?

👉 Download the paper: ACAM-KD: Adaptive and Cooperative Attention Masking for Knowledge Distillation (arXiv:2503.06307)

👉 Explore code on GitHub (when released)

👉 Integrate into MMDetection or MMSegmentation for object detection and segmentation tasks

Have questions? Drop a comment below or reach out to the authors at {qlan, qtian}@uab.edu.


Call to Action:

📚 Liked this breakdown? Share it with your team!

💡 Working on model compression? Try ACAM-KD and tag us with your results.

📩 Subscribe for more AI research deep dives every week.

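Below is a Python (PyTorch) implementation of the ACAM-KD (Adaptive and Cooperative Attention Masking for Knowledge Distillation) loss, reconstructed from the paper's description. It is an unofficial sketch, not the authors' code. It wraps STCA-FF, ASCM, the masked distillation losses, and the diversity loss around a ResNet-101 teacher and a ResNet-50 student.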

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
import torchvision.models as models

# ----------------------------------------------------------------------------
# Section 3.1: Student-Teacher Cross-Attention Feature Fusion (STCA-FF)
# ----------------------------------------------------------------------------
class STCAFF(nn.Module):
    """
    Student-Teacher Cross-Attention Feature Fusion (Section 3.1).

    Queries come from the teacher's features; keys and values come from the
    student's features. Teacher and student maps must share H and W.
    """

    def __init__(self, in_channels_t, in_channels_s):
        """
        Args:
            in_channels_t (int): Number of channels in the teacher's feature map.
            in_channels_s (int): Number of channels in the student's feature map.
        """
        super().__init__()
        # As per Equation (2), C_q = C / 2 (at least 1)
        inter_channels = max(in_channels_s // 2, 1)
        # 1x1 convolutions that project features into Query, Key and Value
        self.query_conv = nn.Conv2d(in_channels_t, inter_channels, kernel_size=1)  # W_q (teacher)
        self.key_conv = nn.Conv2d(in_channels_s, inter_channels, kernel_size=1)    # W_k (student)
        self.value_conv = nn.Conv2d(in_channels_s, in_channels_s, kernel_size=1)   # W_v (student)
        self.inter_channels = inter_channels

    def forward(self, feat_t, feat_s):
        """
        Args:
            feat_t (torch.Tensor): Teacher feature map (N, C_t, H, W).
            feat_s (torch.Tensor): Student feature map (N, C_s, H, W).
        Returns:
            torch.Tensor: Fused feature map (N, C_s, H, W).
        """
        batch_size, _, h, w = feat_s.size()
        # Equation (2): project features to Q, K, V
        q = self.query_conv(feat_t).view(batch_size, self.inter_channels, -1).permute(0, 2, 1)  # (N, HW, Cq)
        k = self.key_conv(feat_s).view(batch_size, self.inter_channels, -1)                     # (N, Cq, HW)
        v = self.value_conv(feat_s).view(batch_size, -1, h * w).permute(0, 2, 1)                # (N, HW, C)
        # Equation (3): A = softmax(Q K^T / sqrt(Cq))
        attention = F.softmax(torch.matmul(q, k) / (self.inter_channels ** 0.5), dim=-1)       # (N, HW, HW)
        # Equation (4): F_fused = A V, reshaped back to (N, C, H, W)
        fused = torch.matmul(attention, v).permute(0, 2, 1).contiguous()
        return fused.view(batch_size, -1, h, w)

# ----------------------------------------------------------------------------
# Section 3.2: Adaptive Spatial-Channel Masking (ASCM)
# ----------------------------------------------------------------------------
class ASCM(nn.Module):
    """
    Adaptive Spatial-Channel Masking (Section 3.2): generates M channel masks
    and M spatial masks from the fused features.
    """

    def __init__(self, in_channels, num_masks):
        """
        Args:
            in_channels (int): Number of channels in the fused feature map.
            num_masks (int): The number of masks (M) to generate.
        """
        super().__init__()
        self.num_masks = num_masks
        # Channel selection unit m^c in R^M (one scalar per mask)
        self.channel_selectors = nn.Parameter(torch.randn(num_masks))
        # Spatial selection unit m^s in R^{M x C}; scaled by 1/sqrt(C) so the
        # sigmoid does not saturate at initialization
        self.spatial_selectors = nn.Parameter(torch.randn(num_masks, in_channels) / in_channels ** 0.5)

    def forward(self, fused_features):
        """
        Returns:
            channel_masks (N, M, C) and spatial_masks (N, M, H, W).
        """
        batch_size, C, H, W = fused_features.size()
        # Channel masks: M^c = sigma(m^c v), v = GAP(F_fused) in R^{1 x C}.
        # m^c (M x 1) times v (1 x C) is an outer product, giving (N, M, C)
        v = F.adaptive_avg_pool2d(fused_features, 1).view(batch_size, 1, C)
        channel_masks = torch.sigmoid(self.channel_selectors.view(1, -1, 1) * v)
        # Spatial masks: M^s = sigma(m^s z), z = Flatten(F_fused) in R^{C x HW}
        z = fused_features.view(batch_size, C, H * W)
        spatial_masks = torch.sigmoid(torch.matmul(self.spatial_selectors, z))  # (N, M, HW)
        return channel_masks, spatial_masks.view(batch_size, self.num_masks, H, W)

# ----------------------------------------------------------------------------
# Section 3.3: Overall Loss
# ----------------------------------------------------------------------------
class ACAMKDLoss(nn.Module):
    """
    Combines STCA-FF, ASCM, the masked distillation losses and the Dice-based
    diversity loss into the total ACAM-KD objective.
    """

    def __init__(self, in_channels_t, in_channels_s, num_masks=6, alpha=1.0, lambda_div=1.0):
        """
        Args:
            in_channels_t (int): Teacher feature channels.
            in_channels_s (int): Student feature channels.
            num_masks (int): Number of masks (M).
            alpha (float): Weight for the distillation losses.
            lambda_div (float): Weight for the diversity loss.
        """
        super().__init__()
        self.alpha = alpha
        self.lambda_div = lambda_div
        self.num_masks = num_masks
        # f_align: maps student channels to teacher channels when they differ
        if in_channels_s != in_channels_t:
            self.align_layer = nn.Conv2d(in_channels_s, in_channels_t, kernel_size=1)
        else:
            self.align_layer = nn.Identity()
        # Fusion and masking run on the aligned student features, so the masks
        # have the teacher's channel count and can be applied to the difference
        self.stcaff = STCAFF(in_channels_t, in_channels_t)
        self.ascm = ASCM(in_channels_t, num_masks)

    def _dice_loss(self, masks):
        """Sum over pairs i != j of 2<M_i, M_j> / (||M_i||^2 + ||M_j||^2), averaged over the batch."""
        masks = masks.reshape(masks.size(0), self.num_masks, -1)   # (N, M, D)
        numerator = 2 * torch.matmul(masks, masks.transpose(1, 2))  # (N, M, M)
        sq_norms = masks.pow(2).sum(dim=2, keepdim=True)            # (N, M, 1)
        denominator = sq_norms + sq_norms.transpose(1, 2)           # (N, M, M)
        off_diagonal = 1 - torch.eye(self.num_masks, device=masks.device).unsqueeze(0)
        dice = numerator / (denominator + 1e-6) * off_diagonal      # drop self-similarity
        return dice.sum() / masks.size(0)

    def forward(self, feat_t, feat_s, task_loss):
        """
        Args:
            feat_t (torch.Tensor): Teacher feature map (N, C_t, H, W).
            feat_s (torch.Tensor): Student feature map (N, C_s, H, W).
            task_loss (torch.Tensor): Task loss (e.g., detection/segmentation loss).
        Returns:
            torch.Tensor: The total combined loss.
        """
        # 1. Align student features with the teacher's channel dimension
        feat_s_aligned = self.align_layer(feat_s)
        # 2. Fuse features using STCA-FF and generate adaptive masks using ASCM
        fused_features = self.stcaff(feat_t, feat_s_aligned)
        channel_masks, spatial_masks = self.ascm(fused_features)
        diff = (feat_t - feat_s_aligned).unsqueeze(1)                     # (N, 1, C, H, W)
        # 3. Channel-wise distillation loss: masks broadcast over H and W;
        #    .mean() divides the squared sum by N * M * C * H * W
        loss_distill_c = (channel_masks[..., None, None] * diff).pow(2).mean()
        # 4. Spatial distillation loss: masks broadcast over C
        loss_distill_s = (spatial_masks.unsqueeze(2) * diff).pow(2).mean()
        # 5. Mask diversity loss for both mask types
        loss_div = self._dice_loss(channel_masks) + self._dice_loss(spatial_masks)
        # 6. Overall loss: L_task + alpha * (L_distill^c + L_distill^s) + lambda * L_div
        return task_loss + self.alpha * (loss_distill_c + loss_distill_s) + self.lambda_div * loss_div

# ----------------------------------------------------------------------------
# Example Usage
# ----------------------------------------------------------------------------
if __name__ == '__main__':
    # --- Configuration ---
    BATCH_SIZE = 2
    IMG_HEIGHT, IMG_WIDTH = 224, 224
    # Features come from the last conv block (layer4) of each ResNet;
    # both ResNet-101 and ResNet-50 output 2048 channels there.
    TEACHER_CHANNELS = 2048
    STUDENT_CHANNELS = 2048
    # ACAM-KD hyperparameters
    NUM_MASKS = 6  # M = 6 for detection
    ALPHA = 1.0    # weight of the distillation loss
    LAMBDA = 1.0   # weight of the diversity loss

    # --- Models and Data ---
    # Teacher: ResNet-101, student: ResNet-50 (ImageNet weights are downloaded on first use)
    teacher_backbone = models.resnet101(weights=models.ResNet101_Weights.DEFAULT)
    student_backbone = models.resnet50(weights=models.ResNet50_Weights.DEFAULT)
    # Drop the final avgpool and fc layers to keep the layer4 feature map
    teacher_model = nn.Sequential(*list(teacher_backbone.children())[:-2]).eval()
    teacher_model.requires_grad_(False)  # the teacher is frozen
    student_model = nn.Sequential(*list(student_backbone.children())[:-2]).train()

    input_image = torch.randn(BATCH_SIZE, 3, IMG_HEIGHT, IMG_WIDTH)  # mock input batch

    # --- Forward Pass ---
    print("Extracting features from teacher and student models...")
    with torch.no_grad():  # only the teacher runs without gradients
        teacher_features = teacher_model(input_image)
    student_features = student_model(input_image)  # keeps the graph for backprop

    # Mock task loss; in real training this comes from the detection/segmentation head
    mock_task_loss = torch.tensor(0.5, requires_grad=True)

    # --- ACAM-KD Loss Calculation ---
    acam_kd_loss_fn = ACAMKDLoss(
        in_channels_t=TEACHER_CHANNELS,
        in_channels_s=STUDENT_CHANNELS,
        num_masks=NUM_MASKS,
        alpha=ALPHA,
        lambda_div=LAMBDA,
    )
    print("Calculating ACAM-KD total loss...")
    total_loss = acam_kd_loss_fn(teacher_features, student_features, mock_task_loss)

    # --- Backpropagation (Example) ---
    # In a real training loop, update the student together with the learnable
    # ACAM-KD modules (STCA-FF, ASCM, align layer):
    # optimizer = torch.optim.SGD(
    #     list(student_model.parameters()) + list(acam_kd_loss_fn.parameters()), lr=0.01)
    # optimizer.zero_grad()
    # total_loss.backward()
    # optimizer.step()

    # --- Print Results ---
    print("\n--- ACAM-KD Example with ResNet Models ---")
    print(f"Input Image Shape: {input_image.shape}")
    print(f"Teacher Features Shape: {teacher_features.shape}")
    print(f"Student Features Shape: {student_features.shape}")
    print("-" * 40)
    print(f"Mock Task Loss: {mock_task_loss.item():.4f}")
    print(f"Total Combined Loss: {total_loss.item():.4f}")
    print("-" * 40)
    print("Code executed successfully. You can now integrate this into your training pipeline.")
```
