Med-CTX: Revolutionizing Breast Cancer Ultrasound Segmentation with Multimodal Transformers

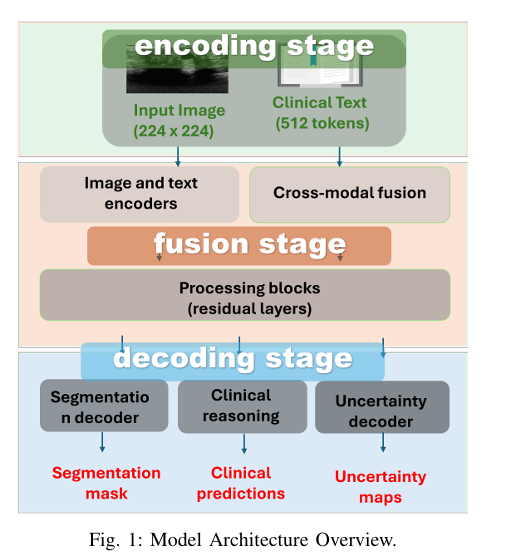

Breast cancer remains one of the most prevalent cancers worldwide, and early, accurate diagnosis is crucial for effective treatment. Medical imaging, particularly ultrasound, plays a vital role in lesion detection and characterization. However, despite advances in artificial intelligence (AI), many deep learning models used for breast cancer ultrasound segmentation still function as “black boxes,” […]