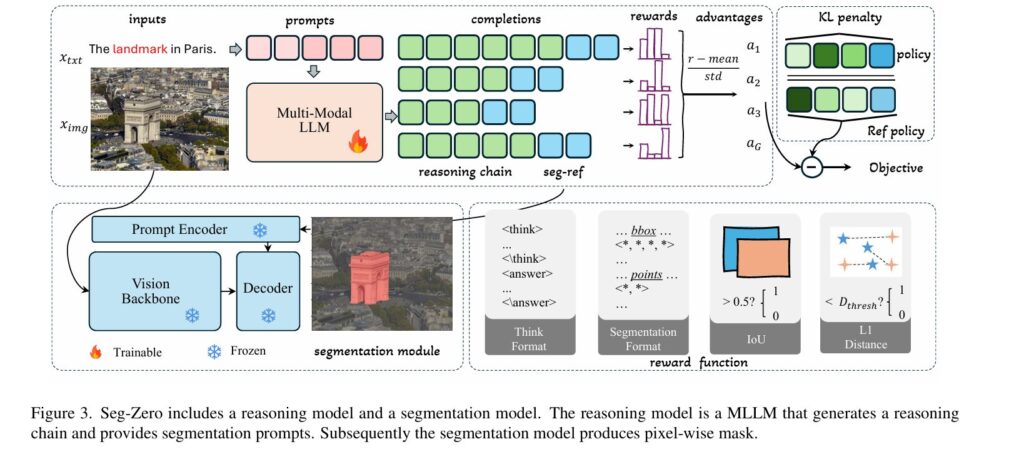

How AI Is Learning to Think Before It Segments: Understanding Seg-Zero’s Reasoning-Driven Image Analysis

Introduction

Imagine an AI system that doesn’t just identify objects in images but reasons through the problem step by step before producing a final answer, much as a human would approach a complex visual problem. This is what researchers at CUHK, HKUST, and RUC have built with Seg-Zero, a framework that fundamentally reimagines how […]