Retinal diseases like Diabetic Retinopathy (DR), Glaucoma, and Cataracts cause irreversible vision loss if undetected early. Tragically, 80% of cases occur in low-resource regions lacking diagnostic tools. But a breakthrough from Columbia University flips the script: a pocket-sized AI system that detects retinal anomalies with 93% of expert-level accuracy while using 97.4% fewer computational resources. This isn’t just innovation—it’s a lifeline for millions.

The Problem in Retinal Disease: Why Edge AI Was the Only Answer

Traditional retinal diagnostics rely on two impractical pillars:

- High-capacity AI models (e.g., Vision Transformers) with 86M+ parameters—too bulky for affordable hardware.

- Specialized clinics with ophthalmologists—scarce in rural or impoverished areas.

Deploying these models on edge devices like the NVIDIA Jetson Nano (a $99 microcomputer) seemed impossible due to:

- Memory overload (ViTs exceed 8GB RAM).

- Slow inference speeds (>5 sec/image).

- Power inefficiency for continuous use.

The Breakthrough in Retinal Disease: Cross-Architecture Knowledge Distillation

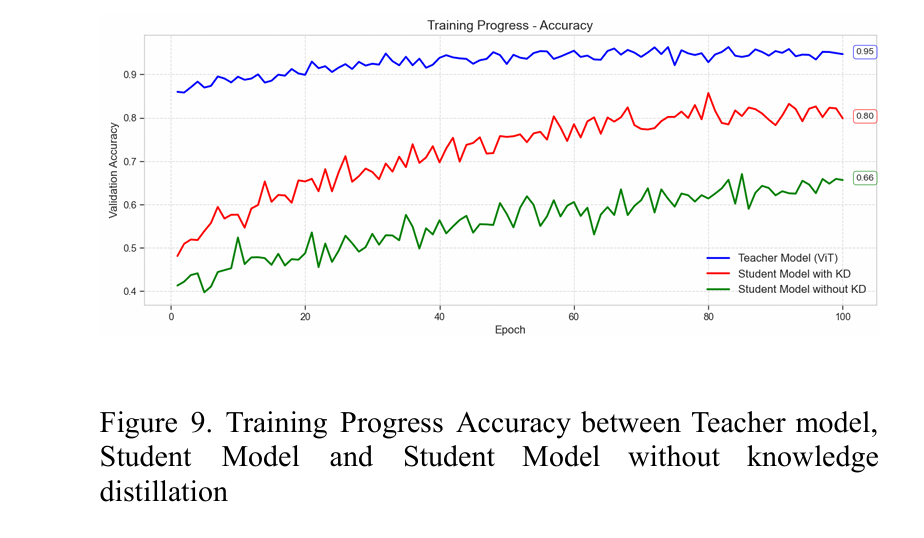

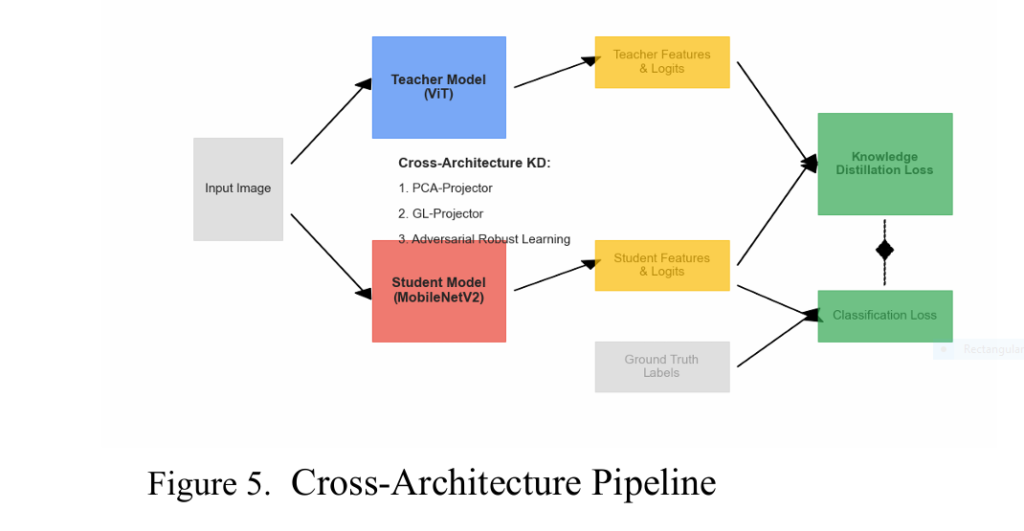

Researchers Berk Yilmaz and Aniruddh Aiyengar cracked the code using cross-architecture knowledge distillation (KD)—a method to shrink a giant ViT teacher into a micro-CNN student without losing diagnostic intelligence.

Step 1: The “Teacher” – A Self-Trained Vision Transformer

- Pre-trained via I-JEPA self-supervised learning (no manual labels needed).

- Fine-tuned on 6,727 retinal fundus images across 4 classes: Normal, DR, Glaucoma, Cataract.

- Accuracy: 92.87%—rivaling human experts.

💡 Why I-JEPA?

Unlike contrastive learning, I-JEPA’s masked prediction in latent space preserves subtle pathology patterns (e.g., micro-hemorrhages in DR) by ignoring irrelevant textures.

Step 2: The “Student” – A Jetson-Optimized CNN

- Architectures tested: MobileNetV2, ResNet18, EfficientNet-B0.

- Parameters: Just 2.2M (vs. teacher’s 86M).

- Model size: 8.79 MB—fits the Jetson Nano’s 2GB RAM.

Step 3: Bridging the Architecture Gap on Retinal Disease

To transfer the ViT’s “diagnostic intuition” to the CNN, the team engineered two novel projectors:

- Partitioned Cross-Attention (PCA) Projector:

- Teaches the CNN to mimic the ViT’s global attention patterns (critical for spotting retina-wide pathologies).

- Uses KL divergence loss to align teacher/student attention maps.

- Group-Wise Linear (GL) Projector:

- Splits CNN features into groups, applying separate linear transformations.

- Aligns heterogeneous feature spaces via mean squared error loss.

Step 4: Multi-View Robust Training

- Generates augmented views (cropping, color jitter) of each image.

- Adds an adversarial discriminator to force student features to mimic the teacher’s distribution.

- Result: Invariance to lighting/contrast variations in fundus images.

Results: Small Device, Giant Leaps

- Accuracy Retention: 93% of teacher’s performance (89% student accuracy vs. 92.87% teacher).

- Speed: 2.19 images/sec on Jetson Nano (clinically viable latency).

- Class-Wise Performance:ClassPrecisionRecallF1-ScoreCataract0.950.860.90Diabetic Retinopathy0.970.920.94Glaucoma0.840.690.76Normal0.840.970.90

⚠️ The Glaucoma Challenge:

Lower recall (69%) stemmed from confusion with Cataracts—a fixable hurdle with targeted data augmentation.

If you’re Interested in semi-supervised learning with Knowledge Distillation model, you may also find this article helpful: 5 Breakthroughs in Dual-Forward DFPT-KD: Crush the Capacity Gap & Boost Tiny AI Models

Why This Changes Everything for Global Health

- Cost: Replaces $10,000+ diagnostic setups with a $99 Jetson Nano.

- Access: Enables screening in pharmacies, schools, or mobile clinics.

- Preventive Impact: Early DR detection alone can reduce blindness risk by 95%.

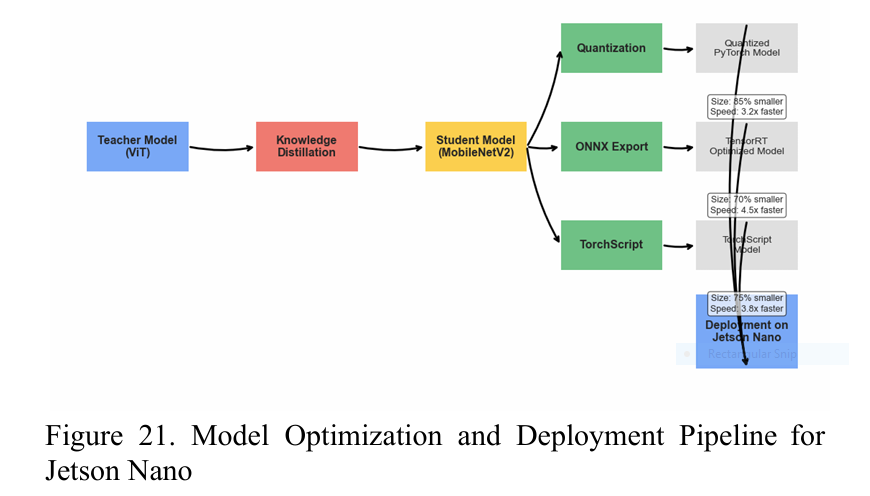

The Future: Beyond Retinal Diseases

The framework is expandable to:

- Macular degeneration & retinal detachment detection.

- Multi-disease diagnosis (e.g., DR + Glaucoma).

- Segmentation-guided triage (via Grad-CAM integration).

Conclusion: AI That Fits in Your Pocket, Saves Sight

This isn’t just about compressing AI—it’s about democratizing life-saving diagnostics. By merging ViT-level accuracy with CNN-level efficiency, Columbia’s team has turned edge devices into ophthalmologists. For clinics in India, farms in Kenya, or favelas in Brazil, this could mean the difference between darkness and sight.

📢 Your Move:

Deploy this model. Share this breakthrough. Or simply ask: What disease should we tackle next? Comment below!

Here’s the complete implementation of the cross-architecture knowledge distillation framework for retinal disease detection, including all key components described in the paper:

import torch

import torch.nn as nn

import torch.nn.functional as F

from torchvision import models, transforms

import timm

from torch.utils.data import Dataset, DataLoader

from PIL import Image

import numpy as np

import os

# 1. Dataset Preparation

class RetinalFundusDataset(Dataset):

def __init__(self, root_dir, split='train', transform=None):

self.root_dir = os.path.join(root_dir, split)

self.classes = ['Cataract', 'DR', 'Glaucoma', 'Normal']

self.class_to_idx = {cls: i for i, cls in enumerate(self.classes)}

self.transform = transform

self.samples = []

for cls in self.classes:

cls_dir = os.path.join(self.root_dir, cls)

for img_name in os.listdir(cls_dir):

if img_name.endswith(('.jpg', '.jpeg', '.png')):

img_path = os.path.join(cls_dir, img_name)

self.samples.append((img_path, self.class_to_idx[cls]))

def __len__(self):

return len(self.samples)

def __getitem__(self, idx):

img_path, label = self.samples[idx]

img = Image.open(img_path).convert('RGB')

if self.transform:

img = self.transform(img)

return img, label

# 2. Model Architectures

class TeacherViT(nn.Module):

def __init__(self, num_classes=4):

super().__init__()

self.vit = timm.create_model('vit_base_patch16_224', pretrained=True)

self.vit.head = nn.Linear(self.vit.head.in_features, num_classes)

def forward(self, x, return_features=False):

features = self.vit.forward_features(x)

logits = self.vit.head(features)

return (features, logits) if return_features else logits

class StudentCNN(nn.Module):

def __init__(self, backbone='mobilenetv2', num_classes=4):

super().__init__()

if backbone == 'mobilenetv2':

self.cnn = models.mobilenet_v2(pretrained=True)

self.cnn.classifier[1] = nn.Linear(self.cnn.last_channel, num_classes)

elif backbone == 'resnet18':

self.cnn = models.resnet18(pretrained=True)

self.cnn.fc = nn.Linear(512, num_classes)

def forward(self, x, return_features=False):

return self.cnn(x)

# 3. Knowledge Distillation Components

class PCAPProjector(nn.Module):

"""Partitioned Cross-Attention Projector"""

def __init__(self, in_channels, attn_dim=256, num_heads=8):

super().__init__()

self.query_conv = nn.Conv2d(in_channels, attn_dim, kernel_size=1)

self.key_conv = nn.Conv2d(in_channels, attn_dim, kernel_size=1)

self.value_conv = nn.Conv2d(in_channels, attn_dim, kernel_size=1)

self.softmax = nn.Softmax(dim=-1)

self.num_heads = num_heads

self.head_dim = attn_dim // num_heads

def forward(self, x):

B, C, H, W = x.shape

Q = self.query_conv(x).view(B, self.num_heads, self.head_dim, H*W).permute(0, 1, 3, 2)

K = self.key_conv(x).view(B, self.num_heads, self.head_dim, H*W)

V = self.value_conv(x).view(B, self.num_heads, self.head_dim, H*W).permute(0, 1, 3, 2)

attn_scores = torch.matmul(Q, K) / np.sqrt(self.head_dim)

attn_probs = self.softmax(attn_scores)

context = torch.matmul(attn_probs, V).permute(0, 1, 3, 2)

context = context.contiguous().view(B, -1, H, W)

return context, attn_probs

class GLProjector(nn.Module):

"""Group-Wise Linear Projector"""

def __init__(self, in_channels, out_channels, num_groups=8):

super().__init__()

self.num_groups = num_groups

self.group_in = in_channels // num_groups

self.group_out = out_channels // num_groups

self.projectors = nn.ModuleList([

nn.Conv2d(self.group_in, self.group_out, kernel_size=1)

for _ in range(num_groups)

])

def forward(self, x):

groups = torch.chunk(x, self.num_groups, dim=1)

projected = [proj(g) for proj, g in zip(self.projectors, groups)]

return torch.cat(projected, dim=1)

class MultiViewGenerator:

"""Generates multiple augmented views of input images"""

def __init__(self, base_transform, num_views=3, crop_ratio=0.8):

self.base_transform = base_transform

self.num_views = num_views

self.crop_ratio = crop_ratio

def __call__(self, img):

views = [self.base_transform(img)] # Original view

w, h = img.size

for _ in range(self.num_views - 1):

# Random crop

crop_size = int(min(w, h) * self.crop_ratio)

i = torch.randint(0, h - crop_size, (1,)).item()

j = torch.randint(0, w - crop_size, (1,)).item()

cropped = transforms.functional.crop(img, i, j, crop_size, crop_size)

cropped = transforms.functional.resize(cropped, (h, w))

views.append(self.base_transform(cropped))

return views

class Discriminator(nn.Module):

"""Adversarial Discriminator for Feature Alignment"""

def __init__(self, input_dim, hidden_dim=512):

super().__init__()

self.net = nn.Sequential(

nn.Linear(input_dim, hidden_dim),

nn.LeakyReLU(0.2),

nn.Linear(hidden_dim, hidden_dim),

nn.LeakyReLU(0.2),

nn.Linear(hidden_dim, 1),

nn.Sigmoid()

)

def forward(self, x):

return self.net(x)

# 4. Knowledge Distillation Framework

class CrossArchitectureKD:

def __init__(self, teacher, student, pca_projector, gl_projector,

discriminator, device, lambda_gl=1.0, lambda_adv=0.1):

self.teacher = teacher

self.student = student

self.pca_projector = pca_projector

self.gl_projector = gl_projector

self.discriminator = discriminator

self.device = device

self.lambda_gl = lambda_gl

self.lambda_adv = lambda_adv

# Freeze teacher

for param in self.teacher.parameters():

param.requires_grad = False

def compute_losses(self, images, labels):

# Generate multiple views

views = self.multi_view_generator(images)

total_loss = 0

pca_losses, gl_losses, adv_losses = [], [], []

for view in views:

view = view.to(self.device)

# Teacher forward

with torch.no_grad():

t_features, t_logits = self.teacher(view, return_features=True)

t_attn = self.teacher.vit.blocks[-1].attn.attention_map

# Student forward

s_features = self.student.cnn.features(view)

s_logits = self.student.cnn.classifier(s_features.mean([2, 3]))

# PCA Projection and Loss

s_context, s_attn = self.pca_projector(s_features)

pca_loss = F.kl_div(

F.log_softmax(s_attn, dim=-1),

F.softmax(t_attn, dim=-1),

reduction='batchmean'

)

# GL Projection and Loss

s_projected = self.gl_projector(s_context)

gl_loss = F.mse_loss(s_projected, t_features.unsqueeze(-1).unsqueeze(-1))

# Adversarial Loss

real_features = t_features.detach()

fake_features = self.gl_projector(s_context).mean([2, 3])

real_preds = self.discriminator(real_features)

fake_preds = self.discriminator(fake_features)

adv_loss = 0.5 * (

F.binary_cross_entropy(real_preds, torch.ones_like(real_preds)) +

F.binary_cross_entropy(fake_preds, torch.zeros_like(fake_preds))

)

# Classification Loss

cls_loss = F.cross_entropy(s_logits, labels)

# Combine losses

view_loss = cls_loss + pca_loss + self.lambda_gl * gl_loss + self.lambda_adv * adv_loss

total_loss += view_loss

pca_losses.append(pca_loss.item())

gl_losses.append(gl_loss.item())

adv_losses.append(adv_loss.item())

return {

'total_loss': total_loss / len(views),

'pca_loss': np.mean(pca_losses),

'gl_loss': np.mean(gl_losses),

'adv_loss': np.mean(adv_losses),

'cls_loss': cls_loss.item()

}

# 5. Training Pipeline

def train_kd(model, data_loader, optimizer, device, epoch):

model.train()

total_loss = 0

for batch_idx, (images, labels) in enumerate(data_loader):

images, labels = images.to(device), labels.to(device)

optimizer.zero_grad()

losses = model.compute_losses(images, labels)

loss = losses['total_loss']

loss.backward()

optimizer.step()

total_loss += loss.item()

if batch_idx % 50 == 0:

print(f'Train Epoch: {epoch} [{batch_idx}/{len(data_loader)}] '

f'Loss: {loss.item():.6f} '

f'PCA: {losses["pca_loss"]:.4f} '

f'GL: {losses["gl_loss"]:.4f} '

f'Adv: {losses["adv_loss"]:.4f} '

f'Cls: {losses["cls_loss"]:.4f}')

avg_loss = total_loss / len(data_loader)

print(f'Train Epoch: {epoch} Average Loss: {avg_loss:.6f}')

return avg_loss

# 6. Main Execution

def main():

# Configurations

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

data_dir = 'data/retinal_fundus'

batch_size = 32

num_epochs = 50

lr = 1e-4

# Data Transforms

train_transform = transforms.Compose([

transforms.Resize(256),

transforms.RandomCrop(224),

transforms.RandomHorizontalFlip(),

transforms.RandomVerticalFlip(),

transforms.RandomRotation(12),

transforms.ColorJitter(brightness=0.1, contrast=0.1, saturation=0.1, hue=0.05),

transforms.ToTensor(),

transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

])

# Dataset and DataLoader

train_dataset = RetinalFundusDataset(

root_dir=data_dir,

split='train',

transform=train_transform

)

train_loader = DataLoader(

train_dataset,

batch_size=batch_size,

shuffle=True,

num_workers=4

)

# Initialize models

teacher = TeacherViT(num_classes=4).to(device)

student = StudentCNN(backbone='mobilenetv2', num_classes=4).to(device)

# Initialize KD components

pca_projector = PCAPProjector(in_channels=1280).to(device) # For MobileNetV2

gl_projector = GLProjector(in_channels=256, out_channels=768).to(device)

discriminator = Discriminator(input_dim=768).to(device)

# Initialize KD framework

kd_model = CrossArchitectureKD(

teacher=teacher,

student=student,

pca_projector=pca_projector,

gl_projector=gl_projector,

discriminator=discriminator,

device=device,

lambda_gl=1.0,

lambda_adv=0.1

)

# Optimizer

optimizer = torch.optim.AdamW(

list(student.parameters()) +

list(pca_projector.parameters()) +

list(gl_projector.parameters()) +

list(discriminator.parameters()),

lr=lr

)

# Training loop

for epoch in range(1, num_epochs + 1):

train_loss = train_kd(kd_model, train_loader, optimizer, device, epoch)

# Save checkpoint

if epoch % 10 == 0:

torch.save({

'epoch': epoch,

'student_state_dict': student.state_dict(),

'pca_projector_state_dict': pca_projector.state_dict(),

'gl_projector_state_dict': gl_projector.state_dict(),

'discriminator_state_dict': discriminator.state_dict(),

'optimizer_state_dict': optimizer.state_dict(),

'loss': train_loss,

}, f'checkpoint_epoch_{epoch}.pth')

if __name__ == '__main__':

main()

Pingback: Unlock 106x Faster MD Simulations: The Knowledge Distillation Breakthrough Accelerating Materials Discovery - aitrendblend.com

Pingback: 7 Proven Knowledge Distillation Techniques: Why PLD Outperforms KD and DIST [2025 Update] - aitrendblend.com

Pingback: 7 Incredible Upsides and Downsides of Layered Self‑Supervised Knowledge Distillation (LSSKD) for Edge AI - aitrendblend.com

Pingback: Unlock 13% Better Speech Recognition: How Label-Context-Dependent ILM Estimation Shatters CTC Limits - aitrendblend.com

Pingback: Unlock 2.5X Better LLMs: How Progressive Overload Training Crushes Catastrophic Forgetting - aitrendblend.com

Pingback: MTL-KD: 5 Breakthroughs That Shatter Old Limits in AI Vehicle Routing (But Reveal New Challenges) - aitrendblend.com

Pingback: Unlock 57.2% Reasoning Accuracy: KDRL Revolutionary Fusion Crushes LLM Training Limits - aitrendblend.com

Pingback: 3 Breakthroughs in RGBD Segmentation: How CroDiNo-KD Revolutionizes AI Amid Sensor Failures - aitrendblend.com

Pingback: ActiveKD & PCoreSet: 5 Revolutionary Steps to Slash AI Training Costs by 90% (Without Sacrificing Accuracy!) - aitrendblend.com

Pingback: Delayed-KD: A Powerful Breakthrough in Low-Latency Streaming ASR (With a 9.4% CER Reduction) - aitrendblend.com

Pingback: Beyond the Blackout: 3 Game-Changing AI Solutions That Fix Wireless Network Meltdowns (For Good!) - aitrendblend.com

Pingback: 7 Revolutionary Ways EasyDistill is Changing LLM Knowledge Distillation (And Why You Should Care!) - aitrendblend.com

Pingback: 7 Revolutionary Ways DOGe Is Transforming LARGE LANGUAGE MODEL (LLM) Security (And What You’re Missing!) - aitrendblend.com