In the rapidly evolving world of digital health, wearable AI for human activity recognition (HAR) is being hailed as a revolutionary tool—promising to transform elder care, chronic disease management, and rehabilitation. But how much of the hype is real, and how much is overblown?

A groundbreaking 2025 study published in Neurocomputing dives deep into this question, evaluating nine machine learning models across two major HAR datasets: OPPORTUNITY and CogAge. The findings reveal surprising insights—some empowering, others cautionary—about which AI models truly deliver in real-world healthcare settings.

Let’s uncover the 7 shocking truths from this research, separating the good innovations from the bad assumptions and the overhyped architectures.

Truth #1: Simpler AI Models Often Outperform Complex Deep Learning (The Good)

Contrary to popular belief, complex deep learning models aren’t always better.

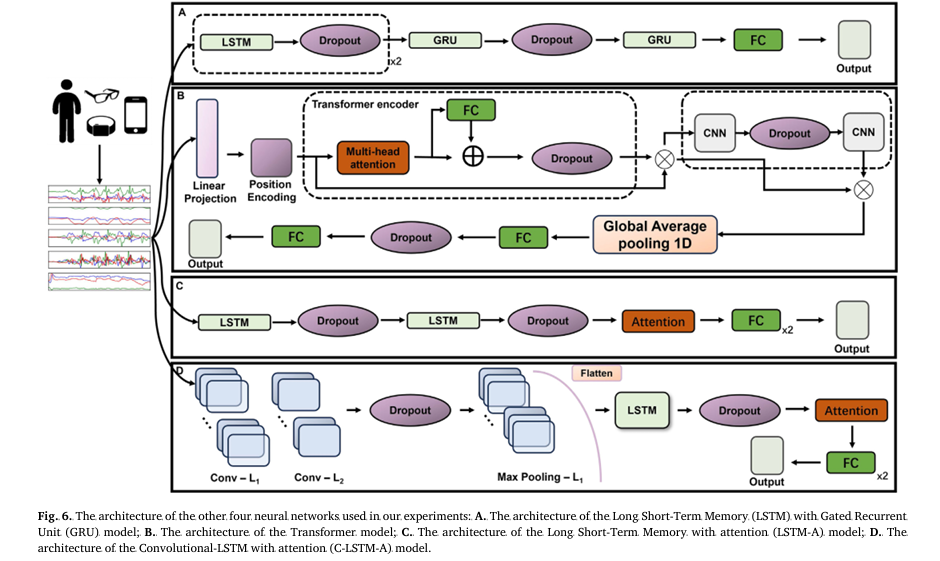

The study compared everything from Support Vector Machines (SVM) with hand-crafted features to advanced Transformers and hybrid LSTM-GRU networks. Surprisingly, simpler models like GRU and LSTM consistently outperformed more complex architectures—especially on behavioral activity recognition.

For example, on the OPPORTUNITY dataset, the GRU model achieved an AF1-Score of 79.91% for behavioral activities, outperforming the Transformer (13.22%) and even the hybrid LSTM-GRU (31.96%) in average F1-score.

✅ The Good: Lightweight models like GRU and LSTM offer high accuracy with lower computational cost—ideal for edge devices in home healthcare.

❌ The Overhyped: Transformers, despite their success in NLP, struggle with small, noisy sensor data and require massive compute—making them impractical for most wearable applications.

| MODEL | BEHAVIORAL AF1-SCORE (OPPORTUNITY) | TRAINING EFFICIENCY |
|---|---|---|
| GRU | 79.91% | High |
| LSTM | 79.64% | High |
| LSTM-GRU | 79.13% | Medium |
| Transformer | 13.22% | Low |

Table: Behavioral activity recognition performance on OPPORTUNITY dataset (AF1-Score). Source: Ciortuz et al., Neurocomputing 650 (2025)

This suggests that “bigger” isn’t always “better” in HAR—especially when real-time, low-power inference is critical.

Truth #2: Data Imbalance Skews AI Performance (The Bad)

One of the biggest pitfalls in HAR research? Ignoring class imbalance.

Many datasets include a “NULL” class—representing all non-target activities—which often dominates the data. Traditional metrics like accuracy can be misleading because a model might achieve high scores simply by predicting “NULL” most of the time.

The study highlights this flaw:

“Accuracy is misleading with imbalanced datasets, as it does not adequately penalize misclassifications of underrepresented classes.”

Instead, the researchers used the Average F1-Score (AF1), which treats all classes equally—revealing the true performance gap.
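To make the gap between accuracy and AF1 concrete, here is a minimal sketch in plain Python. The label counts and the "always predict NULL" model are hypothetical toy values, not figures from the study, and the helper names (`per_class_f1`, `average_f1`, `accuracy`) are made up for illustration.

```python
def per_class_f1(y_true, y_pred, label):
    """F1 for one class, treating `label` as the positive class."""
    tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
    fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
    fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
    if tp == 0:
        return 0.0  # no true positives: count the class as F1 = 0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def average_f1(y_true, y_pred):
    """Unweighted mean of per-class F1, so rare classes count as much as NULL."""
    labels = sorted(set(y_true))
    return sum(per_class_f1(y_true, y_pred, c) for c in labels) / len(labels)


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical toy labels: 90 NULL windows, 5 "drink", 5 "medication".
y_true = ["NULL"] * 90 + ["drink"] * 5 + ["medication"] * 5
y_pred = ["NULL"] * 100  # a degenerate model that always predicts NULL

acc = accuracy(y_true, y_pred)
af1 = average_f1(y_true, y_pred)
print(f"accuracy = {acc:.2f}, AF1 = {af1:.3f}")  # accuracy = 0.90, AF1 = 0.316
```

The model never recognizes a single target activity, yet accuracy still reports 90%. AF1 drops to roughly 0.32 because two of the three classes score zero.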

For instance, the Transformer model’s AF1-Score dropped to 13.22% on behavioral tasks—exposing its inability to recognize rare but critical activities like “drinking” or “taking medication.”

❌ The Bad: Over-reliance on accuracy in imbalanced datasets leads to biased, unreliable models that fail in real-world use.

✅ The Good Fix: Use AF1-Score, MAP, and AUC for evaluation. Better yet—design more balanced datasets like CogAge, which excludes the NULL class and improves fairness.

Truth #3: Multi-Device Fusion Boosts Accuracy (The Good)

Where you place your sensors matters—a lot.

The CogAge dataset uses three consumer-grade devices (wrist, hip, ankle) in typical daily positions, mimicking real-world use. The study found that:

“Training with data from all three devices resulted in the highest AF1-Score for both state and behavioral classification.”

This suggests that multi-device, multi-position sensing captures richer movement patterns than single-point monitoring.

For example:

- Wrist sensor alone: 69.23% AF1 (behavioral)

- All three devices: +10–15% improvement
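One simple way to combine devices is early fusion: concatenate the channels of time-aligned windows so the model sees every device at each timestep. The sketch below assumes this approach; the article does not state how the study itself fuses devices. The function name, channel counts, and values are hypothetical.

```python
def fuse_windows(*device_windows):
    """Concatenate per-timestep channel vectors from several devices.

    Each window is a list of timesteps, and each timestep is a list of
    channel values. Windows must already be resampled and time-aligned.
    """
    lengths = {len(w) for w in device_windows}
    if len(lengths) != 1:
        raise ValueError("all device windows must have the same number of timesteps")
    fused = []
    for steps in zip(*device_windows):
        row = []
        for channels in steps:
            row.extend(channels)
        fused.append(row)
    return fused


# Hypothetical 4-timestep windows with 3 accelerometer channels per device.
wrist = [[0.1 * t, 0.0, 1.0] for t in range(4)]
hip = [[0.0, 0.2 * t, 1.0] for t in range(4)]
ankle = [[0.0, 0.0, 0.3 * t] for t in range(4)]

fused = fuse_windows(wrist, hip, ankle)
print(len(fused), len(fused[0]))  # 4 9
```

The fused window keeps the same number of timesteps and simply widens the feature axis, so it can feed any of the sequence models discussed here without architectural changes.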

✅ The Good: A multi-modal approach—combining data from arms, hips, and feet—significantly improves recognition of complex behaviors like cooking or medication intake.

💡 Pro Tip: For fall detection or mobility tracking, combine hip and ankle sensors to capture gait and balance more accurately.

Truth #4: Feature Engineering Still Beats Deep Learning (Sometimes)

Despite the deep learning boom, hand-crafted features remain powerful.

On the CogAge dataset, the SVM with hand-crafted features achieved 69.23% AF1-Score for behavioral activities—outperforming many deep models.

Why? Because domain knowledge allows engineers to extract meaningful temporal and spectral features—like mean acceleration, jerk, or frequency-domain power—that deep networks must learn from scratch.
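As a rough illustration of the three features named above, the following standard-library sketch computes mean acceleration, RMS jerk, and frequency-band power for one synthetic channel. The sampling rate, window length, and band edges are assumptions chosen for the example; real pipelines would typically use NumPy or SciPy rather than a hand-written DFT.

```python
import cmath
import math


def mean(xs):
    return sum(xs) / len(xs)


def rms_jerk(accel, fs):
    """Root-mean-square of the finite-difference derivative of acceleration."""
    diffs = [(b - a) * fs for a, b in zip(accel, accel[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


def band_power(signal, fs, f_lo, f_hi):
    """Sum of squared DFT magnitudes for bins with frequency in [f_lo, f_hi]."""
    n = len(signal)
    power = 0.0
    for k in range(n // 2 + 1):
        freq = k * fs / n
        if f_lo <= freq <= f_hi:
            coeff = sum(
                x * cmath.exp(-2j * math.pi * k * t / n)
                for t, x in enumerate(signal)
            )
            power += abs(coeff) ** 2
    return power


fs = 50   # sampling rate in Hz (assumed)
n = 100   # a 2-second window (assumed)
# Synthetic channel: constant offset of 1.0 plus a 2 Hz oscillation.
signal = [1.0 + math.sin(2 * math.pi * 2.0 * t / fs) for t in range(n)]

features = {
    "mean": mean(signal),
    "rms_jerk": rms_jerk(signal, fs),
    "power_1_3hz": band_power(signal, fs, 1.0, 3.0),
    "power_5_10hz": band_power(signal, fs, 5.0, 10.0),
}
for name, value in features.items():
    print(f"{name}: {value:.3f}")
```

Almost all of the spectral power lands in the 1 to 3 Hz band, which is exactly the kind of prior knowledge (for example, typical step or arm-swing frequencies) that a feature designer can encode directly and a deep network would otherwise have to learn from data.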

✅ The Good: When data is limited or sensors are sparse, feature engineering + traditional ML (e.g., SVM, Random Forest) can outperform deep learning.

❌ The Overhyped: The assumption that “deep learning automatically learns the best features” fails when training data is small or noisy—common in healthcare.

| APPROACH | BEST USE CASE | COMPUTATIONAL COST | PERFORMANCE (AF1) |
|---|---|---|---|
| Hand-Crafted + SVM | Small datasets, consumer devices | Low | 69.23% |
| CNN/GRU/LSTM | Multi-sensor, high-frequency data | Medium | 79–88% |
| Transformer | Large-scale, clean data | High | <35% |

Table: Model suitability based on data and deployment constraints.

Truth #5: LSTM and GRU Are the Sweet Spot for Wearable AI (The Good)

After testing nine models, the study concludes: LSTM and GRU are the most balanced choices for healthcare HAR.

Here’s why:

- ✅ Temporal modeling: They excel at capturing time-series patterns in sensor data.

- ✅ Moderate complexity: Faster training and inference than Transformers.

- ✅ High accuracy: Achieved over 94% AF1-Score for state activities (sitting, walking, lying) on OPPORTUNITY.

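The AF1-Score is the unweighted mean of the per-class F1-scores, where each F1 is the harmonic mean of precision and recall:

\[ \text{AF1} = \frac{1}{N} \sum_{i=1}^{N} \frac{2 \cdot Precision_i \cdot Recall_i}{Precision_i + Recall_i} \]

Where N is the number of activity classes, and i indexes each class.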

The C-LSTM (Convolutional LSTM) variant also performed well, combining spatial feature extraction (via CNN) with temporal modeling (LSTM):

$$ h_t = \text{LSTM}\big(\text{CNN}(X_t)\big) $$

Where $X_t$ is the sensor input at time $t$, $\text{CNN}(X_t)$ extracts local patterns, and $h_t$ is the final hidden state.
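In practice, a C-LSTM does not see the window as one flat sequence. The window is first split into short sub-sequences, the CNN runs on each one, and the LSTM then reads the resulting sequence of CNN outputs. The sketch below shows only that reshaping step, assuming a 64-timestep window split into 8 sub-sequences of 8 timesteps (the `n_steps=8, n_length=8` defaults used by the script later in this article). The function name and the two-channel toy data are hypothetical.

```python
def split_window(window, n_steps, n_length):
    """Split a (timesteps, channels) window into n_steps blocks of n_length timesteps."""
    if len(window) != n_steps * n_length:
        raise ValueError("window length must equal n_steps * n_length")
    return [window[i * n_length:(i + 1) * n_length] for i in range(n_steps)]


# Hypothetical window: 64 timesteps, 2 channels.
window = [[float(t), float(-t)] for t in range(64)]

blocks = split_window(window, n_steps=8, n_length=8)
print(len(blocks), len(blocks[0]), len(blocks[0][0]))  # 8 8 2
```

Each of the 8 blocks plays the role of one $X_t$ in the equation: the CNN summarizes it, and the LSTM models how those summaries evolve across the window.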

✅ The Good: LSTM-based models offer the best trade-off between accuracy, speed, and generalizability.

❌ The Overhyped: Transformers, while powerful, require self-attention over long sequences, which is computationally expensive and unnecessary for short activity windows.

Truth #6: State vs. Behavioral Activities Need Different Models (The Good)

Not all activities are created equal.

The study distinguishes between:

- State activities: Static postures (e.g., sitting, standing, lying)

- Behavioral activities: Dynamic actions (e.g., drinking, opening a door, taking medication)

Key finding: State activities are easier to classify due to stable sensor patterns.

On OPPORTUNITY:

- State AF1-Score (HC-SVM): 94.73%

- Behavioral AF1-Score (GRU): 79.91%

This gap highlights a critical design principle:

✅ Use simpler models (SVM, CNN) for posture detection.

✅ Use recurrent models (GRU, LSTM) for complex behavioral recognition.

Also, behavioral tasks benefit more from multi-sensor fusion—since they involve coordinated limb movements.

Truth #7: Dataset Size Doesn’t Guarantee Better AI (The Bad)

You’d think more data = better AI. But the study shows otherwise.

The OPPORTUNITY dataset is 600x larger than CogAge for state classification, yet:

- CogAge models generalize better to real-world use.

- OPPORTUNITY’s lab-like setup (145 sensors, controlled environment) doesn’t reflect real-life conditions.

❌ The Bad: High-performing research models on OPPORTUNITY fail in real-world deployment due to overfitting and unrealistic sensor placement.

✅ The Good: CogAge, with fewer sensors and natural usage patterns, offers better generalizability for healthcare apps.

This exposes a major gap:

🔺 Research-grade datasets ≠ Real-world performance

Final Verdict: What’s the Best Model for Healthcare HAR?

Based on the study, here’s our recommendation matrix:

| USE CASE | RECOMMENDED MODEL | WHY? |
|---|---|---|
| Fall detection / posture monitoring | SVM + hand-crafted features | Fast, accurate, low power |
| Medication intake / ADL tracking | GRU or LSTM | Handles complex sequences |
| Multi-device home monitoring | C-LSTM or LSTM-GRU | Fuses data across sensors |
| Edge-device deployment | Shallow LSTM | Half the training time of deep models |
| Research with rich sensor data | C-LSTM-A (with attention) | Highest AF1: 87.78% |

The C-LSTM-A model achieved the highest AF1-Score of 87.78% on behavioral tasks—thanks to its attention mechanism that focuses on relevant time steps.

\[ \alpha_t = \frac{\exp(\text{score}(h_t, h_{\text{context}}))}{\sum_{t'} \exp(\text{score}(h_{t'}, h_{\text{context}}))} \]

\[ \text{Output} = \sum_{t} \alpha_{t} \cdot h_{t} \]

Where \(\alpha_t\) is the attention weight at time \(t\), \(h_t\) is the hidden state at time \(t\), and \(h_{\text{context}}\) is a context vector. The weights let the model focus on critical moments, like the hand reaching for a pill bottle.
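The two attention equations can be traced by hand with a few lines of plain Python. This is a sketch under one stated assumption: the score function is taken to be a dot product between each hidden state and the context vector, which the article does not specify. The hidden states and context values are made-up toy numbers.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(hidden_states, context):
    """Return (weights, output) for dot-product attention over time steps."""
    # Assumed score function: dot product of each hidden state with the context.
    scores = [sum(h_d * c_d for h_d, c_d in zip(h, context)) for h in hidden_states]
    weights = softmax(scores)
    dim = len(context)
    output = [
        sum(w * h[d] for w, h in zip(weights, hidden_states))
        for d in range(dim)
    ]
    return weights, output


# Toy hidden states for 4 time steps (2 units each); the last step is the salient one.
hidden_states = [[0.1, 0.0], [0.2, 0.1], [0.1, 0.3], [2.0, 0.5]]
context = [1.0, 0.0]

weights, pooled = attention_pool(hidden_states, context)
print([round(w, 3) for w in weights])
print([round(v, 3) for v in pooled])
```

The last time step receives the largest weight, so the pooled vector is dominated by its hidden state. That is the mechanism by which an attention layer can emphasize a brief, informative moment inside a longer window.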

Call to Action: Build Smarter, Not Harder

The future of wearable AI in healthcare isn’t about bigger models—it’s about smarter design.

Here’s what you can do today:

- Stop chasing SOTA scores on unrealistic datasets.

- Use AF1-Score, not accuracy, to evaluate real-world performance.

- Start with GRU or LSTM—they’re proven, efficient, and effective.

- Fuse data from multiple body locations for better behavioral insight.

- Prioritize balanced, real-world datasets like CogAge over lab-heavy ones.


Conclusion: The Good, The Bad, and The Future

This study shatters several myths in wearable AI:

- ✅ The Good: GRU, LSTM, and hand-crafted SVMs deliver real value.

- ❌ The Bad: Imbalanced data and misleading metrics hide model flaws.

- 🚫 The Overhyped: Transformers and massive models are often impractical.

The key takeaway?

Success in healthcare HAR comes not from complexity, but from thoughtful design—choosing the right model for the right task, with the right data.

As wearable tech moves from labs to living rooms, let’s build systems that are accurate, fair, and efficient—not just flashy.

The following end-to-end Python script sketches the models and methodology described in the research paper “Machine learning models for wearable-based human activity recognition: A comparative study.” It runs on random placeholder data, so the printed metrics are meaningless until the real datasets are loaded.

```python
# main.py
# Author: Gemini
# Date: August 1, 2025
# Description: This script implements the models and methodologies from the paper
# "Machine learning models for wearable-based human activity recognition: A comparative study"
# for recognizing human activities from wearable sensor data.

import numpy as np
import tensorflow as tf
from tensorflow.keras.models import Model
from tensorflow.keras.layers import (
    Input, Dense, Conv1D, MaxPooling1D, Flatten, LSTM, GRU, Dropout, Add,
    LayerNormalization, MultiHeadAttention, GlobalAveragePooling1D, TimeDistributed,
    Activation, RepeatVector, Permute, Multiply, Lambda,
)
from tensorflow.keras.optimizers import Adam
from sklearn.svm import SVC
from sklearn.metrics import f1_score, accuracy_score, average_precision_score, roc_auc_score
from sklearn.preprocessing import StandardScaler, MinMaxScaler
from scipy.stats import skew, kurtosis
from scipy.fft import fft


# --- Model Definitions ---

class HARModels:
    """
    This class encapsulates the deep learning models for Human Activity Recognition
    as described in the research paper. The ninth model, the SVM with hand-crafted
    features, is built in main().
    """

    def __init__(self, num_classes, timesteps, num_features):
        self.num_classes = num_classes
        self.timesteps = timesteps
        self.num_features = num_features

    def _build_base_model(self, model_name, layers):
        """
        Helper function to build a generic sequential model.
        `layers` is a callable that maps the input tensor to the last hidden tensor.
        """
        input_layer = Input(shape=(self.timesteps, self.num_features))
        x = layers(input_layer)
        output_layer = Dense(self.num_classes, activation='softmax')(x)
        model = Model(inputs=input_layer, outputs=output_layer, name=model_name)
        return model

    @staticmethod
    def _attention_pool(lstm_out, units=600):
        """
        Collapses (batch, time, units) to (batch, units) using learned
        softmax weights over the time axis.
        """
        attention = Dense(1, activation='tanh')(lstm_out)   # (batch, time, 1)
        attention = Flatten()(attention)                     # (batch, time)
        attention = Activation('softmax')(attention)         # weights sum to 1 over time
        attention = RepeatVector(units)(attention)           # (batch, units, time)
        attention = Permute([2, 1])(attention)               # (batch, time, units)
        weighted = Multiply()([lstm_out, attention])
        # Weighted sum over the time axis.
        return Lambda(lambda xin: tf.reduce_sum(xin, axis=-2), output_shape=(units,))(weighted)

    def get_cnn_model(self):
        """
        Builds the Convolutional Neural Network (CNN) model.
        """
        input_layer = Input(shape=(self.timesteps, self.num_features))
        # padding='same' keeps the sequence long enough for the large kernels;
        # with the default 'valid' padding a 64-step window shrinks below the
        # second kernel size and the model cannot be built.
        x = Conv1D(filters=10, kernel_size=45, activation='relu', padding='same')(input_layer)
        x = MaxPooling1D(pool_size=2)(x)
        x = Conv1D(filters=10, kernel_size=49, activation='relu', padding='same')(x)
        x = MaxPooling1D(pool_size=2)(x)
        x = Conv1D(filters=10, kernel_size=46, activation='relu', padding='same')(x)
        x = MaxPooling1D(pool_size=2)(x)
        x = Flatten()(x)
        x = Dense(2000, activation='relu')(x)
        output_layer = Dense(self.num_classes, activation='softmax')(x)
        return Model(inputs=input_layer, outputs=output_layer, name='CNN')

    def get_gru_model(self):
        """
        Builds the Gated Recurrent Unit (GRU) model.
        """
        input_layer = Input(shape=(self.timesteps, self.num_features))
        x = GRU(600, return_sequences=True)(input_layer)
        x = Dropout(0.2)(x)
        x = GRU(600)(x)
        x = Dense(512, activation='relu')(x)
        output_layer = Dense(self.num_classes, activation='softmax')(x)
        return Model(inputs=input_layer, outputs=output_layer, name='GRU')

    def get_lstm_model(self):
        """
        Builds the Long Short-Term Memory (LSTM) model.
        """
        input_layer = Input(shape=(self.timesteps, self.num_features))
        x = LSTM(600, return_sequences=True)(input_layer)
        x = Dropout(0.2)(x)
        x = LSTM(600)(x)
        x = Dense(512, activation='relu')(x)
        output_layer = Dense(self.num_classes, activation='softmax')(x)
        return Model(inputs=input_layer, outputs=output_layer, name='LSTM')

    def get_c_lstm_model(self, n_steps=8, n_length=8):
        """
        Builds the Hybrid Convolutional and LSTM (C-LSTM) model.
        Expects input reshaped to (n_steps, n_length, num_features),
        where n_steps * n_length equals the window length.
        """
        input_layer = Input(shape=(n_steps, n_length, self.num_features))
        cnn = TimeDistributed(Conv1D(filters=64, kernel_size=3, activation='relu'))(input_layer)
        cnn = TimeDistributed(Dropout(0.5))(cnn)
        cnn = TimeDistributed(MaxPooling1D(pool_size=2))(cnn)
        cnn = TimeDistributed(Flatten())(cnn)
        x = LSTM(600, return_sequences=True)(cnn)
        x = Dropout(0.2)(x)
        x = LSTM(600)(x)
        x = Dense(512, activation='relu')(x)
        output_layer = Dense(self.num_classes, activation='softmax')(x)
        return Model(inputs=input_layer, outputs=output_layer, name='C-LSTM')

    def get_lstm_gru_model(self):
        """
        Builds the Hybrid LSTM and GRU (LSTM-GRU) model.
        """
        input_layer = Input(shape=(self.timesteps, self.num_features))
        x = LSTM(600, return_sequences=True)(input_layer)
        x = Dropout(0.2)(x)
        x = GRU(600, return_sequences=True)(x)
        x = Dropout(0.2)(x)
        x = GRU(600)(x)
        x = Dense(200, activation='relu')(x)
        output_layer = Dense(self.num_classes, activation='softmax')(x)
        return Model(inputs=input_layer, outputs=output_layer, name='LSTM-GRU')

    def get_transformer_model(self):
        """
        Builds the Transformer model.
        """
        input_layer = Input(shape=(self.timesteps, self.num_features))
        # Transformer Encoder Block
        x = MultiHeadAttention(num_heads=1, key_dim=50)(input_layer, input_layer)
        x = Add()([input_layer, x])
        x = LayerNormalization(epsilon=1e-6)(x)
        ff_out = Dense(128, activation='relu')(x)
        ff_out = Dropout(0.25)(ff_out)
        # Project back to the input width so the residual Add() shapes match.
        ff_out = Dense(self.num_features)(ff_out)
        x = Add()([x, ff_out])
        x = LayerNormalization(epsilon=1e-6)(x)
        x = GlobalAveragePooling1D()(x)
        x = Dense(128, activation='relu')(x)
        x = Dropout(0.4)(x)
        output_layer = Dense(self.num_classes, activation='softmax')(x)
        return Model(inputs=input_layer, outputs=output_layer, name='Transformer')

    def get_lstm_a_model(self):
        """
        Builds the LSTM with Attention (LSTM-A) model.
        """
        input_layer = Input(shape=(self.timesteps, self.num_features))
        lstm_out = LSTM(600, return_sequences=True)(input_layer)
        lstm_out = Dropout(0.2)(lstm_out)
        # Attention Layer
        sent_representation = self._attention_pool(lstm_out, units=600)
        x = Dense(200, activation='relu')(sent_representation)
        output_layer = Dense(self.num_classes, activation='softmax')(x)
        return Model(inputs=input_layer, outputs=output_layer, name='LSTM-A')

    def get_c_lstm_a_model(self, n_steps=8, n_length=8):
        """
        Builds the Hybrid C-LSTM with Attention (C-LSTM-A) model.
        Expects input reshaped to (n_steps, n_length, num_features).
        """
        # n_steps must be fixed: the attention layer flattens the time axis.
        input_layer = Input(shape=(n_steps, n_length, self.num_features))
        cnn = TimeDistributed(Conv1D(filters=64, kernel_size=3, activation='relu'))(input_layer)
        cnn = TimeDistributed(Dropout(0.5))(cnn)
        cnn = TimeDistributed(MaxPooling1D(pool_size=2))(cnn)
        cnn = TimeDistributed(Flatten())(cnn)
        lstm_out = LSTM(600, return_sequences=True)(cnn)
        lstm_out = Dropout(0.2)(lstm_out)
        # Attention Layer
        sent_representation = self._attention_pool(lstm_out, units=600)
        x = Dense(512, activation='relu')(sent_representation)
        output_layer = Dense(self.num_classes, activation='softmax')(x)
        return Model(inputs=input_layer, outputs=output_layer, name='C-LSTM-A')


# --- Data Preprocessing and Feature Engineering ---

class DataProcessor:
    """
    Handles data loading, preprocessing, and feature engineering.
    """

    def __init__(self, timesteps=64, stride=3):
        self.timesteps = timesteps
        self.stride = stride

    def z_score_normalize(self, data):
        """
        Applies Z-score normalization per channel to 2D data (samples, channels).
        """
        scaler = StandardScaler()
        return scaler.fit_transform(data)

    def segment_data(self, data, labels):
        """
        Segments a continuous recording using a sliding window approach.
        """
        segments = []
        segment_labels = []
        # The +1 keeps the final full window.
        for i in range(0, len(data) - self.timesteps + 1, self.stride):
            segments.append(data[i:i + self.timesteps])
            # Use the label of the last timestep in the window
            segment_labels.append(labels[i + self.timesteps - 1])
        return np.array(segments), np.array(segment_labels)

    def extract_hand_crafted_features(self, data_segments):
        """
        Extracts 14 hand-crafted features per channel from each data segment.
        """
        features = []
        for segment in data_segments:
            segment_features = []
            for i in range(segment.shape[1]):  # Iterate over each feature/sensor channel
                channel = segment[:, i]
                # Statistical features
                segment_features.append(np.min(channel))
                segment_features.append(np.max(channel))
                segment_features.append(np.mean(channel))
                segment_features.append(np.std(channel))
                segment_features.append(skew(channel))
                segment_features.append(kurtosis(channel))
                segment_features.append(np.percentile(channel, 25))
                segment_features.append(np.percentile(channel, 50))
                segment_features.append(np.percentile(channel, 75))
                segment_features.append(np.mean(np.abs(channel - np.mean(channel))))
                segment_features.append(np.sum(np.square(channel)))
                # Frequency-domain features
                fft_vals = np.abs(fft(channel))
                segment_features.append(np.sum(np.square(fft_vals)))  # Spectral energy
                # Normalize the spectrum to a distribution; the epsilon avoids log2(0).
                p = fft_vals / (np.sum(fft_vals) + 1e-12)
                segment_features.append(-np.sum(p * np.log2(p + 1e-12)))  # Spectral entropy
                segment_features.append(np.argmax(fft_vals))  # Index of the dominant frequency bin
            features.append(segment_features)
        scaler = MinMaxScaler()
        return scaler.fit_transform(np.array(features))


# --- Main Execution ---

def main():
    """
    Main function to run the comparative study.
    """
    # --- Configuration ---
    # NOTE: This is a placeholder for loading the actual datasets.
    # In a real scenario, you would load the OPPORTUNITY and CogAge datasets here.
    # For demonstration, we'll use random data.

    # OPPORTUNITY Dataset (example)
    OPP_TIMESTEPS = 64
    OPP_FEATURES = 107
    OPP_STATE_CLASSES = 5  # (W-NULL)
    OPP_BEHAVIORAL_CLASSES = 18  # (W-NULL), not used in the demo below

    # CogAge Dataset (example)
    COGAGE_TIMESTEPS = 4 * 200  # 4 seconds at 200Hz
    COGAGE_FEATURES = 8  # placeholder channel count for the dummy data
    COGAGE_STATE_CLASSES = 6
    COGAGE_BEHAVIORAL_CLASSES = 53  # not used in the demo below

    # --- Create Dummy Data for Demonstration ---
    def create_dummy_data(samples, timesteps, features, classes):
        X = np.random.rand(samples, timesteps, features)
        y = np.random.randint(0, classes, size=(samples,))
        return X, y

    X_opp, y_opp = create_dummy_data(1000, OPP_TIMESTEPS, OPP_FEATURES, OPP_STATE_CLASSES)
    X_cog, y_cog = create_dummy_data(500, COGAGE_TIMESTEPS, COGAGE_FEATURES, COGAGE_STATE_CLASSES)

    # --- Initialize Processors and Models ---
    opp_processor = DataProcessor(timesteps=OPP_TIMESTEPS)
    cogage_processor = DataProcessor(timesteps=COGAGE_TIMESTEPS)
    har_models_opp = HARModels(OPP_STATE_CLASSES, OPP_TIMESTEPS, OPP_FEATURES)
    har_models_cogage = HARModels(COGAGE_STATE_CLASSES, COGAGE_TIMESTEPS, COGAGE_FEATURES)

    # --- Run Experiments ---
    # Example: Running the LSTM model on the OPPORTUNITY dataset
    print("--- Running LSTM on OPPORTUNITY (State) ---")

    # Preprocessing: normalize each channel across the whole dataset.
    # The dummy data is already windowed, so segment_data() (meant for
    # continuous recordings) is not needed here.
    X_opp_norm = opp_processor.z_score_normalize(
        X_opp.reshape(-1, OPP_FEATURES)).reshape(X_opp.shape)

    # Get and compile model
    lstm_model = har_models_opp.get_lstm_model()
    lstm_model.compile(optimizer=Adam(), loss='sparse_categorical_crossentropy', metrics=['accuracy'])
    lstm_model.summary()

    # Train model
    lstm_model.fit(X_opp_norm, y_opp, epochs=10, batch_size=64, validation_split=0.2)

    # Evaluation (on a test set, here we use the same data for simplicity)
    y_pred_prob = lstm_model.predict(X_opp_norm)
    y_pred = np.argmax(y_pred_prob, axis=1)

    print("\n--- Evaluation Metrics ---")
    print(f"Average F1-Score: {f1_score(y_opp, y_pred, average='macro')}")
    print(f"Accuracy: {accuracy_score(y_opp, y_pred)}")

    # MAP and AUC are computed here on one-hot encoded labels
    y_opp_one_hot = tf.keras.utils.to_categorical(y_opp, num_classes=OPP_STATE_CLASSES)
    print(f"Mean Average Precision (MAP): {average_precision_score(y_opp_one_hot, y_pred_prob, average='macro')}")
    print(f"AUC: {roc_auc_score(y_opp_one_hot, y_pred_prob, multi_class='ovr', average='macro')}")

    # Example: Running SVM on the CogAge dataset
    print("\n--- Running SVM on CogAge (State) ---")

    # Preprocessing and Feature Engineering (the dummy data is already windowed)
    X_cog_norm = cogage_processor.z_score_normalize(
        X_cog.reshape(-1, COGAGE_FEATURES)).reshape(X_cog.shape)
    X_cog_hc_features = cogage_processor.extract_hand_crafted_features(X_cog_norm)

    # Train SVM
    svm_model = SVC(kernel='linear', C=1.0, probability=True)
    svm_model.fit(X_cog_hc_features, y_cog)

    # Evaluation
    y_pred_svm = svm_model.predict(X_cog_hc_features)
    y_pred_prob_svm = svm_model.predict_proba(X_cog_hc_features)

    print("\n--- Evaluation Metrics ---")
    print(f"Average F1-Score: {f1_score(y_cog, y_pred_svm, average='macro')}")
    print(f"Accuracy: {accuracy_score(y_cog, y_pred_svm)}")

    y_cog_one_hot = tf.keras.utils.to_categorical(y_cog, num_classes=COGAGE_STATE_CLASSES)
    print(f"Mean Average Precision (MAP): {average_precision_score(y_cog_one_hot, y_pred_prob_svm, average='macro')}")
    print(f"AUC: {roc_auc_score(y_cog_one_hot, y_pred_prob_svm, multi_class='ovr', average='macro')}")


if __name__ == '__main__':
    main()
```

References

Ciortuz, G., Hozhabr Pour, H., Irshad, M. T., Nisar, M. A., Huang, X., & Fudickar, S. (2025). A comparative study on deep learning architectures for wearable human activity recognition in healthcare. Neurocomputing, 650, 130911. https://doi.org/10.1016/j.neucom.2025.130911

Chavarriaga, R., et al. (2013). The Opportunity challenge: A benchmark database for on-body sensor-based activity recognition. Pattern Recognition Letters.

Li, F., et al. (2020). Deep transfer learning for time series data based on sensor modality classification. Sensors.