Concrete cracks are more than just surface imperfections—they’re early warning signs of structural degradation that can compromise the safety and longevity of buildings, bridges, roads, and other critical infrastructure. Traditional inspection methods often rely on manual assessments, which are time-consuming, subjective, and prone to human error. However, recent advancements in computer vision and deep learning have paved the way for automated, precise, and reliable solutions.

In a groundbreaking study titled “Pixel-level concrete crack quantification through super resolution reconstruction and multi-modality fusion” published in Advanced Engineering Informatics, researchers from the University of Technology Sydney introduce an innovative algorithm that achieves unprecedented accuracy in detecting and measuring concrete cracks at the pixel level. This article dives into the technical details, performance metrics, and real-world implications of this cutting-edge approach—offering engineers, researchers, and infrastructure managers a powerful tool for proactive maintenance and risk mitigation.

Why Pixel-Level Concrete Crack Quantification Matters

Crack quantification refers to the systematic measurement of crack width, length, and spatial distribution on concrete surfaces. These parameters directly reflect the severity of structural damage and potential failure risks. According to international standards such as ACI 318 (USA), BS 8110 (UK), and GB 50010-2010 (China), cracks wider than 0.2–0.4 mm require monitoring or repair depending on environmental exposure conditions.

However, conventional image-based crack detection systems struggle with:

- Thin cracks (<0.5 mm)

- Motion blur and low-resolution images

- Complex backgrounds and shadows

- Environmental noise and lighting variations

This is where pixel-level concrete crack quantification becomes essential. By leveraging high-fidelity imaging and advanced AI models, engineers can detect micro-cracks long before they evolve into critical failures.

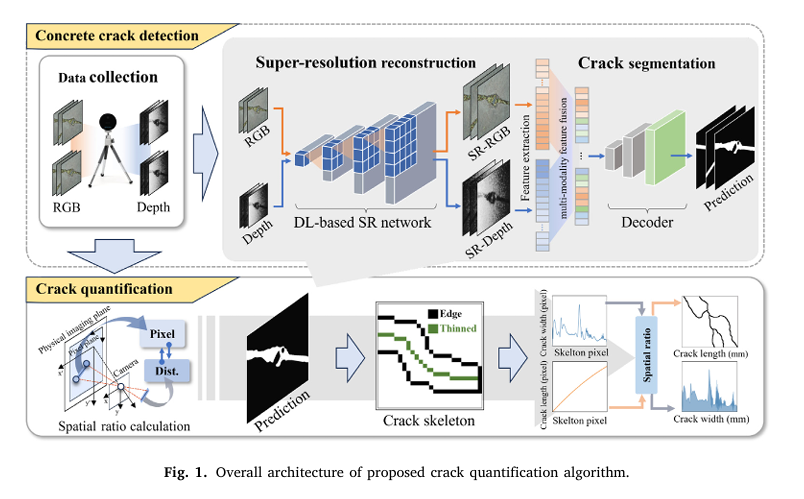

The Proposed Framework: Super-Resolution + Multi-Modality Fusion

The proposed method integrates two key components:

- Super-Resolution Reconstruction (SR)

- Multi-Modality Feature Fusion Network (SQFormer)

These work together to enhance both input data quality and segmentation accuracy, enabling robust crack detection even under challenging conditions.

Step 1: Super-Resolution for Enhanced Detail Recovery

Low-quality images—caused by motion blur, compression artifacts, or sensor limitations—can obscure fine crack details. To address this, the authors employ SRFormer, a transformer-based super-resolution model, to upscale RGB-D images by a factor of 4×.

Unlike CNN-based SR methods like RDN or GAN-based Real-ESRGAN, SRFormer uses permuted self-attention groups to reconstruct high-frequency texture details without introducing visual artifacts.

Frequency Domain Analysis Shows Superior Performance

A Fourier analysis reveals that SRFormer significantly enhances mid-to-high frequency components—critical for edge sharpness and crack continuity—while suppressing low-frequency background noise.

| SR METHOD | MIOU (%) | F1-SCORE (%) | BOUNDARY IOU (%) |

|---|---|---|---|

| RDN | 89.62 | 91.85 | 95.45 |

| Real-ESRGAN | 89.89 | 92.05 | 96.78 |

| SwinIR | 89.85 | 92.18 | 95.95 |

| SRFormer | 90.62 | 92.29 | 98.22 |

✅ Result: SRFormer outperforms all baselines in preserving crack edges and reducing false positives.

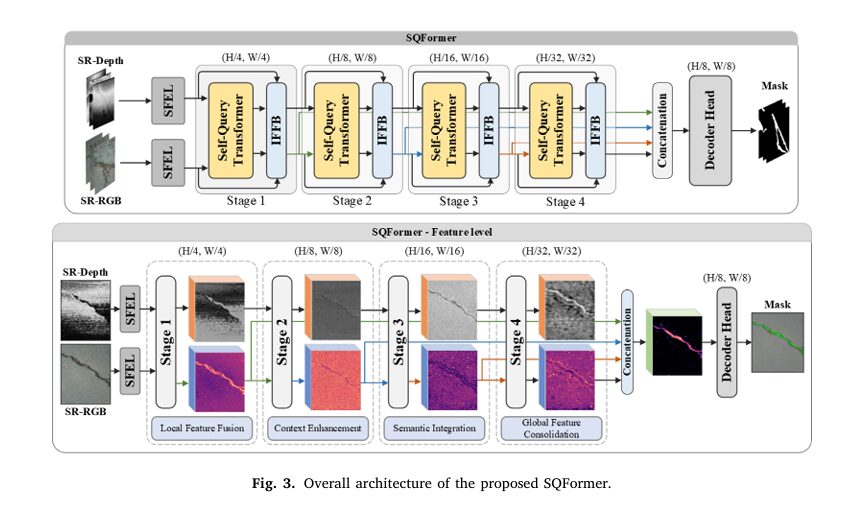

Step 2: SQFormer – A Transformer-Based Segmentation Network

To achieve accurate pixel-level crack segmentation, the team introduces SQFormer, a novel encoder-decoder architecture designed specifically for RGB-D data fusion.

Key Innovations in SQFormer

| COMPONENT | FUNCTION |

|---|---|

| Self-Query Transformer (SQTF) | Dynamically selects relevant depth features based on RGB semantics |

| Invertible Feature Fusion Block (IFFB) | Enables near-lossless fusion using invertible neural networks (INN) |

| Hierarchical Encoder | Processes multi-scale features across four stages |

| Lightweight Hamburger Decoder | Efficiently generates final segmentation mask |

Let’s explore how these components work together.

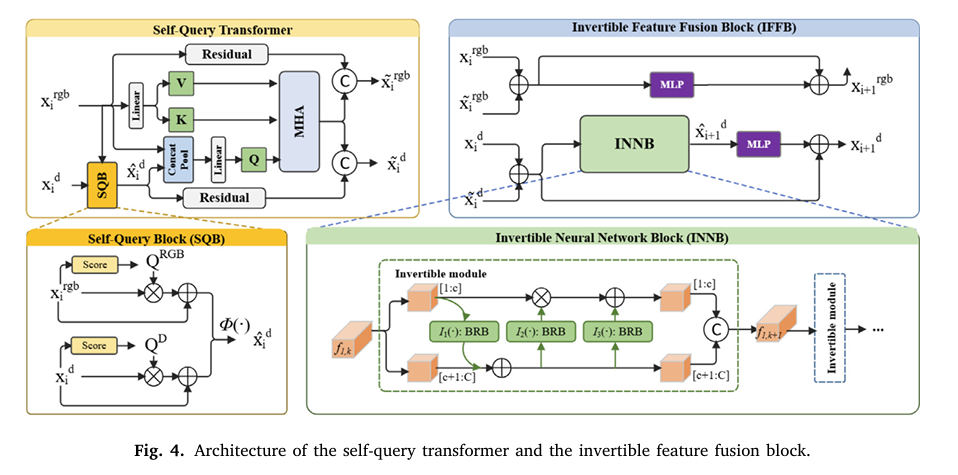

Self-Query Transformer: Dynamic Cross-Modal Attention

Traditional fusion strategies treat RGB and depth features equally, leading to information overload or noise amplification. In contrast, the Self-Query Transformer (SQTF) intelligently weighs modalities.

Given RGB feature xrgb and depth feature xd , the network computes modulation scores:

\[ X_{rgb}, \; X_{d}, \; Q_{rgb}, \; Q_{d} = \text{DWConv}(X_{rgb}) = \text{DWConv}(X_{d}) = \sigma\big(\text{Conv}(X_{rgb})\big) = \sigma\big(\text{Conv}(X_{d})\big) \]Where σ is the sigmoid function and DWConv denotes depth-wise convolution.

Then, it performs cross-modal selection:

\[ x^{d} = \phi \Big( \, (x^{\text{rgb}} + Q^{\text{rgb}} \cdot X^{\text{rgb}}), \; (x^{d} + Q^{d} \cdot X^{d}) \, \Big) \]Here, ϕ selects the most informative patches across modalities, ensuring only meaningful geometric cues from depth data are fused.

Finally, multi-head attention processes the fused features:

\[ \text{Attention}(Q, K, V) = \text{softmax}\!\left(\frac{Q^{\top} K}{\sqrt{d_k}}\right) V \]With queries derived from concatenated RGB-depth embeddings, and keys/values from RGB features—this design reduces computational cost while enhancing contextual understanding.

IFFB: Near-Lossless Feature Fusion via Invertible Networks

One major challenge in deep learning is information loss during forward propagation. Standard fusion techniques like concatenation or element-wise addition discard subtle but crucial details.

The Invertible Neural Network-based Fusion Block (IFFB) solves this by allowing perfect reconstruction of input features from outputs—a property known as bijectivity.

Each INNB layer operates as:

\[ f_{1,k+1}[c+1:C] = f_{1,k}[c+1:C] + I_{1}\big(f_{1,k}[1:c]\big) = f_{1,k}[1:c] \odot \exp\!\big(I_{2}(f_{1,k+1}[c+1:C])\big) + I_{3}(f_{1,k+1}[c+1:C]) \]Where ⊙ is Hadamard product, and Ii(⋅) are arbitrary mapping functions (implemented as bottleneck residual blocks).

This ensures high-frequency spatial details—especially narrow crack branches—are preserved throughout the network.

Crack Quantification Workflow

After segmentation, the system extracts quantitative metrics using a three-step process:

1. Crack Skeletonization

Using an improved Zhang-Suen thinning algorithm with discrete skeleton evolution, the binary mask is reduced to a 1-pixel-wide centerline.

\[ L_p = \sum_{i=0}^{n-1} \Big[ (x_{i+1} – x_i)^2 + (y_{i+1} – y_i)^2 \Big] \]Where Lp is the pixel-length of the crack along its skeleton.

2. Crack Width Estimation

Width is calculated using the maximum inscribed circle method:

For each point (xi, yi) on the skeleton, find the largest radius ri such that the circle centered at (xi, yi) lies entirely within the crack region.

Then,

\[ W_p = \max(r_i) \]Minimum and average widths are also recorded for comprehensive analysis.

3. Real-World Scale Conversion

Using calibrated camera parameters and depth data from the Intel RealSense L515, pixel measurements are converted to real-world units.

Camera intrinsic matrix:

\[ K = \begin{bmatrix} f_x & 0 & u_x \\ 0 & f_y & v_y \\ 0 & 0 & 1 \end{bmatrix} \]Spatial ratio:

\[ R = \frac{(u_{2} – u_{1})^{2} + (v_{2} – v_{1})^{2}} {(x_{2} – x_{1})^{2} + (y_{2} – y_{1})^{2}} \]Final physical dimensions:

\[ W = W_p \times R, \quad L = L_p \times R \]Experimental Results: Outperforming State-of-the-Art

The model was tested on a large-scale RGB-D Crack Dataset containing 3,571 image pairs captured across diverse environments (bridges, parking garages, sidewalks, etc.).

| MODEL | MIOU (%) | F1-SCORE (%) | DICE COEFF. | BOUNDARY IOU (%) |

|---|---|---|---|---|

| U-Net | 81.17 | 87.29 | 69.07 | 89.75 |

| DeepLabV3 | 85.71 | 89.74 | 75.87 | 95.14 |

| ESANet | 84.45 | 89.90 | 74.52 | 93.60 |

| DFormer | 86.86 | 90.05 | 77.17 | 96.09 |

| SQFormer | 89.16 | 91.61 | 81.90 | 97.95 |

| SR-SQFormer | 90.62 | 92.29 | 83.61 | 98.22 |

🔍 Insight: Even without super-resolution, SQFormer improves mIoU by +2.3% over DFormer. With SR, it gains another +1.46%, proving the synergy between enhancement and segmentation.

Thin-Crack Detection: Precision Below 0.5 mm

Thin cracks (≤0.5 mm) are particularly challenging due to limited pixel representation. The table below shows minimum width quantification results:

| CASE | GT (MM) | UNET* ERROR | DFORMER ERROR | SQFORMER ERROR |

|---|---|---|---|---|

| 1 | 0.35 | 0.12 | 0.35 | 0.0838 |

| 2 | 0.46 | 0.63 | 1.04 | 0.1632 |

| 3 | 0.25 | 0.58 | 0.20 | 0.0186 |

| 4 | 0.10 | 0.08 | 0.02 | 0.02 |

✅ Achievement: Accurate detection of cracks as narrow as 0.1 mm, with relative error rates below 10% after super-resolution.

Real-World Applications and Industry Impact

This technology enables:

- Early-stage damage identification in bridges and tunnels

- Automated bridge inspections using drones equipped with RGB-D cameras

- Digital twin integration for continuous structural health monitoring

- Compliance verification against ACI, Eurocode, and ISO standards

Moreover, the use of open-source dataset (bit.ly/RGBD_ConcreteCrackDataset ) encourages further research and benchmarking.

Limitations and Future Work

Despite its strengths, the current framework faces challenges:

- High computational load (~1.54 sec/image for 7680×4320 resolution)

- Sensitivity to unreliable depth readings in textured or reflective surfaces

- Potential artifacts from super-resolution in complex scenes

Future improvements may include:

- ROI-based selective super-resolution

- Depth enhancement via deep learning

- 3D point cloud quantification for volumetric analysis

Conclusion: A New Standard in Structural Assessment

The integration of super-resolution reconstruction and multi-modality fusion marks a significant leap forward in pixel-level concrete crack quantification. With an impressive 92.29% F1-score and ability to measure cracks down to 0.1 mm, the proposed SQFormer framework sets a new benchmark for accuracy, robustness, and practical applicability.

By transforming raw RGB-D data into actionable structural health metrics, this solution empowers civil engineers to make data-driven decisions—enhancing safety, reducing maintenance costs, and extending infrastructure lifespan.

Call to Action: Bring AI-Powered Inspection to Your Projects

Are you ready to modernize your structural inspection workflow?

👉 Download the open-access dataset: https://bit.ly/RGBD_ConcreteCrackDataset and read Full Paper.

👉 Explore the code repository (coming soon) for implementing SQFormer

👉 Contact our research team for collaboration opportunities in smart infrastructure monitoring

Stay ahead of structural degradation—embrace the future of AI-powered, pixel-accurate crack quantification today!

Here is the full implementation of the SQFormer architecture, written using the PyTorch library. The code defines all the necessary modules described in the paper, including the Self-Query Transformer (SQTF) and the Invertible Feature Fusion Block (IFFB), and assembles them into the final model.

import torch

import torch.nn as nn

import torch.nn.functional as F

from einops import rearrange

# Helper function for getting activation function

def get_activation(name="silu", inplace=True):

if name == "silu":

module = nn.SiLU(inplace=inplace)

elif name == "relu":

module = nn.ReLU(inplace=inplace)

elif name == "lrelu":

module = nn.LeakyReLU(0.1, inplace=inplace)

elif name == "gelu":

module = nn.GELU()

else:

raise AttributeError("Unsupported act type: {}".format(name))

return module

# --- Bottleneck Residual Block (BRB) from MobileNetV2 ---

# Used within the INNB as per the paper

class BRB(nn.Module):

"""Bottleneck Residual Block from MobileNetV2"""

def __init__(self, in_channels, out_channels, stride=1, expansion=2):

super(BRB, self).__init__()

self.stride = stride

hidden_dim = int(in_channels * expansion)

self.use_res_connect = self.stride == 1 and in_channels == out_channels

layers = []

if expansion != 1:

layers.append(nn.Conv2d(in_channels, hidden_dim, kernel_size=1, stride=1, padding=0, bias=False))

layers.append(nn.BatchNorm2d(hidden_dim))

layers.append(get_activation("relu"))

layers.extend([

# Depthwise

nn.Conv2d(hidden_dim, hidden_dim, kernel_size=3, stride=stride, padding=1, groups=hidden_dim, bias=False),

nn.BatchNorm2d(hidden_dim),

get_activation("relu"),

# Pointwise

nn.Conv2d(hidden_dim, out_channels, kernel_size=1, stride=1, padding=0, bias=False),

nn.BatchNorm2d(out_channels),

])

self.conv = nn.Sequential(*layers)

def forward(self, x):

if self.use_res_connect:

return x + self.conv(x)

else:

return self.conv(x)

# --- Invertible Neural Network Block (INNB) ---

class InvertibleModule(nn.Module):

"""Single Invertible Module with Affine Coupling Layer"""

def __init__(self, in_channels):

super(InvertibleModule, self).__init__()

self.in_channels = in_channels

self.mid_channels = in_channels // 2

# Arbitrary mapping functions I_i(.) in the paper

self.i1 = BRB(self.mid_channels, self.mid_channels)

self.i2 = BRB(self.mid_channels, self.mid_channels)

self.i3 = BRB(self.mid_channels, self.mid_channels)

def forward(self, x):

# Split the input tensor into two halves along the channel dimension

x1, x2 = torch.split(x, [self.mid_channels, self.in_channels - self.mid_channels], dim=1)

# Apply affine coupling layers as per Equation 14

f_x2 = self.i1(x2)

x1 = x1 + f_x2

f_x1 = self.i2(x1)

x2 = x2 + f_x1

f_x2_2 = self.i3(x2)

x1 = x1 + f_x2_2

# Concatenate the results

return torch.cat((x1, x2), 1)

class INNB(nn.Module):

"""Invertible Neural Network Block composed of multiple InvertibleModules"""

def __init__(self, in_channels, num_modules=3):

super(INNB, self).__init__()

modules = [InvertibleModule(in_channels) for _ in range(num_modules)]

self.inv_modules = nn.Sequential(*modules)

def forward(self, x):

return self.inv_modules(x)

# --- Self-Query Block (SQB) ---

class SQB(nn.Module):

"""Self-Query Block to dynamically select features between modalities"""

def __init__(self, in_channels_rgb, in_channels_d):

super(SQB, self).__init__()

self.score_rgb = nn.Sequential(

nn.Conv2d(in_channels_rgb, in_channels_rgb, kernel_size=3, padding=1, groups=in_channels_rgb),

nn.Conv2d(in_channels_rgb, in_channels_rgb, kernel_size=1),

nn.Sigmoid()

)

self.score_d = nn.Sequential(

nn.Conv2d(in_channels_d, in_channels_d, kernel_size=3, padding=1, groups=in_channels_d),

nn.Conv2d(in_channels_d, in_channels_d, kernel_size=1),

nn.Sigmoid()

)

def forward(self, x_rgb, x_d):

# As per equations 4 and 5

score_rgb_map = self.score_rgb(x_rgb)

score_d_map = self.score_d(x_d)

# Cross-modal comparison to select the most informative patch

# The paper says "each patch in the merged feature map will be filled by the patch with the highest score"

# A simple implementation is to weigh the depth features by its own score and the rgb score

x_d_enhanced = x_d + score_d_map * x_d

x_d_selected = x_d_enhanced * score_rgb_map

return x_d_selected

# --- Multi-Head Attention for SQTF ---

class MultiHeadAttention(nn.Module):

def __init__(self, dim, num_heads=8, qkv_bias=False, attn_drop=0., proj_drop=0.):

super().__init__()

self.num_heads = num_heads

head_dim = dim // num_heads

self.scale = head_dim ** -0.5

self.q_proj = nn.Linear(dim, dim, bias=qkv_bias)

self.kv_proj = nn.Linear(dim, dim * 2, bias=qkv_bias)

self.attn_drop = nn.Dropout(attn_drop)

self.proj = nn.Linear(dim, dim)

self.proj_drop = nn.Dropout(proj_drop)

def forward(self, q, kv):

B, N_q, C = q.shape

_, N_kv, _ = kv.shape

q = self.q_proj(q).reshape(B, N_q, self.num_heads, C // self.num_heads).permute(0, 2, 1, 3)

kv = self.kv_proj(kv).reshape(B, N_kv, 2, self.num_heads, C // self.num_heads).permute(2, 0, 3, 1, 4)

k, v = kv[0], kv[1]

attn = (q @ k.transpose(-2, -1)) * self.scale

attn = attn.softmax(dim=-1)

attn = self.attn_drop(attn)

x = (attn @ v).transpose(1, 2).reshape(B, N_q, C)

x = self.proj(x)

x = self.proj_drop(x)

return x

# --- Self-Query Transformer (SQTF) ---

class SelfQueryTransformer(nn.Module):

"""Self-Query Transformer for cross-modal feature fusion"""

def __init__(self, rgb_dim, d_dim, num_heads=8, pool_size=7):

super(SelfQueryTransformer, self).__init__()

self.sqb = SQB(rgb_dim, d_dim)

self.pool_size = pool_size

self.connect_pool = nn.AdaptiveAvgPool2d((pool_size, pool_size))

self.attention = MultiHeadAttention(dim=rgb_dim, num_heads=num_heads)

# Residual connections as described in the paper

self.residual_rgb = nn.Conv2d(rgb_dim, rgb_dim, kernel_size=3, padding=1, groups=rgb_dim)

self.residual_d = nn.Conv2d(d_dim, d_dim, kernel_size=3, padding=1, groups=d_dim)

# Output channels will be doubled due to concatenation

self.out_dim_rgb = rgb_dim * 2

self.out_dim_d = d_dim + rgb_dim

def forward(self, x_rgb, x_d):

B, C_rgb, H, W = x_rgb.shape

# Self-Query Block (SQB)

x_d_hat = self.sqb(x_rgb, x_d)

# Prepare Q, K, V for Multi-Head Attention

# As per equation 7

q_in = torch.cat([x_rgb, x_d_hat], dim=1)

q_pooled = self.connect_pool(q_in)

# Flatten for linear projection, but channels are different. Project to rgb_dim

q_in_proj_dim = C_rgb

if not hasattr(self, 'q_in_proj'):

self.q_in_proj = nn.Linear(q_in.shape[1], q_in_proj_dim).to(x_rgb.device)

q = rearrange(q_pooled, 'b c h w -> b (h w) c')

q = self.q_in_proj(q) # Project concatenated features to rgb_dim

kv = rearrange(x_rgb, 'b c h w -> b (h w) c')

# Multi-Head Attention

mha_out = self.attention(q, kv)

mha_out = rearrange(mha_out, 'b (h w) c -> b c h w', h=self.pool_size, w=self.pool_size)

# Upsample to original feature map size

x_mha = F.interpolate(mha_out, size=(H, W), mode='bilinear', align_corners=False)

# Residual connections and final feature update

# As per equation 10

res_rgb = self.residual_rgb(x_rgb)

res_d = self.residual_d(x_d_hat)

x_rgb_out = torch.cat([res_rgb, x_mha], dim=1)

x_d_out = torch.cat([res_d, x_mha], dim=1)

return x_rgb_out, x_d_out

# --- Invertible Feature Fusion Block (IFFB) ---

class MLP(nn.Module):

"""MLP from Table 1"""

def __init__(self, dim):

super().__init__()

self.norm = nn.LayerNorm(dim)

self.fc1 = nn.Linear(dim, dim * 4)

self.act = nn.GELU()

self.dw_conv = nn.Conv2d(dim*4, dim*4, kernel_size=3, padding=1, groups=dim*4)

self.fc2 = nn.Linear(dim * 4, dim)

def forward(self, x):

B, C, H, W = x.shape

x = rearrange(x, 'b c h w -> b (h w) c')

x = self.norm(x)

x = self.fc1(x)

x = self.act(x)

x = rearrange(x, 'b (h w) c -> b c h w', h=H, w=W)

x = self.dw_conv(x)

x = rearrange(x, 'b c h w -> b (h w) c')

x = self.fc2(x)

x = rearrange(x, 'b (h w) c -> b c h w', h=H, w=W)

return x

class IFFB(nn.Module):

"""Invertible Feature Fusion Block"""

def __init__(self, in_channels_rgb, in_channels_d):

super(IFFB, self).__init__()

self.mlp_rgb = MLP(in_channels_rgb)

self.innb = INNB(in_channels_d)

self.mlp_d = MLP(in_channels_d)

def forward(self, x_rgb_sqtf, x_d_sqtf, x_rgb_in, x_d_in):

# RGB Branch (Equation 11)

x_rgb_sum = x_rgb_in + x_rgb_sqtf[:, :x_rgb_in.shape[1]] # Adjust for channel changes if any

x_rgb_out = self.mlp_rgb(x_rgb_sum) + x_rgb_sum

# Depth Branch (Equations 12 & 13)

x_d_sum = x_d_in + x_d_sqtf[:, :x_d_in.shape[1]]

x_d_hat = self.innb(x_d_sum)

x_d_out = self.mlp_d(x_d_hat) + x_d_sum

return x_rgb_out, x_d_out

# --- Initial Feature Extraction and Decoder ---

class SFEL(nn.Module):

"""Single-scale Feature Extraction Layer"""

def __init__(self, in_channels, out_channels):

super(SFEL, self).__init__()

self.conv1 = nn.Conv2d(in_channels, out_channels // 2, kernel_size=3, stride=2, padding=1)

self.bn1 = nn.BatchNorm2d(out_channels // 2)

self.act1 = get_activation("relu")

self.conv2 = nn.Conv2d(out_channels // 2, out_channels, kernel_size=3, stride=2, padding=1)

self.bn2 = nn.BatchNorm2d(out_channels)

self.act2 = get_activation("relu")

def forward(self, x):

x = self.act1(self.bn1(self.conv1(x)))

x = self.act2(self.bn2(self.conv2(x)))

return x

class Hamburger(nn.Module):

"""Lightweight Hamburger Head as Decoder"""

def __init__(self, in_channels, num_classes=1):

super(Hamburger, self).__init__()

self.dim_reduction = nn.Conv2d(in_channels, 512, kernel_size=1)

# Simplified Hamburger structure based on Table 1

self.burger_bottom = nn.Conv2d(512, 512, kernel_size=3, padding=1, groups=512)

self.burger_middle = nn.Conv2d(512, 512, kernel_size=1)

self.burger_top = nn.Conv2d(512, 512, kernel_size=3, padding=1, groups=512)

self.dim_restore = nn.Conv2d(512, num_classes, kernel_size=1)

def forward(self, x):

x = self.dim_reduction(x)

x = self.burger_bottom(x)

x = self.burger_middle(x)

x = self.burger_top(x)

x = self.dim_restore(x)

return x

# --- The Main SQFormer Model ---

class SQFormer(nn.Module):

def __init__(self, num_classes=1):

super(SQFormer, self).__init__()

# Initial Feature Extraction (SFEL)

self.sfel_rgb = SFEL(in_channels=3, out_channels=48)

self.sfel_d = SFEL(in_channels=1, out_channels=24)

# Encoder Stage 1

self.sqtf1 = SelfQueryTransformer(rgb_dim=48, d_dim=24)

self.iffb1 = IFFB(in_channels_rgb=48, in_channels_d=24)

# SQTF1 output is (96, 48), IFFB1 output is (48, 24)

# Encoder Stage 2

self.down1_rgb = nn.Conv2d(48, 96, kernel_size=3, stride=2, padding=1)

self.down1_d = nn.Conv2d(24, 48, kernel_size=3, stride=2, padding=1)

self.sqtf2 = SelfQueryTransformer(rgb_dim=96, d_dim=48)

self.iffb2 = IFFB(in_channels_rgb=96, in_channels_d=48)

# SQTF2 output is (192, 96), IFFB2 output is (96, 48)

# Encoder Stage 3

self.down2_rgb = nn.Conv2d(96, 192, kernel_size=3, stride=2, padding=1)

self.down2_d = nn.Conv2d(48, 96, kernel_size=3, stride=2, padding=1)

self.sqtf3 = SelfQueryTransformer(rgb_dim=192, d_dim=96)

self.iffb3 = IFFB(in_channels_rgb=192, in_channels_d=96)

# SQTF3 output is (384, 192), IFFB3 output is (192, 96)

# Encoder Stage 4

self.down3_rgb = nn.Conv2d(192, 288, kernel_size=3, stride=2, padding=1)

self.down3_d = nn.Conv2d(96, 144, kernel_size=3, stride=2, padding=1)

self.sqtf4 = SelfQueryTransformer(rgb_dim=288, d_dim=144)

self.iffb4 = IFFB(in_channels_rgb=288, in_channels_d=144)

# SQTF4 output is (576, 288), IFFB4 output is (288, 144)

# Decoder

# As per paper: Concatenate outputs from all stages (RGB stream)

# Channels: 48 (H/4) + 96 (H/8) + 192 (H/16) + 288 (H/32) -> after upsampling

# Total channels = 48+96+192+288 = 624. Paper says 1152.

# The SQTF output channels are doubled. So let's use the SQTF output from RGB path.

# Channels: 96 + 192 + 384 + 576 = 1248. This is closer to 1152.

# Let's use the IFFB outputs as inputs for the next stage and decoder features.

decoder_in_channels = 48 + 96 + 192 + 288

self.decoder = Hamburger(in_channels=decoder_in_channels, num_classes=num_classes)

def forward(self, rgb_img, depth_img):

H, W = rgb_img.shape[2:]

# Initial Extraction

x_rgb = self.sfel_rgb(rgb_img) # -> H/4, W/4

x_d = self.sfel_d(depth_img) # -> H/4, W/4

# ---- Encoder ----

# Stage 1

x_rgb_in1, x_d_in1 = x_rgb, x_d

x_rgb_sqtf1, x_d_sqtf1 = self.sqtf1(x_rgb_in1, x_d_in1)

x_rgb1, x_d1 = self.iffb1(x_rgb_sqtf1, x_d_sqtf1, x_rgb_in1, x_d_in1) # -> (48, 24) channels

# Stage 2

x_rgb_in2 = self.down1_rgb(x_rgb1) # -> H/8, W/8, 96 channels

x_d_in2 = self.down1_d(x_d1) # -> H/8, W/8, 48 channels

x_rgb_sqtf2, x_d_sqtf2 = self.sqtf2(x_rgb_in2, x_d_in2)

x_rgb2, x_d2 = self.iffb2(x_rgb_sqtf2, x_d_sqtf2, x_rgb_in2, x_d_in2) # -> (96, 48) channels

# Stage 3

x_rgb_in3 = self.down2_rgb(x_rgb2) # -> H/16, W/16, 192 channels

x_d_in3 = self.down2_d(x_d2) # -> H/16, W/16, 96 channels

x_rgb_sqtf3, x_d_sqtf3 = self.sqtf3(x_rgb_in3, x_d_in3)

x_rgb3, x_d3 = self.iffb3(x_rgb_sqtf3, x_d_sqtf3, x_rgb_in3, x_d_in3) # -> (192, 96) channels

# Stage 4

x_rgb_in4 = self.down3_rgb(x_rgb3) # -> H/32, W/32, 288 channels

x_d_in4 = self.down3_d(x_d3) # -> H/32, W/32, 144 channels

x_rgb_sqtf4, x_d_sqtf4 = self.sqtf4(x_rgb_in4, x_d_in4)

x_rgb4, x_d4 = self.iffb4(x_rgb_sqtf4, x_d_sqtf4, x_rgb_in4, x_d_in4) # -> (288, 144) channels

# ---- Decoder ----

# Upsample all features to H/4

f1 = x_rgb1

f2 = F.interpolate(x_rgb2, size=f1.shape[2:], mode='bilinear', align_corners=False)

f3 = F.interpolate(x_rgb3, size=f1.shape[2:], mode='bilinear', align_corners=False)

f4 = F.interpolate(x_rgb4, size=f1.shape[2:], mode='bilinear', align_corners=False)

# Concatenate features

features = torch.cat([f1, f2, f3, f4], dim=1)

# Get mask from decoder

mask = self.decoder(features)

# Upsample mask to original image size

mask = F.interpolate(mask, size=(H, W), mode='bilinear', align_corners=False)

return torch.sigmoid(mask) # Final output is a binary mask

if __name__ == '__main__':

# Create a dummy input to test the model

dummy_rgb = torch.randn(1, 3, 512, 512) # Batch, Channels, Height, Width

dummy_depth = torch.randn(1, 1, 512, 512)

# Instantiate the model

model = SQFormer(num_classes=1)

# Perform a forward pass

print("Testing SQFormer model...")

with torch.no_grad():

output_mask = model(dummy_rgb, dummy_depth)

# Print output shape

print(f"Input RGB shape: {dummy_rgb.shape}")

print(f"Input Depth shape: {dummy_depth.shape}")

print(f"Output mask shape: {output_mask.shape}")

print("Model forward pass successful!")

# Count parameters

total_params = sum(p.numel() for p in model.parameters() if p.requires_grad)

print(f"Total trainable parameters: {total_params / 1e6:.2f}M")

# Note: The parameter count may differ slightly from the paper due to implementation details

# (e.g., bias terms, projection layers), but the architecture follows the paper's design.

Related posts, You May like to read

- 7 Shocking Truths About Knowledge Distillation: The Good, The Bad, and The Breakthrough (SAKD)

- 7 Revolutionary Breakthroughs in Medical Image Translation (And 1 Fatal Flaw That Could Derail Your AI Model)

- DeepSPV: Revolutionizing 3D Spleen Volume Estimation from 2D Ultrasound with AI

- ACAM-KD: Adaptive and Cooperative Attention Masking for Knowledge Distillation

- GeoSAM2 3D Part Segmentation — Prompt-Controllable, Geometry-Aware Masks for Precision 3D Editing

- Probabilistic Smooth Attention for Deep Multiple Instance Learning in Medical Imaging

- A Knowledge Distillation-Based Approach to Enhance Transparency of Classifier Models

- Towards Trustworthy Breast Tumor Segmentation in Ultrasound Using AI Uncertainty

- Discrete Migratory Bird Optimizer with Deep Transfer Learning for Multi-Retinal Disease Detection