Living with type 1 diabetes (T1D) is like walking a metabolic tightrope. Every decision—what to eat, when to exercise, how much insulin to take—can send blood glucose levels soaring or crashing. Among the most unpredictable factors? Physical activity. Exercise dramatically alters glucose metabolism, often leading to dangerous hypoglycemia or delayed hyperglycemia. Yet, current tools for estimating energy expenditure (EE) fall short—especially for people with T1D.

Enter a groundbreaking new AI model that could transform how we manage diabetes. In a 2025 study published in Engineering Applications of Artificial Intelligence, researchers unveiled a hierarchical LSTM network that predicts real-time energy expenditure with high accuracy, using only heart rate and step count from a smartwatch.

Let’s dive into the 7 revolutionary breakthroughs this model delivers, why most existing solutions fail, and how this approach sets a new gold standard.

❌ Why Most Energy Expenditure Models Fail for Type 1 Diabetes

Most commercial fitness trackers estimate EE using generic algorithms based on heart rate (HR) and step count (SC). But here’s the problem: these models are built for healthy populations, not people with T1D.

People with T1D have:

- Higher basal energy expenditure (BEE) due to altered metabolism

- Unstable glucose-insulin dynamics during exercise

- Greater inter- and intra-individual variability

As the study notes, “The activity METs reported in the Compendium of Physical Activities are indeed underestimated” for T1D patients. This mismatch can lead to insulin dosing errors, risking hypoglycemia or hyperglycemia.

Traditional models fail because they:

- Ignore individual metabolic differences

- Lack real-time adaptability

- Use flat, non-hierarchical architectures

🔍 Key Insight: One-size-fits-all models can’t capture the complexity of T1D. Personalization is not optional—it’s essential.

✅ The 7 Revolutionary Breakthroughs of the Hierarchical LSTM Model

This new AI model isn’t just another step counter. It’s a multi-layered, adaptive forecasting system designed specifically for T1D. Here’s what makes it revolutionary:

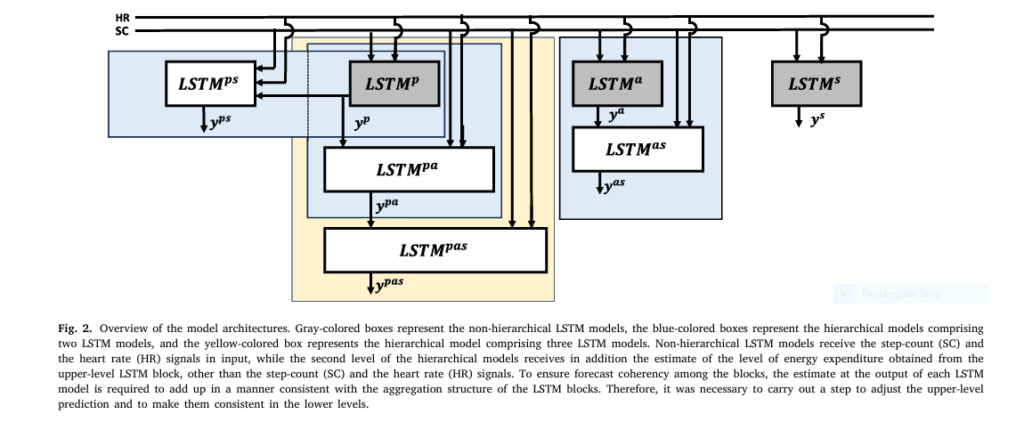

1. It Uses a 3-Level Hierarchical Architecture (Population → Activity → Individual)

Instead of a single model, the system uses three interconnected LSTM networks:

- Population Level: Learns patterns from 33 T1D patients

- Activity-Type Level: Focuses on a single exercise modality, such as aerobic, resistance, or interval training (the study used aerobic exercise)

- Subject Level: Personalizes predictions for a single user

This hierarchy allows the model to leverage large datasets while still adapting to individual quirks.

| LEVEL | DATA SOURCE | PURPOSE |
|---|---|---|
| Population | 33 T1D adults | Capture general trends |
| Activity-Type | Subset doing aerobic exercise | Refine by exercise modality |
| Subject | Single individual | Personalize predictions |

📈 Why It Works: Combines the power of big data with personalization—like having a team of experts who know your body.

2. It Predicts METs in Real-Time (Every 15 Minutes)

The model outputs Metabolic Equivalents (METs), a standard unit of exercise intensity, updated every 15 minutes to match the 15-minute resolution of the smartwatch data used in the study.

- 1 MET = energy at rest (~1 kcal/kg/h)

- Light: 1.6 to <3.0 METs

- Moderate: 3.0 to <6.0 METs

- Vigorous: ≥6.0 METs

This real-time insight could let automated insulin delivery (AID) systems adjust dosing before glucose drops.
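As a rough worked example, and assuming the rule of thumb above (1 MET ≈ 1 kcal per kg of body weight per hour), a 15-minute MET estimate converts to energy expenditure by multiplying by body mass and interval length. The helper name `kcal_per_interval` is hypothetical, and this is an approximation rather than a clinical formula:

```python
def kcal_per_interval(met: float, weight_kg: float, minutes: float = 15.0) -> float:
    """Approximate energy expenditure (kcal) for one interval.

    Uses the rule of thumb 1 MET ~ 1 kcal/kg/h; not a clinical formula.
    """
    hours = minutes / 60.0
    return met * weight_kg * hours


# A 70 kg person at 4 METs (moderate) for one 15-minute interval
print(kcal_per_interval(4.0, 70.0))  # 70.0
```

Summing these per-interval values over a day would give a crude daily activity energy estimate.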

3. It Cuts Error by Over 40% Compared with a Population-Only Model

The study compared 7 models, from simple LSTMs to complex 3-level hierarchies. Selected results:

| MODEL | MAE (METs) | RMSE (METs) |
|---|---|---|
| LSTMp (Population) | 0.77 | 1.04 |
| LSTMs (Individual) | 0.44 | 0.62 |
| LSTMps (Population → Subject) | 0.44 | 0.67 |
| LSTMpas (Full Hierarchy) | 0.42 | 0.62 |

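👉 Lowest error: LSTMpas, the full three-level hierarchy (MAE 0.42 METs). LSTMps, which combines population and individual data, comes close (MAE 0.44 METs, on par with the individual-only LSTMs) with a simpler two-level structure.

💡 Takeaway: Moving from a population-only model to a personalized one cuts MAE by over 40% (0.77 → 0.44 METs or less). Among the personalized models, adding population and activity-level knowledge (LSTMpas) gives a small further gain in MAE, showing that "crowd wisdom" enhances personalization rather than replacing it.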
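MAE averages the absolute errors, while RMSE squares them before averaging, so it weighs large misses more heavily and is never smaller than MAE. The sketch below defines both (helper names are illustrative) and checks the headline figure against the MAE column above: it gives about 42.9% for LSTMs and LSTMps and 45.5% for LSTMpas.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error: large misses count more than in MAE."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def relative_reduction(baseline, new):
    """Fraction by which the `new` error undercuts the `baseline` error."""
    return (baseline - new) / baseline


# MAE values (METs) copied from the table above
table_mae = {"LSTMp": 0.77, "LSTMs": 0.44, "LSTMps": 0.44, "LSTMpas": 0.42}
for name in ("LSTMs", "LSTMps", "LSTMpas"):
    cut = relative_reduction(table_mae["LSTMp"], table_mae[name])
    print(f"{name}: {cut:.1%} lower MAE than LSTMp")
```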

4. It Achieves 87.3% Classification Accuracy for Activity Intensity

Beyond continuous METs, the model classifies activity into four clinical categories:

- Sedentary (≤1.5 METs)

- Light (1.6 to <3.0)

- Moderate (3.0 to <6.0)

- Vigorous (≥6.0)
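A continuous MET estimate maps onto these four categories as shown in the minimal sketch below. It assumes that values between 1.5 and 1.6 count as light and that each boundary value belongs to the higher category (3.0 is moderate, 6.0 is vigorous). The study's exact tie-breaking may differ, and `met_category` is a hypothetical name:

```python
def met_category(met: float) -> str:
    """Map a MET value to an intensity class (boundary handling is an assumption)."""
    if met <= 1.5:
        return "sedentary"
    if met < 3.0:
        return "light"
    if met < 6.0:
        return "moderate"
    return "vigorous"


print([met_category(m) for m in (1.0, 2.4, 3.0, 7.5)])
# ['sedentary', 'light', 'moderate', 'vigorous']
```

Applying this function to the regression output is one simple way to turn a MET forecast into a class label.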

| MODEL | ACCURACY | BALANCED ACCURACY | F1-SCORE (%) |
|---|---|---|---|
| LSTMp | 74.93% | 64.14% | 47.08 |
| LSTMs | 86.68% | 71.46% | 63.47 |
| LSTMps | 87.32% | 72.59% | 67.89 |

LSTMps also achieved high specificity for the sedentary class (95.2%): of the samples that were not sedentary, it labeled only about 5% as sedentary. In other words, it rarely mistakes activity for rest, a critical safety feature.

⚠️ Why It Matters: Misclassifying light activity as sedentary could lead to over-insulinization and hypoglycemia. High sedentary specificity means this model largely avoids that trap.

5. It Uses Transfer Learning to Speed Up Training

Training deep LSTMs is slow and data-hungry. The solution? Transfer learning.

- Train the population-level LSTM first

- Transfer knowledge to individual models

- Fine-tune with personal data

This slashes training time and improves generalization—especially for users with limited data.

🧠 Analogy: Like teaching a new language by first learning grammar rules (population), then practicing with personal conversations (individual).
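The pretrain-then-fine-tune recipe can be illustrated without an LSTM. The sketch below substitutes a plain linear model trained by gradient descent on synthetic data, with all numbers invented for illustration. Weights learned on a large "population" set give a better starting point for a small "individual" set than starting from zeros, given the same small fine-tuning budget:

```python
import numpy as np


def mse(w, X, y):
    return float(np.mean((X @ w - y) ** 2))


def gradient_descent(w, X, y, lr=0.1, steps=100):
    """Plain full-batch gradient descent on mean squared error."""
    w = w.copy()
    for _ in range(steps):
        w -= lr * (2.0 / len(y)) * X.T @ (X @ w - y)
    return w


rng = np.random.default_rng(0)
# Synthetic "population" and "individual" tasks with similar (not identical) true weights
w_pop_true, w_ind_true = np.array([2.0, -1.0]), np.array([2.2, -0.8])
X_pop = rng.normal(size=(5000, 2))
y_pop = X_pop @ w_pop_true + 0.1 * rng.normal(size=5000)
X_ind = rng.normal(size=(50, 2))
y_ind = X_ind @ w_ind_true + 0.1 * rng.normal(size=50)

w_pop = gradient_descent(np.zeros(2), X_pop, y_pop, steps=200)     # 1. pretrain on population
w_transfer = gradient_descent(w_pop, X_ind, y_ind, steps=3)        # 2-3. transfer, then fine-tune
w_scratch = gradient_descent(np.zeros(2), X_ind, y_ind, steps=3)   # baseline: no transfer

print(f"fine-tuned: {mse(w_transfer, X_ind, y_ind):.4f}  "
      f"from scratch: {mse(w_scratch, X_ind, y_ind):.4f}")
```

In the hierarchical LSTM the same idea applies to the recurrent weights, with the benefit largest when individual data is scarce.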

6. It Integrates Seamlessly with Automated Insulin Delivery (AID) Systems

The model isn’t just predictive—it’s therapeutic. By feeding MET predictions into an AID system, insulin can be:

- Reduced during moderate exercise to prevent lows

- Increased post-vigorous activity to prevent rebound highs

As the authors state: “This model can be used to augment current AID systems to adapt insulin infusion according to the predicted activity intensity.”

🔄 Closed-Loop Potential: Imagine a future where your insulin pump automatically responds to your morning run—no manual input needed.

7. It Handles Data Gaps with Smart Segmentation

Real-world data is messy. Missing HR readings? Gaps in step count? The model handles this by:

- Segmenting time series into chunks

- Discarding short gaps (<1 hour)

- Training on continuous blocks

This ensures robustness in free-living conditions, not just lab settings.
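One way to implement this is sketched below under stated assumptions: missing readings are represented as `None` or NaN, the series is split at every missing reading, and segments shorter than one hour (4 samples at 15-minute resolution) are dropped. The study's exact rule may differ, and the function names are hypothetical:

```python
import math
from typing import List, Optional, Sequence


def is_missing(v: Optional[float]) -> bool:
    return v is None or (isinstance(v, float) and math.isnan(v))


def split_into_segments(values: Sequence[Optional[float]], min_len: int = 4) -> List[List[float]]:
    """Split a series at missing readings; keep runs of at least `min_len` samples."""
    segments: List[List[float]] = []
    current: List[float] = []
    for v in values:
        if is_missing(v):
            if len(current) >= min_len:
                segments.append(current)
            current = []
        else:
            current.append(v)
    if len(current) >= min_len:
        segments.append(current)
    return segments


def windows(segments: List[List[float]], seq_len: int) -> List[List[float]]:
    """Sliding windows built inside each segment, so no window crosses a gap."""
    return [seg[i:i + seq_len] for seg in segments for i in range(len(seg) - seq_len + 1)]


hr = [70, 72, None, 80, 95, 110, 104, float("nan"), 90]
segs = split_into_segments(hr)
print(segs)                      # [[80, 95, 110, 104]]
print(windows(segs, seq_len=2))  # [[80, 95], [95, 110], [110, 104]]
```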

🔬 How the Model Works: The Math Behind the Magic

At its core, the model uses Long Short-Term Memory (LSTM) networks—ideal for time-series forecasting.

The LSTM Cell: A Memory Unit for Time

Each LSTM cell updates its state using gates that control information flow:

$$
\begin{aligned}
f_k &= \sigma(W_f u_k + U_f h_{k-1} + b_f) && \text{(Forget Gate)} \\
i_k &= \sigma(W_i u_k + U_i h_{k-1} + b_i) && \text{(Input Gate)} \\
\tilde{c}_k &= \tanh(W_{\tilde{c}} u_k + U_{\tilde{c}} h_{k-1} + b_{\tilde{c}}) && \text{(Candidate Cell State)} \\
c_k &= f_k \odot c_{k-1} + i_k \odot \tilde{c}_k && \text{(Update Cell State)} \\
o_k &= \sigma(W_o u_k + U_o h_{k-1} + b_o) && \text{(Output Gate)} \\
h_k &= o_k \odot \tanh(c_k) && \text{(Hidden State)}
\end{aligned}
$$

Where:

- $u_k$: Input at step $k$ (HR, SC)

- $h_k$: Hidden state

- $c_k$: Cell state

- $\sigma$: Sigmoid activation

- $\odot$: Element-wise product

- $W$, $U$, $b$: Learnable input weights, recurrent weights, and biases
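The gate equations translate almost line for line into NumPy. Below is a sketch of the forward step with randomly initialized (untrained) weights, run over one 2-hour window of standardized inputs. `lstm_step` and `init_params` are illustrative names, not the study's code:

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def lstm_step(u_k, h_prev, c_prev, params):
    """One LSTM step; params maps gate key ('f', 'i', 'c', 'o') to (W, U, b)."""
    def pre_activation(gate):
        W, U, b = params[gate]
        return W @ u_k + U @ h_prev + b

    f_k = sigmoid(pre_activation("f"))       # forget gate
    i_k = sigmoid(pre_activation("i"))       # input gate
    c_tilde = np.tanh(pre_activation("c"))   # candidate cell state
    c_k = f_k * c_prev + i_k * c_tilde       # element-wise cell state update
    o_k = sigmoid(pre_activation("o"))       # output gate
    h_k = o_k * np.tanh(c_k)                 # hidden state
    return h_k, c_k


def init_params(n_in, n_hidden, rng):
    """Small random weights and zero biases for each gate (untrained)."""
    return {gate: (rng.normal(scale=0.1, size=(n_hidden, n_in)),
                   rng.normal(scale=0.1, size=(n_hidden, n_hidden)),
                   np.zeros(n_hidden))
            for gate in "fico"}


rng = np.random.default_rng(0)
params = init_params(n_in=2, n_hidden=4, rng=rng)
h, c = np.zeros(4), np.zeros(4)
for u_k in rng.normal(size=(8, 2)):   # 8 steps x 15 min = 2 h of standardized (HR, SC)
    h, c = lstm_step(u_k, h, c, params)
print(h)
```

Because $o_k \in (0, 1)$ and $\tanh$ is bounded, every hidden-state entry stays strictly between -1 and 1.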

Hierarchical Forecasting: Stacking LSTMs

The model stacks LSTMs in a top-down fashion:

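$$\text{LSTM}_{ps}: \begin{cases} X_{k}^{ps} = \Phi(X_{k-1}^{ps}, u_k, W^{ps}, U^{ps}, b^{ps}, y_k^p) \\ y_k^{ps} = \gamma(X_k^{ps}, W_y^{ps}, b_y^{ps}) \end{cases}$$

Here, $X_k^{ps}$ is the subject-level LSTM state, $\Phi$ is the state update defined by the gate equations above, and $\gamma$ is the output layer. The population-level prediction $y_k^p$ is fed as an additional input to the subject-level LSTM, enabling knowledge transfer.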
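Feeding $y_k^p$ into $\Phi$ can be realized by appending the population model's prediction as an extra input channel at every time step. The minimal NumPy sketch below assumes the population predictions are already available for each step of the window; the function and array names are hypothetical:

```python
import numpy as np


def augment_with_upper_prediction(u: np.ndarray, y_upper: np.ndarray) -> np.ndarray:
    """Append the upper-level prediction as an extra feature column.

    u:       (T, n_features) subject inputs, e.g. HR and SC per 15-min step
    y_upper: (T,) upper-level (population) MET predictions for the same steps
    returns: (T, n_features + 1) input sequence for the subject-level LSTM
    """
    if u.shape[0] != y_upper.shape[0]:
        raise ValueError("inputs and upper-level predictions must be aligned in time")
    return np.concatenate([u, y_upper.reshape(-1, 1)], axis=1)


u = np.array([[72.0, 0.0], [95.0, 310.0], [118.0, 1450.0]])  # HR (bpm), SC (steps)
y_p = np.array([1.1, 2.8, 5.2])                               # population METs
x_sub = augment_with_upper_prediction(u, y_p)
print(x_sub.shape)  # (3, 3)
```

This follows the equation more literally than the Keras code at the end of this article, which concatenates the upper-level prediction after the LSTM layer instead.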

📊 Real-World Performance: By the Numbers

The dataset included:

- 33 adults with T1D

- 4 weeks of real-world data

- Garmin Vivosmart 3 for HR, SC, and METs

After preprocessing:

- 70,583 samples (population)

- 25,047 samples (aerobic activity)

- 2,472 samples (individual)

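The per-class results show the cost of data imbalance (most samples are sedentary or light activity): the model performs well on sedentary and light activity but struggles to detect moderate and vigorous intensity: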

| CATEGORY | ACCURACY | PRECISION | RECALL | F1-SCORE |
|---|---|---|---|---|
| Sedentary | 86.16% | 93.63% | 73.28% | 81.63% |
| Light | 75.13% | 64.04% | 92.11% | 74.70% |
| Moderate | 88.06% | 58.12% | 17.35% | 33.69% |
| Vigorous | 99.95% | – | 0% | – |

⚠️ Limitation: Only 2 vigorous samples in the test set, highlighting the need for more diverse real-world data.
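How can the vigorous class show 99.95% accuracy with 0% recall? Per-class accuracy here is presumably a one-vs-rest figure, so a rare class inherits a huge pool of true negatives. The sketch below uses a made-up confusion matrix (not the study's data) to show the effect; `one_vs_rest` is a hypothetical helper, and `None` stands in for the table's "–":

```python
from typing import List, Optional


def one_vs_rest(cm: List[List[int]], k: int) -> dict:
    """Metrics for class k; rows are true classes, columns are predictions."""
    n = sum(sum(row) for row in cm)
    tp = cm[k][k]
    fn = sum(cm[k]) - tp
    fp = sum(row[k] for row in cm) - tp
    tn = n - tp - fn - fp

    def ratio(a: int, b: int) -> Optional[float]:
        return a / b if b else None  # undefined when the denominator is zero

    precision, recall = ratio(tp, tp + fp), ratio(tp, tp + fn)
    f1 = None
    if precision is not None and recall is not None and precision + recall > 0:
        f1 = 2 * precision * recall / (precision + recall)
    return {"accuracy": (tp + tn) / n, "precision": precision, "recall": recall,
            "specificity": ratio(tn, tn + fp), "f1": f1}


# Hypothetical counts; class order: sedentary, light, moderate, vigorous
cm = [[900, 100, 0, 0],
      [50, 800, 50, 0],
      [0, 100, 40, 0],
      [0, 0, 2, 0]]
print(one_vs_rest(cm, 3))  # both vigorous samples missed, yet accuracy > 99.9%
```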

🚀 Why This Changes Everything for Diabetes Management

This isn’t just another AI paper. It’s a blueprint for the future of personalized diabetes care.

For Patients:

- Potentially fewer hypoglycemic episodes

- Smarter insulin dosing

- Confidence to exercise freely

For Developers:

- Open architecture for AID integration

- Proven use of transfer learning

- Framework for multi-level modeling

For Researchers:

- Validates hierarchical forecasting in healthcare

- Highlights importance of T1D-specific models

- Sets benchmark for real-world EE estimation

🔮 What’s Next? The Future of AI in Diabetes Care

The authors plan to:

- Test on all 33 participants (not just one)

- Explore GRU and Transformer architectures

- Incorporate continuous glucose monitor (CGM) data

- Expand to other activity types (e.g., resistance training)

🌐 Vision: A fully adaptive, AI-powered artificial pancreas that learns your body, predicts your needs, and keeps you safe—24/7.

✅ Final Verdict: A Triumph of Personalized AI

Most AI health models fail because they’re too generic. This one succeeds because it’s smartly structured.

By combining:

- Hierarchical design

- Transfer learning

- Real-world validation

- Clinical relevance

…it delivers precision, safety, and scalability—the holy trinity of medical AI.


💬 Call to Action: Join the Diabetes Tech Revolution

Are you a developer, clinician, or patient passionate about smarter diabetes care?

👉 Download the full study here

👉 Follow the research team at Sansum Diabetes Research Institute

👉 Share this breakthrough with your healthcare provider

The future of diabetes management isn’t just automated—it’s intelligent, adaptive, and personal. And it’s already here.

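Below is a minimal, self-contained Python sketch (TensorFlow 2.x / Keras) of the hierarchical LSTM architecture. It assumes hypothetical CSV files (`data/population.csv`, `data/aerobic.csv`, `data/subject.csv`) with `HR`, `SC`, and `MET` columns at 15-minute resolution.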

```python
# 1. Imports & constants
import os

import numpy as np
import pandas as pd
import tensorflow as tf
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import MinMaxScaler
from tensorflow.keras import callbacks, layers, losses, metrics, models, optimizers

SEED = 42
np.random.seed(SEED)
tf.random.set_seed(SEED)

# Tunable hyper-parameters (grid-search later)
HIDDEN_UNITS = 64
LEARNING_RATE = 5e-3
BATCH_SIZE = 32
EPOCHS = 250          # early stopping usually halts training much earlier
SEQ_LEN = 8           # 8 x 15 min = 2 h look-back
STEP = 1              # dense sliding window
FEATURES = ["HR", "SC"]
TARGET = "MET"
CKPT_DIR = "ckpt"


# 2. Data helpers
def load_and_window(path: str, scaler=None, seq_len: int = SEQ_LEN, step: int = STEP):
    """Load a CSV with HR, SC and MET columns and cut it into sliding windows.

    Assumes one contiguous, gap-free record per file. Pass the scaler fitted on
    the population data so that every level sees inputs on the same scale.
    """
    values = pd.read_csv(path)[FEATURES + [TARGET]].values
    if scaler is None:
        scaler = MinMaxScaler().fit(values)
    data = scaler.transform(values)
    X, y = [], []
    for i in range(0, len(data) - seq_len + 1, step):  # +1 keeps the final window
        X.append(data[i:i + seq_len, :-1])    # HR & SC over the look-back window
        y.append(data[i + seq_len - 1, -1])   # MET at the last step of the window
    X = np.array(X, dtype="float32")
    y = np.array(y, dtype="float32").reshape(-1, 1)  # matches the (batch, 1) output
    return X, y, scaler


def build_tf_dataset(X, y, batch=BATCH_SIZE, shuffle=True):
    # Multi-input models need a tuple of inputs; a list would be stacked into one tensor
    if isinstance(X, list):
        X = tuple(X)
    ds = tf.data.Dataset.from_tensor_slices((X, y))
    if shuffle:
        ds = ds.shuffle(1024, seed=SEED)
    return ds.batch(batch).prefetch(tf.data.AUTOTUNE)


def compile_model(model):
    model.compile(optimizer=optimizers.Adam(LEARNING_RATE),
                  loss=losses.MeanSquaredError(),
                  metrics=[metrics.MeanAbsoluteError()])
    return model


# 3. Single-level LSTM factory
def build_single_lstm(name: str, input_dim: int = len(FEATURES)):
    """Return a compiled LSTM for one hierarchy level."""
    inp = layers.Input(shape=(SEQ_LEN, input_dim), name=f"{name}_inp")
    x = layers.LSTM(HIDDEN_UNITS, name=f"{name}_lstm")(inp)
    out = layers.Dense(1, activation="linear", name=f"{name}_out")(x)
    return compile_model(models.Model(inp, out, name=name))


# 4. Hierarchical model builder
def build_hierarchical(levels: list[str], transfer_from=None):
    """Build the lowest model of a 2- or 3-level hierarchy.

    levels: ["population", "subject"]             -> LSTMps
            ["population", "activity", "subject"] -> LSTMpas
    Inputs are [raw HR/SC window, prediction of the level directly above].
    transfer_from: optional upper-level model whose LSTM weights initialise
    this level's LSTM (transfer learning), before fine-tuning on local data.
    Simplification: the upper-level prediction is concatenated after the LSTM
    rather than fed into the recurrent state update.
    """
    assert len(levels) in (2, 3), "Only 2- or 3-level architectures supported"
    name = "_".join(levels)
    raw = layers.Input(shape=(SEQ_LEN, len(FEATURES)), name=f"{name}_raw")
    upper = layers.Input(shape=(1,), name=f"{name}_upper")
    lstm = layers.LSTM(HIDDEN_UNITS, name=f"{name}_lstm")
    x = layers.Concatenate(name=f"{name}_concat")([lstm(raw), upper])
    out = layers.Dense(1, activation="linear", name=f"{name}_out")(x)
    model = compile_model(models.Model([raw, upper], out, name=name))
    if transfer_from is not None:
        src = next(layer for layer in transfer_from.layers
                   if isinstance(layer, layers.LSTM))
        lstm.set_weights(src.get_weights())  # same shapes: 2 inputs -> 64 units
    return model


# 5. Training / evaluation helpers
def fit_level(model, inputs, y, ckpt_name: str, val_frac: float = 0.2):
    """Train on the earliest data, validate on the most recent (no shuffling)."""
    arrays = inputs if isinstance(inputs, list) else [inputs]
    parts = train_test_split(*arrays, y, test_size=val_frac, shuffle=False)
    train, val = parts[0::2], parts[1::2]

    def as_inputs(xs):
        return xs[0] if len(xs) == 1 else list(xs)

    train_ds = build_tf_dataset(as_inputs(train[:-1]), train[-1])
    val_ds = build_tf_dataset(as_inputs(val[:-1]), val[-1], shuffle=False)
    stopper = callbacks.EarlyStopping(monitor="val_loss", patience=15,
                                      restore_best_weights=True)
    model.fit(train_ds, validation_data=val_ds, epochs=EPOCHS,
              callbacks=[stopper], verbose=1)
    model.save_weights(os.path.join(CKPT_DIR, f"{ckpt_name}.weights.h5"))
    _, val_mae = model.evaluate(val_ds, verbose=0)
    return val_mae


# 6. Training / evaluation script
if __name__ == "__main__":
    os.makedirs(CKPT_DIR, exist_ok=True)

    # ---- 1. Load datasets (one scaler, fitted on the population data) ------
    X_pop, y_pop, scaler = load_and_window("data/population.csv")
    X_act, y_act, _ = load_and_window("data/aerobic.csv", scaler)
    X_sub, y_sub, _ = load_and_window("data/subject.csv", scaler)
    met_range = scaler.data_range_[-1]  # rescales MAE from [0, 1] back to METs

    def report(tag, scaled_mae):
        print(f"{tag:8s} validation MAE: {scaled_mae * met_range:.2f} METs")

    # ---- 2. Population level (LSTMp) ----------------------------------------
    pop_model = build_single_lstm("population")
    report("LSTMp", fit_level(pop_model, X_pop, y_pop, "population"))

    # ---- 3. Activity level (fed population predictions) ---------------------
    pred_pop_act = pop_model.predict(X_act, batch_size=BATCH_SIZE, verbose=0)
    act_model = build_hierarchical(["population", "activity"], transfer_from=pop_model)
    report("LSTMpa", fit_level(act_model, [X_act, pred_pop_act], y_act,
                               "population_activity"))

    # ---- 4. Subject level, LSTMps (transfer from population) ----------------
    pred_pop_sub = pop_model.predict(X_sub, batch_size=BATCH_SIZE, verbose=0)
    sub_model = build_hierarchical(["population", "subject"], transfer_from=pop_model)
    report("LSTMps", fit_level(sub_model, [X_sub, pred_pop_sub], y_sub,
                               "population_subject"))

    # ---- 5. Optional 3-level LSTMpas ----------------------------------------
    pred_act_sub = act_model.predict([X_sub, pred_pop_sub],
                                     batch_size=BATCH_SIZE, verbose=0)
    pas_model = build_hierarchical(["population", "activity", "subject"],
                                   transfer_from=act_model)
    report("LSTMpas", fit_level(pas_model, [X_sub, pred_act_sub], y_sub,
                                "population_activity_subject"))

    print("Training complete; checkpoints saved.")
```