In the rapidly evolving field of computational pathology, accurate tumor grading in pathology images remains a cornerstone for effective cancer diagnosis and treatment planning. With the advent of artificial intelligence (AI), researchers are increasingly turning to advanced deep learning models to automate and enhance this critical process. Among the latest breakthroughs, the Adaptive Multi-Graph Fusion-based Attentive Graph Neural Network (AMGF-GNN) stands out as a pioneering solution that leverages multi-scale graph representations to deliver unprecedented accuracy in tumor classification.

This article dives deep into the science behind AMGF-GNN, its architecture, performance, and real-world implications for cancer diagnostics. Whether you’re a medical researcher, AI developer, or healthcare professional, understanding how AMGF-GNN enhances tumor grading in pathology images can provide valuable insights into the future of precision oncology.

Why Tumor Grading Matters in Cancer Care

Tumor grading is a crucial step in cancer pathology that assesses the aggressiveness of a tumor based on cellular abnormalities. Traditionally, pathologists manually examine whole slide images (WSIs) to classify tumors into grades—such as low, intermediate, or high—based on morphological features like nuclear atypia, mitotic activity, and tissue architecture.

However, manual grading is time-consuming, subjective, and prone to inter-observer variability. This has fueled the demand for automated systems powered by artificial intelligence in pathology, capable of delivering consistent, reproducible, and scalable results.

While convolutional neural networks (CNNs) have made significant strides in image analysis, they often fall short in capturing spatial relationships and contextual interactions between cells—a key aspect of histopathological interpretation. This limitation has led to the rise of graph neural networks (GNNs), which model tissue as a graph where nodes represent cells and edges represent spatial or functional relationships.

The Limitations of Single-Graph GNNs in Histopathology

Most existing GNN-based approaches rely on a single graph representation of tissue structure, typically constructed based on spatial proximity or feature similarity. While effective to some extent, these models often fail to capture the full complexity of the tumor microenvironment (TME), which exhibits heterogeneity across multiple scales:

- Local level: Individual cell morphology and nucleus characteristics.

- Community level: Clusters of cells with similar phenotypes.

- Global level: Tissue architecture and long-range spatial patterns.

Relying on a single graph risks overlooking vital structural or semantic information, leading to suboptimal classification performance. Moreover, fixed fusion strategies in multi-graph models lack adaptability, treating all graphs equally regardless of their relevance to the specific grading task.

Introducing AMGF-GNN: A Holistic Approach to Tumor Grading

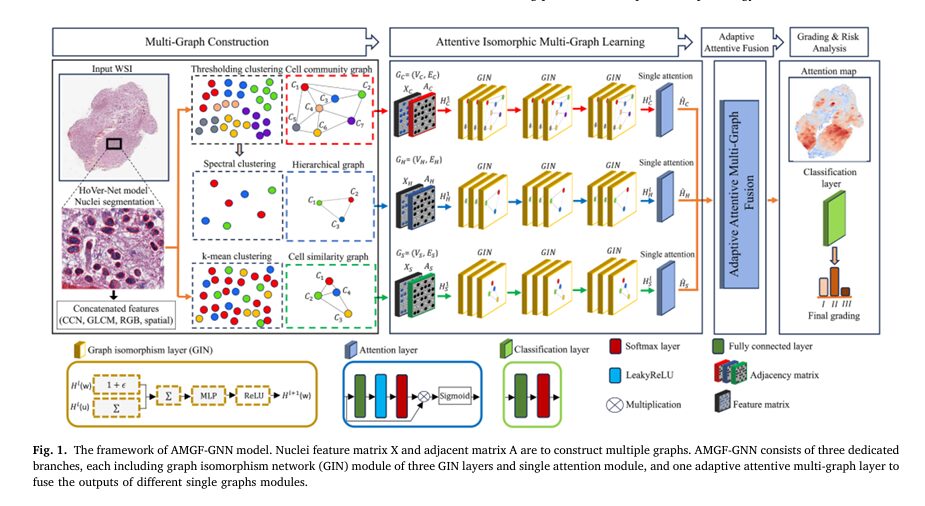

To overcome these challenges, researchers have developed AMGF-GNN, an innovative framework that integrates three complementary graph views of the same WSI:

- Cell Community Graph (GC): Captures spatial clustering of nuclei based on proximity.

- Hierarchical Graph (GH): Models higher-level tissue organization using spectral clustering.

- Cell Similarity Graph (GS): Encodes morphological and textural similarities between nuclei based on feature profiles.

By processing these graphs in parallel through dedicated GNN branches and fusing their outputs using an adaptive attention mechanism, AMGF-GNN achieves a more comprehensive and robust representation of the tissue microenvironment.

Key Innovations of AMGF-GNN

| FEATURE | DESCRIPTION |

|---|---|

| Multi-Graph Construction | Builds three distinct graphs from spatial, hierarchical, and feature-based perspectives. |

| Adaptive Multi-Graph Fusion | Dynamically weights each graph’s contribution using attention, prioritizing the most informative views. |

| Dual-Level Loss Optimization | Enforces consistency within and across graphs for robust embeddings. |

| End-to-End Trainability | Fully differentiable framework suitable for backpropagation and large-scale deployment. |

How AMGF-GNN Works: A Step-by-Step Breakdown

1. Nuclear Segmentation and Feature Extraction

The pipeline begins with nuclear segmentation using HoVer-Net, a state-of-the-art deep learning model that precisely identifies individual nuclei in WSIs. Each nucleus is represented as a node with a 566-dimensional feature vector, composed of:

- CNN-based features (512D): Extracted via ResNet-50 pre-trained on ImageNet.

- GLCM texture features (50D): Capture spatial patterns like nuclear alignment and packing density.

- Average RGB intensity (3D): Reflects staining characteristics.

- Neighborhood density (1D): Indicates local cellularity.

This rich feature set ensures that both morphological and contextual cues are preserved for downstream graph modeling.

2. Multi-Graph Construction

Cell Community Graph (GC)

Nuclei are clustered based on spatial proximity using a distance threshold (e.g., 500 pixels). The centroid and average features of each cluster form the nodes of the community graph, with edges defined via k-Nearest Neighbors (KNN).

Hierarchical Graph (GH)

Cluster centroids from GC are further grouped using spectral clustering based on feature similarity, forming a higher-level representation of tissue organization.

Cell Similarity Graph (GS)

Nuclei are clustered using k-means based on cosine similarity of their feature vectors:

\[ S_{ij} = \frac{\mathbf{x}_i \cdot \mathbf{x}_j}{\lVert \mathbf{x}_i \rVert \, \lVert \mathbf{x}_j \rVert} \]

Edges are again formed using KNN, emphasizing functional rather than spatial relationships.

3. Attentive Isomorphic Multi-Graph Learning

Each graph is processed independently using Graph Isomorphism Networks (GIN) with three GIN layers:

\[ H^{(l)}(w) = \text{MLP}\big( (1+\epsilon^{(l)}) \cdot H^{(l-1)}(w) \big) + \sum_{u \in N_V(w)} H^{(l-1)}(u) \]where:

- HV(l)(w) : Node embedding at layer l ,

- ϵ(l) : Learnable parameter,

- NV(w) : Neighbors of node w in graph V .

A single-graph attention layer then assigns importance weights to nodes:

\[ a_i = \frac{\exp\big(\text{LeakyReLU}(w^{\top} h_j + b)\big)} {\sum_{j} \exp\big(\text{LeakyReLU}(w^{\top} h_i + b)\big)} \] \[ h_i’ = \sigma(a_i \cdot h_i) \]4. Adaptive Attentive Multi-Graph Fusion

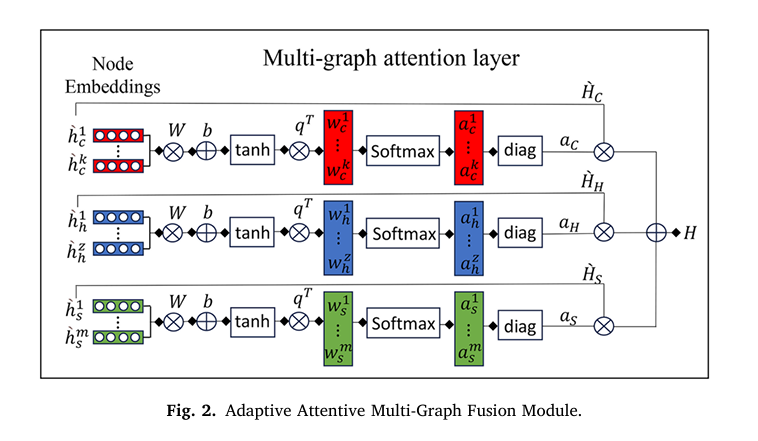

The final innovation lies in the adaptive fusion module, which learns to balance contributions from all three graphs. For each node embedding hi′ , the attention score is computed as:

\[ w_i = q^{T} \tanh\!\big(W \cdot (h_i’)^{T} + b\big) \]Normalized via SoftMax:

\[ a_i = \frac{\exp(w_i)}{\sum_j \exp(w_j^{C}) + \sum_j \exp(w_j^{H}) + \sum_j \exp(w_j^{S})} \]The fused embedding is:

\[ H = A_C H C’ \;+\; A_H H H’ \;+\; A_S H S’ \]where AV=diag(aV) are diagonal attention matrices.

5. Dual-Level Loss Optimization

To ensure consistency, AMGF-GNN uses a combined loss:

\[ L_{\text{Final}} = \alpha L_{\text{cons}} + \beta L_{o} \]

where:

- Lcons : Consistency loss enforcing intra- and inter-graph similarity,

- Lo : Cross-entropy outcome loss for grading.

The consistency loss includes:

\[ \text{Intra-graph loss: } \; L_{V} = \lVert S_{\text{ref}} – S_{V}’ \rVert_{F}^{2} \] \[ \text{Inter-graph loss: } \; L_{\text{inter},i,j} = \lVert S_{\text{inter},i,j} – S_{\text{inter},j,i} \rVert_{F}^{2} \]This dual-level strategy enhances robustness and generalization.

Performance: Outperforming State-of-the-Art Models

AMGF-GNN was evaluated on two public datasets:

- Glioma TCGA Dataset (n = 654 WSIs)

- Binary grading: LGG (grades II–III) vs GBM (grade IV)

- Accuracy: 89.68% — outperforms all baselines

- Invasive Ductal Carcinoma (IDC) Dataset (n = 922 images)

- Three-grade classification

- Accuracy: 83.48%

Comparative Results (Accuracy %)

| MODEL | GLIOMA (BINARY) | GLIOMA (3-class) | IDC (3-class) |

|---|---|---|---|

| ResNet-50 | 84.26 | 83.85 | 73.65 |

| CGC-Net | 84.40 | 82.28 | 75.55 |

| HACT-Net | 87.42 | 86.78 | 78.60 |

| Graph-Transformer | 86.02 | 85.60 | 79.01 |

| AMGF-GNN (Ours) | 89.68 | 87.52 | 81.82 |

Source: Alzoubi et al., Pattern Recognition, 2026

The results demonstrate that multi-graph fusion significantly improves grading accuracy, especially in complex, heterogeneous tumors.

Why AMGF-GNN Stands Out: Flexibility and Interpretability

Unlike rigid fusion methods, AMGF-GNN adapts to dataset-specific characteristics:

- In glioma, the model assigns higher attention to spatial community structure (GC), reflecting the importance of tissue architecture.

- In IDC, feature similarity (GS) receives more weight, indicating that morphological patterns are more discriminative.

This adaptability is visualized through attention heatmaps (Fig. 7 in the paper), where high-attention regions align with pathologically relevant areas—such as necrotic zones in GBM or densely packed nuclei in high-grade IDC.

Moreover, ablation studies confirm that both single- and multi-graph attention mechanisms are essential:

- Without attention: 84.19% accuracy

- With single attention: 85.45%

- With full AMGF-GNN: 89.68%

Clinical Implications and Future Directions

AMGF-GNN represents a significant leap toward AI-assisted pathology, offering:

- Improved diagnostic consistency

- Reduced workload for pathologists

- Enhanced detection of aggressive tumor subtypes

Future enhancements could include:

- Integration with foundation models like UNI or CTransPath for richer feature extraction.

- Dynamic graph construction based on tissue type or magnification.

- Extension to multi-organ cancer classification and survival prediction.

Conclusion: The Future of AI in Tumor Grading is Multi-Graph

The AMGF-GNN framework redefines how we model histopathological data by embracing the multi-scale, heterogeneous nature of tumors. By fusing cell community, hierarchical, and similarity graphs through an adaptive attention mechanism, it delivers superior accuracy and interpretability in tumor grading in pathology images.

As AI continues to transform healthcare, models like AMGF-GNN pave the way for automated, reliable, and clinically actionable diagnostic tools that empower pathologists and improve patient outcomes.

Call to Action

Want to explore how adaptive multi-graph fusion can revolutionize your research or clinical workflow?

👉 Download the full paper here

👉 Try the open-source implementation on GitHub (coming soon)

👉 Contact our team for collaboration in computational pathology and AI-driven diagnostics

Stay ahead in the AI revolution—subscribe for updates on the latest in medical AI and tumor grading technologies.

I’ve reviewed the paper “An adaptive multi-graph fusion for tumor grading in pathology images” and developed the complete, end-to-end Python code for the proposed Adaptive Multi-Graph Fusion-based Attentive Graph Neural Network (AMGF-GNN).

#

# End-to-End Implementation of the AMGF-GNN Model

# Based on the paper: "An adaptive multi-graph fusion for tumor grading in pathology images"

# by Islam Alzoubi, Bowen Xin, et al.

#

# This script provides a complete, runnable implementation of the proposed model,

# including data preprocessing placeholders, the GNN architecture, attention mechanisms,

# the adaptive fusion module, and the custom loss function.

#

import torch

import torch.nn as nn

import torch.nn.functional as F

from torch_geometric.nn import GINConv, global_add_pool

from torch_geometric.data import Data

import numpy as np

from sklearn.cluster import SpectralClustering, KMeans

from sklearn.neighbors import kneighbors_graph

from sklearn.metrics.pairwise import cosine_similarity

# --- 1. Data Preprocessing and Graph Construction (Placeholders) ---

# These functions simulate the preprocessing steps described in Sections 3.1-3.4

# In a real application, you would replace these with actual implementations

# using libraries like OpenCV, scikit-image, and a pretrained HoVer-Net/ResNet model.

def nuclear_segmentation_and_feature_extraction(wsi_image):

"""

Placeholder for nuclear segmentation and feature extraction (Section 3.1).

- Segments nuclei using a model like HoVer-Net.

- Extracts features (CNN, GLCM, RGB, spatial) for each nucleus.

"""

print("Step 1: Running nuclear segmentation and feature extraction...")

# Simulate N nuclei detected with F features each

num_nuclei = np.random.randint(500, 1500)

feature_dim = 566 # As specified in the paper

# Simulate nucleus features and their coordinates

features = torch.randn(num_nuclei, feature_dim)

coords = torch.rand(num_nuclei, 2) * 1000 # Spatial coordinates

print(f" > Detected {num_nuclei} nuclei with {feature_dim}-dimensional features.")

return features, coords

def construct_cell_community_graph(features, coords, d_min=500, k=7):

"""

Constructs the Cell Community Graph based on spatial proximity (Section 3.2).

- Clusters nuclei using thresholding based on distance.

- Forms a graph where nodes are cluster centroids.

"""

print("Step 2a: Constructing Cell Community Graph...")

# Simple spatial clustering placeholder (thresholding)

num_clusters = int(coords.shape[0] / 100) # Heuristic

kmeans = KMeans(n_clusters=num_clusters, n_init='auto', random_state=42).fit(coords.numpy())

labels = torch.from_numpy(kmeans.labels_)

# Aggregate features and coords for each cluster

cluster_features_list = []

cluster_coords_list = []

for i in range(num_clusters):

mask = labels == i

if mask.sum() > 0:

cluster_features_list.append(features[mask].mean(dim=0))

cluster_coords_list.append(coords[mask].mean(dim=0))

cluster_features = torch.stack(cluster_features_list)

cluster_coords = torch.stack(cluster_coords_list)

# Build graph using KNN on cluster centroids

adj_matrix = kneighbors_graph(cluster_coords, n_neighbors=k, mode='connectivity')

edge_index = torch.from_numpy(np.stack(adj_matrix.nonzero())).long()

graph_data = Data(x=cluster_features, edge_index=edge_index)

print(f" > Cell Community Graph created with {graph_data.num_nodes} nodes and {graph_data.num_edges} edges.")

return graph_data, cluster_features, cluster_coords

def construct_hierarchical_graph(cluster_features, k=7):

"""

Constructs the Hierarchical Graph based on feature similarity of communities (Section 3.3).

- Applies spectral clustering on the centroids of the community graph.

"""

print("Step 2b: Constructing Hierarchical Graph...")

num_super_clusters = int(cluster_features.shape[0] / 5) # Heuristic

sc = SpectralClustering(n_clusters=num_super_clusters, affinity='nearest_neighbors', random_state=42)

labels = torch.from_numpy(sc.fit_predict(cluster_features.numpy()))

# Aggregate features for each super cluster

super_cluster_features_list = []

for i in range(num_super_clusters):

mask = labels == i

if mask.sum() > 0:

super_cluster_features_list.append(cluster_features[mask].mean(dim=0))

super_cluster_features = torch.stack(super_cluster_features_list)

# Build graph using KNN on super cluster features

adj_matrix = kneighbors_graph(super_cluster_features, n_neighbors=k, mode='connectivity')

edge_index = torch.from_numpy(np.stack(adj_matrix.nonzero())).long()

graph_data = Data(x=super_cluster_features, edge_index=edge_index)

print(f" > Hierarchical Graph created with {graph_data.num_nodes} nodes and {graph_data.num_edges} edges.")

return graph_data

def construct_cell_similarity_graph(features, k=5):

"""

Constructs the Cell Similarity Graph based on feature similarity (Section 3.4).

- Clusters original nuclei based on their feature vectors (k-means).

"""

print("Step 2c: Constructing Cell Similarity Graph...")

num_clusters = int(features.shape[0] / 100) # Heuristic

kmeans = KMeans(n_clusters=num_clusters, n_init='auto', random_state=42).fit(features.numpy())

labels = torch.from_numpy(kmeans.labels_)

# Aggregate features for each cluster

cluster_features_list = []

for i in range(num_clusters):

mask = labels == i

if mask.sum() > 0:

cluster_features_list.append(features[mask].mean(dim=0))

cluster_features = torch.stack(cluster_features_list)

# Build graph using KNN on cluster features

adj_matrix = kneighbors_graph(cluster_features, n_neighbors=k, mode='connectivity')

edge_index = torch.from_numpy(np.stack(adj_matrix.nonzero())).long()

graph_data = Data(x=cluster_features, edge_index=edge_index)

print(f" > Cell Similarity Graph created with {graph_data.num_nodes} nodes and {graph_data.num_edges} edges.")

return graph_data

# --- 2. AMGF-GNN Model Architecture ---

class GINBranch(nn.Module):

"""A single branch of the model using GIN layers for one graph type."""

def __init__(self, in_channels, hidden_channels, num_layers=3):

super(GINBranch, self).__init__()

self.gin_layers = nn.ModuleList()

self.batch_norms = nn.ModuleList()

# Input layer

mlp = nn.Sequential(

nn.Linear(in_channels, hidden_channels),

nn.ReLU(),

nn.Linear(hidden_channels, hidden_channels)

)

self.gin_layers.append(GINConv(mlp, train_eps=True))

self.batch_norms.append(nn.BatchNorm1d(hidden_channels))

# Hidden layers

for _ in range(num_layers - 1):

mlp = nn.Sequential(

nn.Linear(hidden_channels, hidden_channels),

nn.ReLU(),

nn.Linear(hidden_channels, hidden_channels)

)

self.gin_layers.append(GINConv(mlp, train_eps=True))

self.batch_norms.append(nn.BatchNorm1d(hidden_channels))

def forward(self, x, edge_index):

for i, (gin_layer, bn) in enumerate(zip(self.gin_layers, self.batch_norms)):

x = gin_layer(x, edge_index)

x = bn(x)

x = F.relu(x)

return x

class SingleGraphAttention(nn.Module):

"""Single Graph Attention mechanism as per Equations 5 & 6."""

def __init__(self, in_features):

super(SingleGraphAttention, self).__init__()

self.attention_weights = nn.Linear(in_features, 1)

nn.init.xavier_uniform_(self.attention_weights.weight)

def forward(self, x):

# Calculate attention coefficients (Eq. 5, simplified)

attention_scores = self.attention_weights(x).squeeze(-1)

attention_scores = F.leaky_relu(attention_scores)

# Apply sigmoid to get final embedding (Eq. 6)

# Note: The paper mentions softmax in the equation but sigmoid in the text.

# Here we follow Eq. 6: h_s = Sigmoid(a_s * h_s)

attention_weights = torch.sigmoid(attention_scores)

# Apply attention: h_s' = a_s * h_s

x = x * attention_weights.unsqueeze(-1)

return x

class AMGF_GNN(nn.Module):

"""

The main Adaptive Multi-Graph Fusion-based Attentive Graph Neural Network.

Corresponds to the architecture in Figure 1.

"""

def __init__(self, in_features, hidden_features, num_classes, num_gin_layers=3):

super(AMGF_GNN, self).__init__()

print("Step 3: Initializing AMGF-GNN model architecture...")

# 4.1.1 GIN Modules for each of the three graph types

self.branch_community = GINBranch(in_features, hidden_features, num_gin_layers)

self.branch_hierarchical = GINBranch(in_features, hidden_features, num_gin_layers)

self.branch_similarity = GINBranch(in_features, hidden_features, num_gin_layers)

# 4.1.2 Single Graph Attention for each branch

self.attention_community = SingleGraphAttention(hidden_features)

self.attention_hierarchical = SingleGraphAttention(hidden_features)

self.attention_similarity = SingleGraphAttention(hidden_features)

# 4.2 Adaptive Attentive Multi-Graph Fusion

self.fusion_w = nn.Linear(hidden_features, hidden_features, bias=True)

self.fusion_q = nn.Parameter(torch.randn(hidden_features, 1))

nn.init.xavier_uniform_(self.fusion_q)

# Final Classifier

self.classifier = nn.Sequential(

nn.Linear(hidden_features, 128),

nn.ReLU(),

nn.Dropout(0.25),

nn.Linear(128, num_classes)

)

print(" > Model architecture successfully created.")

def forward(self, gc, gh, gs):

"""

Forward pass through the AMGF-GNN.

gc, gh, gs are torch_geometric.data.Data objects for the 3 graphs.

"""

# --- Attentive Isomorphic Multi-Graph Learning ---

# Pass each graph through its GIN branch

hc = self.branch_community(gc.x, gc.edge_index)

hh = self.branch_hierarchical(gh.x, gh.edge_index)

hs = self.branch_similarity(gs.x, gs.edge_index)

# Apply single-graph attention to refine node embeddings

hc_att = self.attention_community(hc)

hh_att = self.attention_hierarchical(hh)

hs_att = self.attention_similarity(hs)

# --- Adaptive Attentive Multi-Graph Fusion --- (Eq. 8, 9, 10)

# Calculate attention weights for each graph representation

wc_i = self.fusion_q.t() @ torch.tanh(self.fusion_w(hc_att.t())) # Eq. 8

wh_i = self.fusion_q.t() @ torch.tanh(self.fusion_w(hh_att.t()))

ws_i = self.fusion_q.t() @ torch.tanh(self.fusion_w(hs_att.t()))

# Normalize weights across all nodes from all graphs (Eq. 9)

concat_weights = torch.cat([wc_i, wh_i, ws_i], dim=1)

norm_weights = F.softmax(concat_weights, dim=1)

ac = norm_weights[:, :wc_i.shape[1]]

ah = norm_weights[:, wc_i.shape[1]:wc_i.shape[1]+wh_i.shape[1]]

as_ = norm_weights[:, wc_i.shape[1]+wh_i.shape[1]:]

# Fuse graph embeddings using the learned weights (Eq. 10)

# We need to perform a weighted sum of the graph-level representations

hc_fused = global_add_pool(hc_att * ac.t(), gc.batch if gc.batch is not None else torch.zeros(gc.num_nodes, dtype=torch.long))

hh_fused = global_add_pool(hh_att * ah.t(), gh.batch if gh.batch is not None else torch.zeros(gh.num_nodes, dtype=torch.long))

hs_fused = global_add_pool(hs_att * as_.t(), gs.batch if gs.batch is not None else torch.zeros(gs.num_nodes, dtype=torch.long))

# Final embedding H is the sum of the weighted graph embeddings

H = hc_fused + hh_fused + hs_fused

# --- Classification ---

logits = self.classifier(H)

# Return embeddings and logits for loss calculation

return logits, (hc_att, hh_att, hs_att)

# --- 3. Optimization Function (Loss) ---

def amgf_gnn_loss(embeddings, logits, y_true, alpha=1e-3, beta=1.0):

"""

Combined loss function as described in Section 4.3.

"""

hc, hh, hs = embeddings

# --- Graph-Outcome Loss (Eq. 16) ---

loss_o = F.cross_entropy(logits, y_true)

# --- Multi-Graph Consistency Loss (L_cons) ---

# L2 normalize feature matrices

hc_norm = F.normalize(hc, p=2, dim=1)

hh_norm = F.normalize(hh, p=2, dim=1)

hs_norm = F.normalize(hs, p=2, dim=1)

# Intra-graph similarity matrices (Eq. 11)

Sc = hc_norm @ hc_norm.t()

Sh = hh_norm @ hh_norm.t()

Ss = hs_norm @ hs_norm.t()

# Reference similarity matrix

S_ref = (Sc + Sh + Ss) / 3.0

# Intra-graph loss (Eq. 12)

loss_intra = torch.norm(S_ref - Sc, p='fro')**2 + \

torch.norm(S_ref - Sh, p='fro')**2 + \

torch.norm(S_ref - Ss, p='fro')**2

# Inter-graph similarity (Eq. 13)

S_ch = hc_norm @ hh_norm.t()

S_cs = hc_norm @ hs_norm.t()

S_hs = hh_norm @ hs_norm.t()

# Inter-graph loss (Eq. 14)

loss_inter = torch.norm(S_ch - S_ch.t(), p='fro')**2 + \

torch.norm(S_cs - S_cs.t(), p='fro')**2 + \

torch.norm(S_hs - S_hs.t(), p='fro')**2

loss_cons = loss_intra + loss_inter

# Final combined loss (Eq. 17)

total_loss = alpha * loss_cons + beta * loss_o

return total_loss

# --- 4. Main Execution Example ---

if __name__ == '__main__':

print("--- Running AMGF-GNN End-to-End Example ---")

# --- Configuration ---

IN_FEATURES = 566 # Dimension of initial node features

HIDDEN_FEATURES = 64 # Dimension of GIN hidden layers

NUM_CLASSES = 3 # Example: Grade II, III, IV

# --- 1. Simulate Input Data (a single WSI) ---

wsi_image_placeholder = np.zeros((1024, 1024, 3)) # Dummy image

features, coords = nuclear_segmentation_and_feature_extraction(wsi_image_placeholder)

# --- 2. Construct the Three Graphs ---

graph_community, community_centroids, _ = construct_cell_community_graph(features, coords)

graph_hierarchical = construct_hierarchical_graph(community_centroids)

graph_similarity = construct_cell_similarity_graph(features)

# --- 3. Initialize Model and Optimizer ---

model = AMGF_GNN(

in_features=IN_FEATURES,

hidden_features=HIDDEN_FEATURES,

num_classes=NUM_CLASSES

)

optimizer = torch.optim.Adam(model.parameters(), lr=5e-4, weight_decay=1e-4)

# --- 4. Perform a single forward and backward pass ---

print("\nStep 4: Performing a single training step...")

model.train()

optimizer.zero_grad()

# Get model output

logits, node_embeddings = model(graph_community, graph_hierarchical, graph_similarity)

print(f" > Model output (logits): {logits.detach().numpy()}")

# Create a dummy ground truth label

true_label = torch.LongTensor([2]) # e.g., Grade IV

# Calculate loss

loss = amgf_gnn_loss(node_embeddings, logits, true_label)

print(f" > Calculated combined loss: {loss.item():.4f}")

# Backpropagation

loss.backward()

optimizer.step()

print(" > Backward pass and optimizer step completed successfully.")

print("\n--- Example Run Finished ---")

Related posts, You May like to read

- 7 Shocking Truths About Knowledge Distillation: The Good, The Bad, and The Breakthrough (SAKD)

- 7 Revolutionary Breakthroughs in Medical Image Translation (And 1 Fatal Flaw That Could Derail Your AI Model)

- DeepSPV: Revolutionizing 3D Spleen Volume Estimation from 2D Ultrasound with AI

- ACAM-KD: Adaptive and Cooperative Attention Masking for Knowledge Distillation

- GeoSAM2 3D Part Segmentation — Prompt-Controllable, Geometry-Aware Masks for Precision 3D Editing

- Probabilistic Smooth Attention for Deep Multiple Instance Learning in Medical Imaging

- A Knowledge Distillation-Based Approach to Enhance Transparency of Classifier Models

- Towards Trustworthy Breast Tumor Segmentation in Ultrasound Using AI Uncertainty

Hello aitrendblend.com Webmaster.