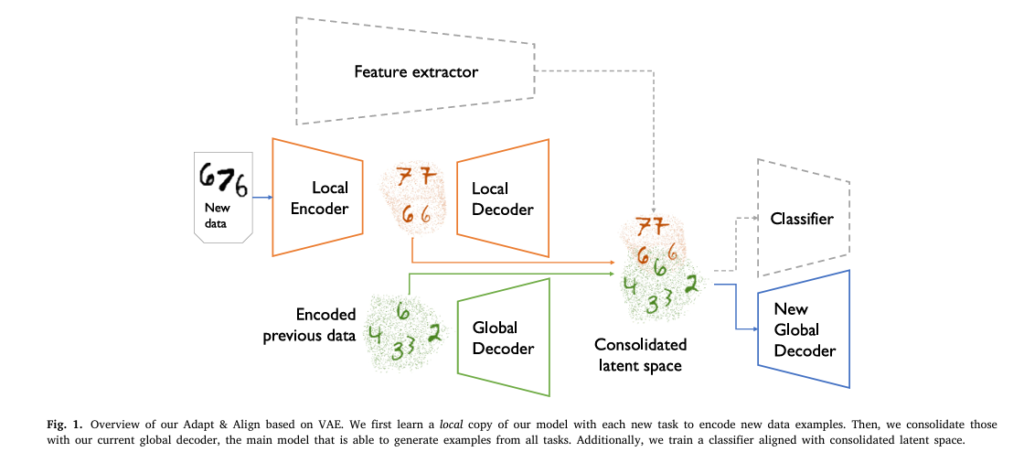

7 Revolutionary Breakthroughs in Continual Learning: The Rise of Adapt&Align

In the fast-evolving world of artificial intelligence, one of the most persistent challenges has been catastrophic forgetting: a phenomenon in which neural networks abruptly lose performance on previously learned tasks when trained on new data. This flaw undermines the dream of truly intelligent, adaptive systems. But what if there were a way to not only prevent forgetting […]
