Transforming Diabetic Foot Ulcer Care with AI-Powered Healing Phase Classification

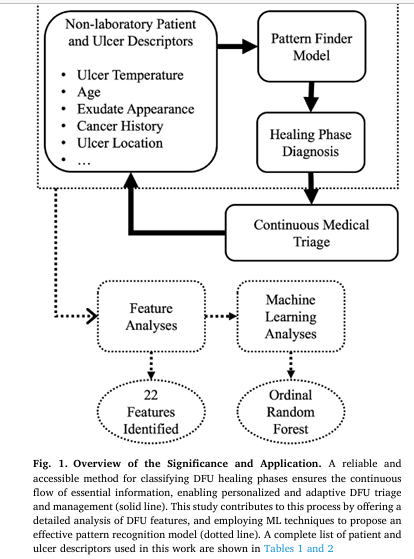

Revolutionizing Diabetic Foot Ulcer Management: How Machine Learning Classifies Healing Phases Using Clinical Metadata

Diabetic foot ulcers (DFUs) are among the most severe and costly complications of diabetes, affecting up to 25% of people with the condition during their lifetime. Left untreated or mismanaged, DFUs can progress to infection, gangrene, and ultimately lead to […]
