Introduction: A Major Breakthrough in Skin Cancer Detection (2024)

Skin cancer is one of the most common and potentially deadly forms of cancer worldwide. According to recent estimates, more than 3 million people in the U.S. alone are diagnosed with skin cancer each year. Early detection is crucial for improving survival rates, yet traditional diagnosis relies on subjective visual inspection and time-consuming clinical examination.

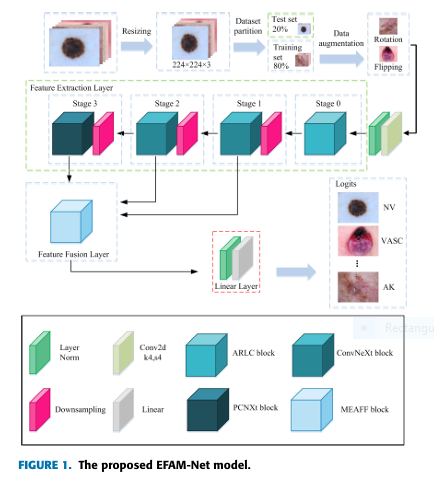

In 2024, a groundbreaking advance in skin lesion classification arrived with EFAM-Net, a deep learning model designed to strengthen feature extraction, feature fusion, and attention for multi-class skin lesion classification. Built on an enhanced convolutional backbone with attention-based learning, it achieves high accuracy on two public datasets, ISIC 2019 and HAM10000, as well as on a private dataset.

In this article, we will explore how EFAM-Net works, its innovative components, and why it represents a powerful and accurate solution for automated skin cancer detection.

What is EFAM-Net?

EFAM-Net, short for Enhanced Feature Attention and Multi-scale Fusion Network, is a deep learning architecture designed specifically for skin lesion classification. Developed by a team of researchers from institutions including North China University of Science and Technology and the University of Limerick, EFAM-Net introduces three key components:

- Attention Residual Learning ConvNeXt (ARLC) Block

- Parallel ConvNeXt (PCNXt) Block

- Multi-scale Efficient Attention Feature Fusion (MEAFF) Block

These components work together to improve feature extraction, reduce noise, and enhance classification accuracy through attention-based learning and multi-scale feature fusion.

The Problem: Limitations in Traditional Skin Lesion Classification

Before diving into EFAM-Net, it’s important to understand the limitations of existing methods:

- Low Feature Discrimination: Subtle differences between normal skin and lesion areas make discriminative features hard to extract.

- High Intra-class Variability and Inter-class Similarity: Lesions of the same type can look quite different, while lesions of different types can look alike, which makes classification challenging.

- Limited Interpretability: Many CNN-based models offer little insight into how they reach their decisions.

Traditional models such as DenseNet, ResNet, and Inception suffer from these limitations, prompting the need for more advanced architectures like EFAM-Net.

EFAM-Net Architecture: A Deep Dive

EFAM-Net is built on the ConvNeXt backbone, enhanced with three novel blocks that improve performance in skin lesion classification.

1. ARLC Block: Attention Residual Learning ConvNeXt

The ARLC block is placed in the shallow layers of the network to enhance low-level feature extraction. It combines depthwise convolution, layer normalization, and 1×1 convolution to extract local features at low computational cost.

Key Formula:

$$
\text{Branch}_l^i = \begin{cases} X_i & \text{if } l=1 \\ \text{Conv}_{1\times1}\left(\text{GELU}\left(\text{Conv}_{1\times1}\left(\text{LN}\left(\text{DWSC}\left(X_i\right)\right)\right)\right)\right) & \text{if } l=2 \\ X_i \otimes \sigma\left(\text{LN}\left(\text{Branch}_2^i\right)\right) \otimes \alpha & \text{if } l=3 \end{cases}
$$

Here σ is the sigmoid function and ⊗ denotes element-wise multiplication. This attention branch lets the model focus on important regions while adding very few parameters: only a LayerNorm and the scaling factor α.
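To make the gating branch concrete, here is a minimal NumPy sketch of Branch_3 for a single (C, H, W) feature map. It assumes the LayerNorm normalizes over the channel axis and uses a random tensor as a stand-in for Branch_2; the point is that the gate is purely element-wise and that its only parameters are the LayerNorm's 2C affine values plus the scalar α.

```python
import numpy as np

def layer_norm_channels(x, weight, bias, eps=1e-6):
    """LayerNorm over the channel axis of a (C, H, W) feature map."""
    mean = x.mean(axis=0, keepdims=True)
    var = x.var(axis=0, keepdims=True)
    x_hat = (x - mean) / np.sqrt(var + eps)
    return weight[:, None, None] * x_hat + bias[:, None, None]

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def arlc_branch3(x, branch2, ln_weight, ln_bias, alpha):
    """Branch_3 = X * sigmoid(LN(Branch_2)) * alpha, all element-wise."""
    return x * sigmoid(layer_norm_channels(branch2, ln_weight, ln_bias)) * alpha

C, H, W = 4, 3, 3
rng = np.random.default_rng(0)
x = rng.standard_normal((C, H, W))
branch2 = rng.standard_normal((C, H, W))  # stand-in for the conv branch output
ln_weight, ln_bias = np.ones(C), np.zeros(C)
alpha = 0.5

b3 = arlc_branch3(x, branch2, ln_weight, ln_bias, alpha)
extra_params = ln_weight.size + ln_bias.size + 1  # LayerNorm affine terms + alpha
print(b3.shape, extra_params)  # (4, 3, 3) 9
```

Because the sigmoid output lies in (0, 1), the gate can only shrink each input value, and with α = 0 the branch contributes nothing at all.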

2. PCNXt Block: Parallel ConvNeXt

The PCNXt block is used in the deeper layers to extract global features. It adds a parallel branch with global average pooling (GAP) and layer normalization, allowing the model to capture semantic information and background context.

Key Formula:

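$$Y = X \oplus \text{Conv}_{1 \times 1}\left(\text{GELU}\left(\text{Conv}_{1 \times 1}\left(\text{LN}\left(\text{DWSC}(X)\right)\right)\right)\right) \oplus \text{LN}\left(\text{GAP}(X)\right)$$

Here ⊕ denotes element-wise addition, with the pooled vector GAP(X) broadcast over all spatial positions. This block improves feature propagation and reuse with negligible extra complexity, since the global branch adds only a LayerNorm.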
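The key detail of the PCNXt formula is that GAP(X) yields a single C-dimensional vector, so the global branch adds the same context vector at every spatial position. A minimal NumPy sketch, assuming a (C, H, W) input, a LayerNorm without affine parameters, and a random tensor in place of the convolutional branch:

```python
import numpy as np

def layer_norm_vec(v, eps=1e-6):
    """LayerNorm without affine parameters over a length-C vector."""
    return (v - v.mean()) / np.sqrt(v.var() + eps)

def pcnxt_combine(x, residual):
    """Y = X + residual + LN(GAP(X)) for one (C, H, W) feature map."""
    gap = x.mean(axis=(1, 2))                  # GAP: (C, H, W) -> (C,)
    glob = layer_norm_vec(gap)[:, None, None]  # (C, 1, 1), broadcast over H and W
    return x + residual + glob

rng = np.random.default_rng(1)
x = rng.standard_normal((8, 5, 5))
residual = rng.standard_normal((8, 5, 5))  # stand-in for the ConvNeXt-style branch
y = pcnxt_combine(x, residual)

glob_part = y - x - residual
# The global contribution is identical at every spatial position
print(np.allclose(glob_part, glob_part[:, :1, :1]))  # True
```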

3. MEAFF Block: Multi-scale Efficient Attention Feature Fusion

The MEAFF block fuses features across different scales using Efficient Channel Attention (ECA) and multi-scale convolution. It models dependencies between channels and improves classification performance by combining shallow and deep features.

Key Formula:

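$$
y_i = \sigma\left(\text{Conv1D}_k\left(\text{GAP}(x_i)\right)\right) \otimes x_i, \qquad k = \left| \frac{\log_2 C}{\gamma} + \frac{b}{\gamma} \right|_{\text{odd}}
$$

Here x_i is the feature map at scale i, C is its number of channels, Conv1D_k is a 1D convolution with kernel size k applied across the channel dimension, |t|_odd denotes the odd number nearest to t, and γ and b are hyperparameters (ECA-Net uses γ = 2 and b = 1).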

This block enables the model to better distinguish between similar lesion types.
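The ECA step can be sketched in NumPy as follows. This assumes ECA-Net's kernel-size rule with γ = 2 and b = 1, and the rounding convention common in ECA implementations (truncate, then bump even values up to the next odd number). The feature maps are random, the 1D kernel weights are placeholders for learned values, and the channel widths (192, 384, 768) are the ConvNeXt-T stage widths, assumed here for illustration. Like the PyTorch code later in this article, it fuses scales by applying ECA, pooling each scale, and concatenating; the multi-scale convolution mentioned above is not modeled.

```python
import math
import numpy as np

def eca_kernel_size(channels, gamma=2, b=1):
    """Adaptive ECA kernel size k = |(log2(C) + b) / gamma|_odd."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1  # force an odd kernel size

def eca(x, weights):
    """ECA channel attention on a (C, H, W) map with a 1D kernel over channels."""
    k = weights.size
    y = x.mean(axis=(1, 2))                    # GAP -> (C,)
    y_pad = np.pad(y, k // 2)                  # zero padding keeps length C
    conv = np.array([y_pad[i:i + k] @ weights for i in range(y.size)])
    att = 1.0 / (1.0 + np.exp(-conv))          # sigmoid
    return x * att[:, None, None]              # re-weight each channel

# Hypothetical stage outputs (channels, spatial size) for a 224x224 input
rng = np.random.default_rng(2)
feats = [rng.standard_normal((c, s, s)) for c, s in [(192, 28), (384, 14), (768, 7)]]
pooled = []
for f in feats:
    k = eca_kernel_size(f.shape[0])
    w = np.full(k, 1.0 / k)                    # placeholder for learned weights
    pooled.append(eca(f, w).mean(axis=(1, 2)))  # ECA, then GAP per scale
fused = np.concatenate(pooled)
print([eca_kernel_size(c) for c in (64, 192, 768, 2048)], fused.shape)  # [3, 5, 5, 7] (1344,)
```

The kernel size grows only logarithmically with the channel count, which keeps the attention cheap even for the 768-channel deep features.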

EFAM-Net Performance: Superior Accuracy Across Datasets

EFAM-Net was evaluated on three datasets:

- ISIC 2019: 25,331 images across 8 classes

- HAM10000: 10,015 images across 7 classes

- Private Dataset: 2,900 images across 6 classes

Key Results:

| Dataset | Accuracy | F1-Score | Specificity |
|---|---|---|---|
| ISIC 2019 | 92.30% | 91.78% | 93.01% |
| HAM10000 | 93.95% | 93.25% | 94.10% |
| Private Dataset | 94.31% | 93.87% | 94.55% |
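The article does not state how F1-score and specificity were averaged over classes. A common choice, assumed in this sketch, is macro averaging, with specificity computed one-vs-rest for each class. The confusion matrix below is made up for illustration.

```python
import numpy as np

def _safe_div(num, den):
    """Element-wise num / den, returning 0 where den == 0."""
    return np.divide(num, den, out=np.zeros_like(num), where=den > 0)

def multiclass_metrics(cm):
    """Accuracy, macro F1 and macro specificity from a confusion matrix.

    cm[i, j] counts samples of true class i predicted as class j.
    """
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp          # predicted as class c but wrong
    fn = cm.sum(axis=1) - tp          # class c samples that were missed
    tn = total - tp - fp - fn         # everything else (one-vs-rest)
    f1 = _safe_div(2 * tp, 2 * tp + fp + fn)
    specificity = _safe_div(tn, tn + fp)
    return tp.sum() / total, f1.mean(), specificity.mean()

cm = np.array([[8, 2, 0],
               [1, 7, 2],
               [0, 1, 9]])
acc, macro_f1, macro_spec = multiclass_metrics(cm)
print(f"accuracy={acc:.4f}  macro F1={macro_f1:.4f}  macro specificity={macro_spec:.4f}")
```

Because one-vs-rest specificity counts every other class as a negative, it tends to be high in multi-class settings, which is consistent with specificity exceeding accuracy in the table above.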

EFAM-Net outperformed established models such as ResNet-101, DenseNet-201, and EfficientNet-B0 on all evaluation metrics, demonstrating strong performance and robustness.

Why EFAM-Net Stands Out: Key Advantages

1. Enhanced Feature Extraction

The ARLC block improves the model’s ability to capture low-level features such as texture and color, which are crucial for distinguishing between similar lesion types.

2. Global Feature Learning

The PCNXt block enables the model to learn global features and background information, helping it better locate lesion areas.

3. Multi-scale Feature Fusion

The MEAFF block fuses features from multiple scales, so that shallow (local) and deep (global) features are combined for improved classification.

4. Lightweight and Efficient

Despite its more elaborate architecture, EFAM-Net has only 28.8 million parameters, just 3.6% more than the baseline ConvNeXt model.
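As a quick sanity check, the baseline size implied by these two figures can be computed directly. This assumes the 3.6% is measured relative to the baseline's parameter count; the article does not give the baseline's exact size.

```python
# Back-of-envelope check of the parameter figures quoted above
efam_params_m = 28.8  # EFAM-Net parameters in millions (from the article)
overhead = 0.036      # "3.6% more than the baseline ConvNeXt model"

baseline_m = efam_params_m / (1 + overhead)
extra_m = efam_params_m - baseline_m
print(f"baseline ≈ {baseline_m:.1f}M, added ≈ {extra_m:.1f}M")  # baseline ≈ 27.8M, added ≈ 1.0M
```

In other words, the three new blocks cost roughly one million extra parameters.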

5. Reduced Overfitting

The attention mechanisms and residual learning techniques help reduce overfitting, especially on smaller datasets.

Real-World Applications of EFAM-Net

EFAM-Net has significant implications for dermatology, particularly for automated diagnosis systems. It can be integrated into:

- Mobile Health Apps: For early detection of skin cancer using smartphone cameras

- Telemedicine Platforms: To assist remote diagnosis in underserved areas

- Clinical Decision Support Systems: To provide second opinions for dermatologists

Moreover, the model’s decisions can be visualized with Grad-CAM, which highlights the image regions that most influenced a prediction. This helps clinicians understand the model’s reasoning, which supports trust and adoption.
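Grad-CAM weights each channel of a chosen convolutional layer by the spatial average of the class score's gradient with respect to that channel, sums the weighted channels, and applies a ReLU. The NumPy sketch below shows only that combination step. It assumes the activations and gradients were already obtained from a framework's backward pass (for example via PyTorch hooks); which EFAM-Net layer is visualized is not stated in the article, and the toy arrays are made up.

```python
import numpy as np

def grad_cam(activations, gradients):
    """Grad-CAM heatmap from one layer's activations and gradients.

    activations, gradients: (K, H, W) arrays for a single image, where
    gradients = d(class score) / d(activations) from a backward pass.
    """
    weights = gradients.mean(axis=(1, 2))             # alpha_k: GAP of each gradient map
    cam = np.tensordot(weights, activations, axes=1)  # sum_k alpha_k * A^k -> (H, W)
    cam = np.maximum(cam, 0.0)                        # ReLU keeps positive evidence only
    peak = cam.max()
    return cam / peak if peak > 0 else cam            # scale to [0, 1] for display

# Toy example: channel 0 fires top-left and supports the class,
# channel 1 fires bottom-right and works against it.
activations = np.array([[[1.0, 0.0], [0.0, 0.0]],
                        [[0.0, 0.0], [0.0, 1.0]]])
gradients = np.stack([np.full((2, 2), 1.0), np.full((2, 2), -1.0)])
cam = grad_cam(activations, gradients)
print(cam)
```

In practice the heatmap is upsampled to the input resolution and overlaid on the dermoscopic image.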

If you’re interested in 3D medical image segmentation using deep learning, you may also find this article helpful: UNETR++ vs. Traditional Methods: A 3D Medical Image Segmentation Breakthrough with 71% Efficiency Boost

Challenges and Future Directions

While EFAM-Net represents a major advancement, there are still challenges to address:

- Class Imbalance: Some datasets have an uneven class distribution, which hurts performance on rare classes.

- Generalization: The model must perform reliably across different imaging modalities and patient populations.

- Interpretability: Deep learning models for medical applications still need to become more transparent.
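Class imbalance is often mitigated by weighting the loss. As one illustration (a common technique, not one the article attributes to EFAM-Net), inverse-frequency class weights w_c = N / (K · n_c) can be computed as follows; the class counts in the example are hypothetical.

```python
import numpy as np

def inverse_frequency_weights(class_counts):
    """Per-class loss weights w_c = N / (K * n_c).

    N is the total number of samples, K the number of classes and n_c the
    count of class c. Rare classes get weights above 1, common classes below 1,
    and a perfectly balanced dataset gets all-ones weights.
    """
    counts = np.asarray(class_counts, dtype=float)
    return counts.sum() / (counts.size * counts)

# Hypothetical two-class split with a 1:3 imbalance
weights = inverse_frequency_weights([10, 30])
print(weights)
```

In PyTorch, such weights can be passed to the loss as nn.CrossEntropyLoss(weight=torch.tensor(weights, dtype=torch.float32)).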

Future research will focus on extending the model to multi-modal data (e.g., combining clinical data with images) and integrating it into real-time diagnostic systems.

Conclusion: EFAM-Net – A Game-Changer in Skin Cancer Detection

EFAM-Net represents a major step forward in skin lesion classification, offering high accuracy, robustness, and interpretability. With its innovative ARLC, PCNXt, and MEAFF blocks, it addresses key limitations of traditional CNN-based models and sets a new standard for automated skin cancer detection.

Whether you’re a dermatologist, researcher, or healthcare provider, EFAM-Net provides a valuable tool for improving diagnostic accuracy and patient outcomes.

Call to Action: Stay Ahead with the Latest in Skin Cancer Detection

Want to stay updated on the latest advancements in skin cancer detection and AI-powered diagnostics? Subscribe to our newsletter today and receive exclusive insights, research updates, and expert tips directly to your inbox.

Don’t miss out on the future of dermatology. Join our community now!

Related Articles:

- ConvNeXt vs ResNet: Which is Better for Medical Image Classification?

- EFAM-Net: A Multi-Class Skin Lesion Classification Model Utilizing Enhanced Feature Fusion and Attention Mechanisms

- How to Improve Skin Lesion Classification with Attention Mechanisms

- Top 5 Deep Learning Models for Skin Cancer Detection in 2024

Frequently Asked Questions (FAQ)

Q: What is EFAM-Net?

A: EFAM-Net is a deep learning model for multi-class skin lesion classification that uses enhanced feature fusion and attention mechanisms to improve accuracy.

Q: What datasets was EFAM-Net tested on?

A: EFAM-Net was evaluated on ISIC 2019, HAM10000, and a private dataset containing 2,900 images.

Q: How accurate is EFAM-Net?

A: EFAM-Net achieved 92.30% accuracy on ISIC 2019, 93.95% on HAM10000, and 94.31% on the private dataset.

Q: What are the key components of EFAM-Net?

A: The key components are the ARLC, PCNXt, and MEAFF blocks, which enhance feature extraction, global feature learning, and multi-scale fusion, respectively.

Q: Is EFAM-Net available for public use?

A: While the paper is publicly available, implementation details and pre-trained models may require further research or collaboration with the authors.

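Below is a PyTorch sketch of EFAM-Net based on the architecture described above. It is not the authors’ official implementation, and details such as the stage depths, the layer widths inside each block, and the training hyperparameters are assumptions rather than values confirmed by the paper.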

```python
import math

import torch
import torch.nn as nn


class DropPath(nn.Module):
    """Stochastic depth: drops the residual branch per sample during training."""

    def __init__(self, drop_prob=0.0):
        super().__init__()
        self.drop_prob = drop_prob

    def forward(self, x):
        if self.drop_prob == 0.0 or not self.training:
            return x
        keep = 1.0 - self.drop_prob
        mask = x.new_empty((x.shape[0],) + (1,) * (x.ndim - 1)).bernoulli_(keep)
        return x * mask / keep


class LayerNorm(nn.LayerNorm):
    """Channels-first LayerNorm: normalizes the C axis of (B, C, H, W) tensors."""

    def forward(self, x):
        return super().forward(x.permute(0, 2, 3, 1)).permute(0, 3, 1, 2)


class ConvNeXtBlock(nn.Module):
    """Standard ConvNeXt block, used in stages 1 and 2."""

    def __init__(self, dim, drop_path=0.0):
        super().__init__()
        self.dwconv = nn.Conv2d(dim, dim, 7, 1, 3, groups=dim)
        self.norm = nn.LayerNorm(dim, eps=1e-6)  # applied to channels-last input
        self.pwconv1 = nn.Linear(dim, 4 * dim)
        self.act = nn.GELU()
        self.pwconv2 = nn.Linear(4 * dim, dim)
        self.drop_path = DropPath(drop_path) if drop_path > 0.0 else nn.Identity()

    def forward(self, x):
        shortcut = x
        x = self.dwconv(x).permute(0, 2, 3, 1)  # (B, C, H, W) -> (B, H, W, C)
        x = self.pwconv2(self.act(self.pwconv1(self.norm(x))))
        x = x.permute(0, 3, 1, 2)  # back to (B, C, H, W)
        return shortcut + self.drop_path(x)


class ARLC(nn.Module):
    """Stage-0 block replacing ConvNeXt in EFAM-Net.

    Branch 1 is the identity, branch 2 is DW conv -> LN -> 1x1 -> GELU -> 1x1,
    and branch 3 gates the input: X * sigmoid(LN(branch 2)) * alpha.
    """

    def __init__(self, dim, drop_path=0.0):
        super().__init__()
        self.branch2 = nn.Sequential(
            nn.Conv2d(dim, dim, 7, 1, 3, groups=dim),  # DWSC, assumed 7x7 depthwise
            LayerNorm(dim, eps=1e-6),
            nn.Conv2d(dim, dim, 1),
            nn.GELU(),
            nn.Conv2d(dim, dim, 1),
        )
        self.norm3 = LayerNorm(dim, eps=1e-6)
        self.alpha = nn.Parameter(torch.zeros(1))  # gate scale, starts at zero
        self.drop_path = DropPath(drop_path) if drop_path > 0.0 else nn.Identity()

    def forward(self, x):
        b2 = self.branch2(x)
        b3 = x * torch.sigmoid(self.norm3(b2)) * self.alpha
        return x + self.drop_path(b2 + b3)  # identity branch added once


class PCNXt(nn.Module):
    """Stage-3 block: ConvNeXt residual branch plus a parallel GAP -> LN branch."""

    def __init__(self, dim, drop_path=0.0):
        super().__init__()
        self.dwconv = nn.Conv2d(dim, dim, 7, 1, 3, groups=dim)
        self.norm1 = nn.LayerNorm(dim, eps=1e-6)  # applied to channels-last input
        self.pw1 = nn.Linear(dim, 4 * dim)
        self.act = nn.GELU()
        self.pw2 = nn.Linear(4 * dim, dim)
        self.gap = nn.AdaptiveAvgPool2d(1)
        self.norm2 = LayerNorm(dim, eps=1e-6)  # channels-first, on (B, C, 1, 1)
        self.drop_path = DropPath(drop_path) if drop_path > 0.0 else nn.Identity()

    def forward(self, x):
        res = self.dwconv(x).permute(0, 2, 3, 1)
        res = self.pw2(self.act(self.pw1(self.norm1(res))))
        res = res.permute(0, 3, 1, 2)
        glob = self.norm2(self.gap(x))  # (B, C, 1, 1), broadcast over H and W
        return x + self.drop_path(res + glob)


class ECA(nn.Module):
    """Efficient Channel Attention with ECA-Net's k = |(log2 C + b) / gamma|_odd."""

    def __init__(self, C, gamma=2, b=1):
        super().__init__()
        t = int(abs((math.log2(C) + b) / gamma))
        k = t if t % 2 else t + 1  # force an odd kernel size
        self.conv = nn.Conv1d(1, 1, k, padding=k // 2, bias=False)

    def forward(self, x):  # x: (B, C, H, W)
        y = x.mean((2, 3))  # GAP -> (B, C)
        y = self.conv(y.unsqueeze(1)).squeeze(1)  # 1D conv across channels
        return x * torch.sigmoid(y)[:, :, None, None]


class MEAFF(nn.Module):
    """ECA on each of three stage outputs, then GAP and concatenation."""

    def __init__(self, dims=(192, 384, 768)):
        super().__init__()
        assert len(dims) == 3
        self.ecas = nn.ModuleList([ECA(c) for c in dims])

    def forward(self, feats):  # feats: outputs of stages 1, 2 and 3
        pooled = [eca(f).mean((2, 3)) for f, eca in zip(feats, self.ecas)]
        return torch.cat(pooled, dim=1)  # (B, C1 + C2 + C3)


class EFAMNet(nn.Module):
    def __init__(self, num_classes, depths=(3, 3, 9, 3),
                 dims=(96, 192, 384, 768), drop_path_rate=0.0):
        super().__init__()
        # Stem plus three downsampling layers, as in ConvNeXt-T
        self.downsample_layers = nn.ModuleList([
            nn.Sequential(nn.Conv2d(3, dims[0], 4, 4), LayerNorm(dims[0], eps=1e-6))
        ])
        for i in range(3):
            self.downsample_layers.append(nn.Sequential(
                LayerNorm(dims[i], eps=1e-6),
                nn.Conv2d(dims[i], dims[i + 1], 2, 2),
            ))
        # Stochastic-depth rate grows linearly with the block index
        dpr = torch.linspace(0, drop_path_rate, sum(depths)).tolist()
        self.stages = nn.ModuleList()
        cur = 0
        for i, (depth, dim) in enumerate(zip(depths, dims)):
            if i == 0:
                block = ARLC
            elif i == 3:
                block = PCNXt
            else:
                block = ConvNeXtBlock
            self.stages.append(nn.Sequential(
                *[block(dim, dpr[cur + j]) for j in range(depth)]))
            cur += depth
        self.meaff = MEAFF(dims[1:])  # fuses the outputs of stages 1-3
        self.norm = nn.LayerNorm(dims[-1], eps=1e-6)
        # Classifier input: pooled last stage + MEAFF concatenation
        self.head = nn.Linear(dims[-1] + sum(dims[1:]), num_classes)
        self.apply(self._init_weights)

    @staticmethod
    def _init_weights(m):
        if isinstance(m, (nn.Conv2d, nn.Linear)):
            nn.init.trunc_normal_(m.weight, std=0.02)
            if m.bias is not None:
                nn.init.zeros_(m.bias)

    def forward(self, x):
        outs = []
        for i in range(4):
            x = self.stages[i](self.downsample_layers[i](x))
            if i >= 1:
                outs.append(x)  # keep stages 1-3 for MEAFF
        fused = self.meaff(outs)  # (B, C1 + C2 + C3)
        x = self.norm(x.mean((2, 3)))  # global average pool of the last stage
        return self.head(torch.cat([x, fused], dim=1))


if __name__ == "__main__":
    model = EFAMNet(num_classes=8)  # ISIC 2019 has 8 classes
    x = torch.randn(2, 3, 224, 224)
    print("Output shape:", model(x).shape)  # torch.Size([2, 8])
```

The following training loop is a starting point for ISIC 2019. It assumes the EFAMNet class above is in scope and that the images are arranged in ImageFolder format under ISIC2019/train and ISIC2019/val; the hyperparameters are placeholders.

```python
import torch
import torch.nn as nn
import torch.optim as optim
from torch.utils.data import DataLoader
from torchvision import datasets, transforms

# --- transforms ---
train_tf = transforms.Compose([
    transforms.Resize((224, 224)),
    transforms.RandomHorizontalFlip(),
    transforms.RandomRotation(15),
    transforms.ToTensor(),
])
val_tf = transforms.Compose([transforms.Resize((224, 224)), transforms.ToTensor()])

# --- datasets / loaders ---
train_ds = datasets.ImageFolder('ISIC2019/train', transform=train_tf)
val_ds = datasets.ImageFolder('ISIC2019/val', transform=val_tf)
train_ld = DataLoader(train_ds, batch_size=64, shuffle=True, num_workers=4)
val_ld = DataLoader(val_ds, batch_size=64, shuffle=False, num_workers=4)

# --- model / loss / optimizer ---
device = 'cuda' if torch.cuda.is_available() else 'cpu'
model = EFAMNet(num_classes=len(train_ds.classes)).to(device)
criterion = nn.CrossEntropyLoss()
optimizer = optim.AdamW(model.parameters(), lr=5e-4, weight_decay=0.05)
scheduler = optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max=40)

# --- epochs ---
for epoch in range(40):
    model.train()
    for x, y in train_ld:
        x, y = x.to(device), y.to(device)
        optimizer.zero_grad()
        loss = criterion(model(x), y)
        loss.backward()
        optimizer.step()
    scheduler.step()  # one cosine step per epoch (T_max = 40 epochs)
    # validation ...
```

Author Bio:

Zhanlin Ji, Ph.D., is a Professor at Zhejiang Agriculture and Forestry University and an Associate Researcher at the Telecommunications Research Centre, University of Limerick. He has published over 100 papers in AI, IoT, and image processing.