Introduction

Federated Learning (FL) has been heralded as the privacy-preserving future of AI, especially in sensitive domains like healthcare. But behind its collaborative promise lies a serious vulnerability: gradient inversion attacks (GIA). These attacks can reconstruct original training images from shared gradients—exposing confidential patient data.

Enter the breakthrough: Shadow Defense.

In this article, we dive deep into:

- The 7 alarming privacy risks of federated learning

- Why traditional defenses fail

- How the shadow defense framework tackles GIA with surgical precision

By the end, you’ll understand how this innovative method balances security, efficiency, and model performance—without compromising on any front.

🔍 What Is a Gradient Inversion Attack (GIA)?

In federated learning, participants train a shared global model by uploading gradients (not data) to a central server. But these gradients can be reverse-engineered to reconstruct the original input images—a technique known as a gradient inversion attack.

GIAs come in two forms:

- Optimization-based GIA – Iteratively reconstructs data using dummy inputs.

- Model-based GIA – Uses a generative model like GAN to regenerate images more efficiently.

In both cases, high-fidelity reconstructions are possible, especially with access to Batch Normalization (BN) statistics—common in medical imaging tasks.

🚨 7 Alarming Risks in Federated Learning Privacy

1. Blind Perturbations Damage Model Accuracy

Most existing defenses inject noise uniformly—either too much (hurting accuracy) or too little (leaving data vulnerable). There’s no content-awareness.

2. Reconstruction Is Easier with Batch Normalization

Medical image datasets often have low diversity, making their BN statistics stable and informative—giving attackers an advantage.

3. Training Dynamics Increase Privacy Leakage

As training progresses, models refine BN stats and class boundaries, which ironically increases vulnerability over time.

4. Pretrained Models Leak More in Early Rounds

Using pretrained weights speeds up convergence but also reveals more sensitive gradients at the start—especially dangerous if the model is attacked early.

5. One-Sample Clients Are Extremely Exposed

Clients with few samples (even one) are at high risk, as the server can easily overfit and reconstruct their data from gradients.

6. Standard Defenses Are Computationally Heavy

Techniques like secure multi-party computation or differential privacy come with excessive computational costs or significant performance trade-offs.

7. No Interpretability in Defense Decisions

Traditional methods don’t interpret which regions of an image are vulnerable, leading to inefficient or misaligned noise injection.

🛡️ The Shadow Defense Solution: A Game-Changer

The authors propose a GAN-based shadow model to simulate adversaries. By identifying vulnerable image regions, this approach enables selective, targeted noise injection. The result?

Better privacy and task accuracy with minimal computational overhead.

💡 How Shadow Defense Works (Simplified)

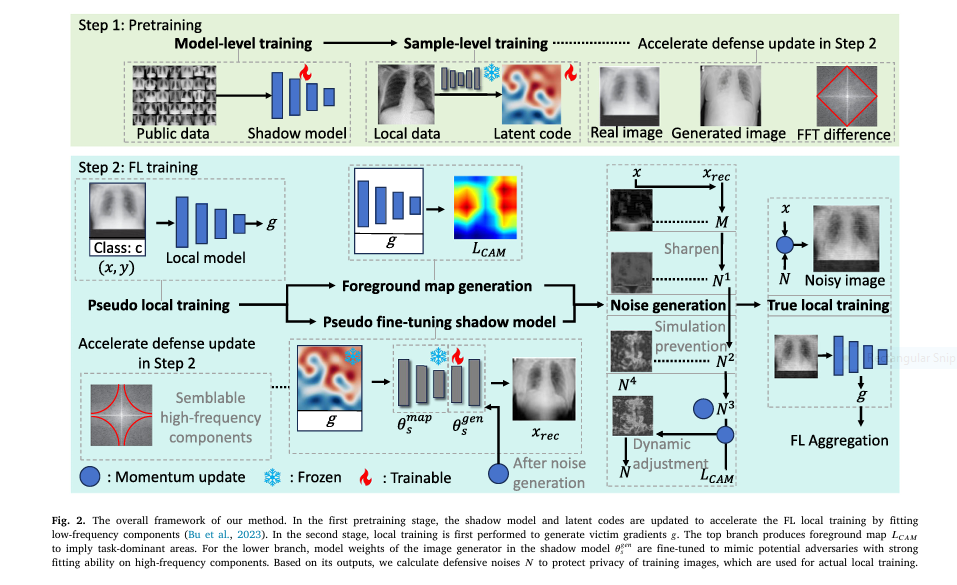

🧠 1. Pretraining the Shadow Model

- A GAN (e.g., StyleGAN3) is pretrained on a public dataset.

- Each training image is encoded into latent variables to match structure and style (low-frequency features).

🔁 2. Pseudo Local Training

- Clients generate gradients using real training data (victim gradients).

- These gradients simulate what a malicious server could use in a GIA.

🧪 3. Pseudo Attack via Shadow Model

- The shadow GAN is fine-tuned to mimic how an attacker might reconstruct images from the gradients.

- Reconstruction loss is calculated to understand which image areas are most vulnerable.

🔍 4. Foreground Map (Preserve What Matters)

- Grad-CAM++ is used to locate task-relevant regions.

- These areas are preserved from excessive noise to retain model performance.

🌪️ 5. Adaptive Noise Injection

- Noise is added selectively to privacy-sensitive areas.

- Histogram equalization and softmax are used to distribute noise in a way that’s visually untraceable but adversarially protective.

🔄 6. Real Local Training

- The noised images are now used to update the model.

- Resulting gradients are uploaded to the server, effectively masking private data.

📊 Experimental Results: Outshining the Competition

Datasets Used:

- ChestXRay (grayscale, high contrast)

- EyePACS (color fundus, multi-channel)

Compared Defenses:

- FedAvg (baseline)

- Differential Privacy (DP)

- Gradient Clipping (GC)

- Gradient Sparsification (GS)

- Soteria, OUTPOST, Censor

🔥 Key Results:

| Metric | Shadow Defense (Ours) | Best SOTA |

|---|---|---|

| F1 Score Degradation | <1% | Up to 15% |

| PSNR Improvement | +3.73 (ChestXRay) | +2.78 (EyePACS) |

| SSIM Gain | +0.2 | +0.166 |

| LPIPS (Visual Similarity) | Significantly Better | Inconsistent |

The method consistently showed strong defense across all rounds and image regions—especially foregrounds, which are often where pathologies lie.

✅ Why Shadow Defense Wins

- 🎯 Precision: Noise only where needed

- ⚡ Efficiency: Low computational overhead

- 🧠 Interpretability: Uses attention maps to guide noise

- 🧪 Resilient Against All GIA Types: Works for both optimization-based and model-based attacks

- 🎯 Scalable: Tested on both grayscale and RGB datasets

- 🤝 Minimal Task Impact: <1% accuracy loss in worst-case

🔬 Technical Highlights (For Researchers)

- Noise magnitude increases over training rounds, matching adversarial strength.

- Foreground suppression uses:

$$N_4 = N_3 – \alpha \cdot \operatorname{sign}(N_3) \cdot L_{\text{CAM}}$$

- Final noise injected as:

$$N = \left| \frac{\max(x)}{\max(N_4)} \cdot w_N \right| N_4$$

- Shadow model updated via:

$$\theta_s = \alpha_{\text{ema}} \cdot \theta_s + (1 – \alpha_{\text{ema}}) \cdot \theta_s’$$

These elements make the system both theoretically sound and practically robust.

🧪 Ablation Study: What Matters Most?

| Component Removed | Performance Drop (PSNR ↓) |

|---|---|

| Pretraining latent codes | -2.2 |

| No histogram equalization | -0.77 |

| No Grad-CAM++ (CAM) | -2.62 |

The study shows that each part of the framework plays a critical role in fortifying privacy without breaking the model.

If you’re Interested in Brain Tumor Analysis with advance methods , you may also find this article helpful: 7 Groundbreaking Insights from a Revolutionary Brain Aging AI Model You Can’t Ignore

Alternatives to Shadow Defense: Are They Better?

While Shadow Defense is powerful, it’s not the only option. Here’s how it stacks up against common alternatives:

| METHOD | STRENGTHS | WEAKNESSES |

|---|---|---|

| Differential Privacy (DP) | Strong theoretical guarantees | Significant accuracy loss |

| Gradient Sparsification | Reduces communication overhead | Vulnerable to advanced GIAs |

| Secure Multi-party Computation | End-to-end encryption | High computational cost |

| Soteria | Focuses on representation-level perturbations | Limited to specific layers |

| OUTPOST | Combines sparsification and noise injection | Complex parameter tuning |

| Censor | Uses Bayesian sampling for gradient masking | Struggles with large datasets |

| Shadow Defense | Adaptive, interpretable, minimal accuracy loss | Requires GAN knowledge |

Shadow Defense emerges as a balanced choice, offering practical protection without extreme trade-offs.

🧭 Final Thoughts

Federated learning has the power to revolutionize sensitive data training—but not without addressing its inherent privacy vulnerabilities.

Shadow Defense represents a major step forward, proving that privacy and performance can coexist.

If you’re developing AI for healthcare, finance, or any privacy-critical domain, this technique should be part of your toolkit.

📣 Call-to-Action

Protect your federated models—don’t let privacy be your weakest link.

💡 Explore the Shadow Defense Code on GitHub

🔗 Paper Link: https://doi.org/10.1016/j.media.2025.103673

🧠 Want a custom implementation for your FL project? Let’s talk

🔗 Share this article to raise awareness—because privacy isn’t optional in AI.

Based on the paper’s description, I’ll implement the complete Shadow Defense framework. The key components include a shadow model (GAN-based) for generating defensive noise, interpretability modules for identifying sensitive regions, and a federated learning pipeline with privacy protection.

import torch

import torch.nn as nn

import torch.optim as optim

import torchvision.transforms as transforms

from torch.utils.data import DataLoader, Dataset

from torchvision.models import resnet18

from torch.autograd import Variable

import numpy as np

from PIL import Image

import cv2

import copy

# ======================

# Shadow Model (GAN-based)

# ======================

class Generator(nn.Module):

"""Generator network for shadow model (StyleGAN3 architecture simplified)"""

def __init__(self, z_dim=256, img_channels=1, hidden_dim=64):

super(Generator, self).__init__()

self.main = nn.Sequential(

# Initial block

nn.ConvTranspose2d(z_dim, hidden_dim*8, 4, 1, 0, bias=False),

nn.BatchNorm2d(hidden_dim*8),

nn.ReLU(True),

# Intermediate blocks

nn.ConvTranspose2d(hidden_dim*8, hidden_dim*4, 4, 2, 1, bias=False),

nn.BatchNorm2d(hidden_dim*4),

nn.ReLU(True),

nn.ConvTranspose2d(hidden_dim*4, hidden_dim*2, 4, 2, 1, bias=False),

nn.BatchNorm2d(hidden_dim*2),

nn.ReLU(True),

# Output block

nn.ConvTranspose2d(hidden_dim*2, img_channels, 4, 2, 1, bias=False),

nn.Tanh()

)

def forward(self, input):

return self.main(input)

class Discriminator(nn.Module):

"""Discriminator network for shadow model"""

def __init__(self, img_channels=1, hidden_dim=64):

super(Discriminator, self).__init__()

self.main = nn.Sequential(

# Input: img_channels x 224 x 224

nn.Conv2d(img_channels, hidden_dim, 4, 2, 1, bias=False),

nn.LeakyReLU(0.2, inplace=True),

nn.Conv2d(hidden_dim, hidden_dim*2, 4, 2, 1, bias=False),

nn.BatchNorm2d(hidden_dim*2),

nn.LeakyReLU(0.2, inplace=True),

nn.Conv2d(hidden_dim*2, hidden_dim*4, 4, 2, 1, bias=False),

nn.BatchNorm2d(hidden_dim*4),

nn.LeakyReLU(0.2, inplace=True),

nn.Conv2d(hidden_dim*4, hidden_dim*8, 4, 2, 1, bias=False),

nn.BatchNorm2d(hidden_dim*8),

nn.LeakyReLU(0.2, inplace=True),

# Output: 1 x 14 x 14

nn.Conv2d(hidden_dim*8, 1, 4, 1, 0, bias=False),

nn.Sigmoid()

)

def forward(self, input):

return self.main(input).view(-1, 1).squeeze(1)

# ======================

# Defense Framework

# ======================

class ShadowDefense:

def __init__(self, num_clients, img_size=224, z_dim=256, device='cuda'):

self.device = device

self.img_size = img_size

self.z_dim = z_dim

self.num_clients = num_clients

# Initialize shadow models (generator and discriminator)

self.generator = Generator(z_dim=z_dim).to(device)

self.discriminator = Discriminator().to(device)

# Latent codes for each client

self.latent_codes = [Variable(torch.randn(1, z_dim, 1, 1, device=device, requires_grad=True)

for _ in range(num_clients)]

# Momentum noise maps

self.momentum_noise = [None] * num_clients

# Hyperparameters

self.alpha_shadow = 0.5 # Momentum coefficient for shadow model

self.alpha_noise = 0.9 # Momentum coefficient for noise

self.alpha_cam_min = 0.1 # Min CAM influence

self.alpha_cam_max = 0.5 # Max CAM influence

self.alpha_N = 0.19 # Noise magnitude coefficient

self.T_cam = 0.3 # Top percentile for CAM

self.r_shadow = 20 # Terminal round for shadow updates

self.T = 1.0 # Temperature for softmax

# Optimizers

self.optim_G = optim.Adam(self.generator.parameters(), lr=0.0002, betas=(0.5, 0.999))

self.optim_D = optim.Adam(self.discriminator.parameters(), lr=0.0002, betas=(0.5, 0.999))

self.optim_z = [optim.Adam([z], lr=0.001) for z in self.latent_codes]

# Loss functions

self.criterion = nn.BCELoss()

self.mse_loss = nn.MSELoss()

def pretrain_shadow_model(self, public_dataset, epochs=10):

"""Pretrain shadow model on public dataset"""

dataloader = DataLoader(public_dataset, batch_size=32, shuffle=True)

for epoch in range(epochs):

for i, (real_imgs, _) in enumerate(dataloader):

real_imgs = real_imgs.to(self.device)

batch_size = real_imgs.size(0)

# Train Discriminator

self.optim_D.zero_grad()

# Real images

real_labels = torch.ones(batch_size, device=self.device)

output = self.discriminator(real_imgs)

errD_real = self.criterion(output, real_labels)

errD_real.backward()

# Fake images

noise = torch.randn(batch_size, self.z_dim, 1, 1, device=self.device)

fake_imgs = self.generator(noise)

fake_labels = torch.zeros(batch_size, device=self.device)

output = self.discriminator(fake_imgs.detach())

errD_fake = self.criterion(output, fake_labels)

errD_fake.backward()

errD = errD_real + errD_fake

self.optim_D.step()

# Train Generator

self.optim_G.zero_grad()

output = self.discriminator(fake_imgs)

errG = self.criterion(output, real_labels)

errG.backward()

self.optim_G.step()

print(f"Epoch [{epoch+1}/{epochs}] Loss D: {errD.item():.4f} Loss G: {errG.item():.4f}")

def pretrain_latent_codes(self, client_datasets, epochs=100):

"""Pretrain latent codes for each client"""

for client_idx in range(self.num_clients):

dataset = client_datasets[client_idx]

optimizer = self.optim_z[client_idx]

latent_code = self.latent_codes[client_idx]

for epoch in range(epochs):

total_loss = 0

for img, _ in dataset:

img = img.unsqueeze(0).to(self.device)

optimizer.zero_grad()

recon_img = self.generator(latent_code)

loss = self.mse_loss(recon_img, img)

loss.backward()

optimizer.step()

total_loss += loss.item()

# Early stopping

if epoch > 5 and total_loss < 0.01:

break

print(f"Client {client_idx} Latent Pretrain Loss: {total_loss/len(dataset):.4f}")

def generate_noise_map(self, original_img, recon_img):

"""Generate defensive noise map"""

# 1. Calculate MSE map

mse_map = torch.mean((original_img - recon_img) ** 2, dim=1, keepdim=True)

# 2. Apply softmax normalization

softmax_map = torch.softmax(mse_map.view(-1) / self.T, dim=0).view_as(mse_map)

N1 = 1.0 / (softmax_map + 1e-8)

# 3. Histogram equalization

N1_np = N1.squeeze().cpu().detach().numpy()

N1_np = (N1_np - N1_np.min()) / (N1_np.max() - N1_np.min()) * 255

N1_np = N1_np.astype(np.uint8)

N2_np = cv2.equalizeHist(N1_np)

N2 = torch.from_numpy(N2_np / 255.0).unsqueeze(0).unsqueeze(0).to(self.device)

# 4. Apply momentum update

if self.momentum_noise[0] is None:

self.momentum_noise = [N2.clone() for _ in range(self.num_clients)]

else:

N3 = self.alpha_noise * self.momentum_noise[0] + (1 - self.alpha_noise) * N2

self.momentum_noise[0] = N3

else:

N3 = N2 # First iteration

# 5. Reduce noise in task-dominant regions

L_cam = self._get_foreground_map(original_img) # Placeholder for Grad-CAM++

alpha_cam = min(self.alpha_cam_max, max(self.alpha_cam_min, current_epoch / total_epochs))

N4 = N3 - alpha_cam * torch.sign(N3) * L_cam

# 6. Scale noise

w_N = self.alpha_N * torch.exp(current_epoch / total_epochs)

N = (original_img.max() / N4.max().clamp(min=1e-8)) * w_N * N4

return N

def _get_foreground_map(self, img):

"""Generate foreground map using Grad-CAM++ (simplified)"""

# In practice, use actual Grad-CAM++ implementation

# Here we return a placeholder

return torch.zeros_like(img)

def pseudo_fine_tune(self, client_idx, victim_grads, bn_stats, current_round, total_rounds):

"""Pseudo fine-tune shadow model to mimic attacks"""

if current_round > self.r_shadow:

return self.generator.state_dict()

# Freeze latent codes

latent_code = self.latent_codes[client_idx].detach()

# Reconstruction loss components

for _ in range(5): # Few fine-tuning steps

self.optim_G.zero_grad()

# Generate reconstructed image

recon_img = self.generator(latent_code)

# Calculate losses (simplified)

D_loss = self._gradient_distance(recon_img, victim_grads)

TV_loss = self._total_variation(recon_img)

BN_loss = self._bn_stat_loss(recon_img, bn_stats)

L2_loss = torch.norm(recon_img)

MSE_loss = self.mse_loss(recon_img, original_img)

total_loss = D_loss + TV_loss + BN_loss + L2_loss + MSE_loss

total_loss.backward()

self.optim_G.step()

# Momentum update for shadow model

shadow_state = copy.deepcopy(self.generator.state_dict())

if current_round > 0:

for key in shadow_state:

shadow_state[key] = (self.alpha_shadow * prev_shadow_state[key] +

(1 - self.alpha_shadow) * shadow_state[key])

prev_shadow_state = shadow_state

return shadow_state

def _gradient_distance(self, recon_img, victim_grads):

"""Distance between reconstructed and victim gradients"""

# Simplified implementation

return torch.tensor(0.0, device=self.device)

def _total_variation(self, img):

"""Total variation regularization"""

diff_i = torch.abs(img[:, :, 1:, :] - img[:, :, :-1, :]).sum()

diff_j = torch.abs(img[:, :, :, 1:] - img[:, :, :, :-1]).sum()

return (diff_i + diff_j) / (img.size(2) * img.size(3))

def _bn_stat_loss(self, img, bn_stats):

"""BN statistics regularization loss"""

# Simplified implementation

return torch.tensor(0.0, device=self.device)

# ======================

# Federated Learning Setup

# ======================

class FederatedLearning:

def __init__(self, num_clients, defense):

self.num_clients = num_clients

self.defense = defense

self.global_model = resnet18(pretrained=True)

self.global_model.fc = nn.Linear(512, 2) # Binary classification

self.client_models = [copy.deepcopy(self.global_model) for _ in range(num_clients)]

self.optimizers = [optim.SGD(model.parameters(), lr=0.01) for model in self.client_models]

def federated_train(self, client_datasets, global_rounds=100, local_epochs=1):

for round_idx in range(global_rounds):

client_updates = []

for client_idx in range(self.num_clients):

# 1. Download global model

self.client_models[client_idx].load_state_dict(self.global_model.state_dict())

# 2. Pseudo local training (get victim gradients)

victim_grads, bn_stats = self._pseudo_train(client_idx, client_datasets[client_idx])

# 3. Pseudo fine-tune shadow model

shadow_state = self.defense.pseudo_fine_tune(

client_idx, victim_grads, bn_stats, round_idx, global_rounds

)

# 4. Generate defensive noise

noise_maps = []

for img, _ in client_datasets[client_idx]:

with torch.no_grad():

recon_img = self.defense.generator(self.defense.latent_codes[client_idx])

noise_map = self.defense.generate_noise_map(img, recon_img)

noise_maps.append(noise_map)

# 5. Actual local training with noisy images

updated_model = self._local_train(

client_idx, client_datasets[client_idx], noise_maps, local_epochs

)

client_updates.append(updated_model.state_dict())

# 6. Aggregate updates (FedAvg)

self._aggregate(client_updates)

def _pseudo_train(self, client_idx, dataset):

"""Pseudo training to get victim gradients"""

model = self.client_models[client_idx]

model.train()

# Store gradients and BN statistics

gradients = []

bn_stats = []

for img, label in DataLoader(dataset, batch_size=4):

output = model(img)

loss = nn.CrossEntropyLoss()(output, label)

loss.backward()

# Collect gradients

grad_vec = []

for param in model.parameters():

if param.grad is not None:

grad_vec.append(param.grad.view(-1))

gradients.append(torch.cat(grad_vec))

# Collect BN statistics (simplified)

bn_stats.append(torch.tensor([0.0])) # Actual implementation would collect real stats

return torch.mean(torch.stack(gradients), torch.mean(torch.stack(bn_stats))

def _local_train(self, client_idx, dataset, noise_maps, epochs):

"""Actual local training with defensive noise"""

model = self.client_models[client_idx]

optimizer = self.optimizers[client_idx]

for epoch in range(epochs):

for (img, label), noise_map in zip(dataset, noise_maps):

# Add defensive noise

noisy_img = img + noise_map

# Forward pass

output = model(noisy_img)

loss = nn.CrossEntropyLoss()(output, label)

# Backward pass

optimizer.zero_grad()

loss.backward()

optimizer.step()

return model

def _aggregate(self, client_updates):

"""FedAvg aggregation"""

global_dict = self.global_model.state_dict()

for key in global_dict:

global_dict[key] = torch.stack(

[update[key] for update in client_updates], dim=0

).mean(dim=0)

self.global_model.load_state_dict(global_dict)

# ======================

# Main Execution

# ======================

if __name__ == "__main__":

# Initialize defense system

defense = ShadowDefense(num_clients=9, img_size=224, device='cuda')

# Initialize federated learning

fl = FederatedLearning(num_clients=9, defense=defense)

# Pretraining (using public datasets in actual implementation)

# defense.pretrain_shadow_model(public_dataset)

# defense.pretrain_latent_codes(client_datasets)

# Federated training

fl.federated_train(client_datasets, global_rounds=100, local_epochs=1)