Introduction: Unlocking the Secrets of Motion Perception

Understanding how the brain processes motion is not just a fascinating scientific endeavor—it’s crucial for fields ranging from neuroscience to artificial intelligence. A recent study titled “Energy efficiency and sensitivity benefits in a motion processing adaptive recurrent neural network” sheds light on how neural adaptation enhances motion processing, offering both theoretical and practical implications. This article dives deep into the findings of this study, exploring how adaptive mechanisms in neural networks can mimic biological systems, improve energy efficiency, and enhance sensitivity to environmental changes.

1. The Biological Basis of Motion Processing

Motion processing is a fundamental cognitive function that allows organisms to navigate and interact with their environment effectively. In primates, the primary visual cortex (V1) and the middle temporal area (V5/MT) play pivotal roles in this hierarchical process. V1 detects local motion, while MT integrates these signals to perceive global motion.

Key Concepts:

- V1 Neurons : Detect local motion and are sensitive to lower speeds.

- MT Neurons : Integrate local signals to compute global motion and are tuned to higher speeds.

The study found that units in both the V1-like and MT-like layers of the networks developed response properties similar to those of their biological counterparts. This alignment supports the biological plausibility of the models used in the research.
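The division of labor between V1 and MT is often illustrated with the aperture problem. A unit with a small receptive field sees only the component of motion perpendicular to a local edge, and combining several such one-dimensional measurements recovers the global two-dimensional velocity. The sketch below is a toy intersection-of-constraints example in NumPy, not the networks from the study. The orientations and velocity are arbitrary, and the function names are hypothetical.

```python
import numpy as np

def local_measurements(velocity, orientations):
    """Hypothetical V1-like units: each sees only the speed along its own normal direction."""
    normals = np.stack([np.cos(orientations), np.sin(orientations)], axis=1)  # (N, 2)
    return normals, normals @ velocity  # (N, 2) normals, (N,) normal speeds

def integrate_global(normals, normal_speeds):
    """Hypothetical MT-like integration: least-squares intersection of constraints."""
    velocity, *_ = np.linalg.lstsq(normals, normal_speeds, rcond=None)
    return velocity

true_velocity = np.array([3.0, -1.0])
orientations = np.deg2rad([0.0, 45.0, 90.0, 135.0])
normals, speeds = local_measurements(true_velocity, orientations)
print(speeds)                              # each local signal is only a 1D projection
print(integrate_global(normals, speeds))   # recovers approximately (3, -1)
```

No single local measurement equals the true velocity; only their combination does, which is the role the article assigns to MT.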

2. The Role of Neural Adaptation in Motion Processing

Adaptation is a canonical function of sensory processing, allowing the brain to adjust to constant stimuli and remain sensitive to changes in the environment. One of the best-known manifestations of motion adaptation is the motion aftereffect (MAE), or “waterfall illusion,” in which prolonged exposure to motion in one direction makes stationary stimuli appear to move in the opposite direction.

Key Findings:

- Adaptation in Biological Systems : Enhances sensitivity to changes and conserves metabolic resources.

- Adaptation in Artificial Neural Networks : Mimics biological mechanisms, improving energy efficiency and responsiveness to motion changes.

In the study, the adaptive network AdaptNet successfully replicated the MAE phenomenon, providing a computational explanation for this perceptual illusion.

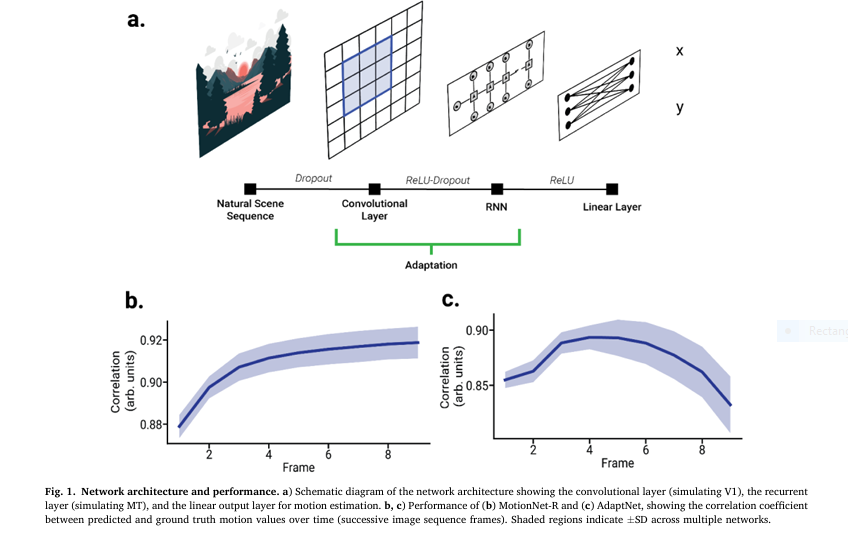

3. Building Adaptive Neural Networks: MotionNet-R vs. AdaptNet

To investigate the role of adaptation in motion processing, researchers developed two recurrent neural networks:

- MotionNet-R : A baseline model without adaptive mechanisms.

- AdaptNet : An adaptive model inspired by biological systems.

Both networks were trained on natural image sequences to estimate motion vectors, allowing researchers to compare their performance in terms of accuracy, efficiency, and sensitivity.
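The study's exact data pipeline is not described here. As a minimal sketch, assuming whole-pixel translations, a training example can be built by shifting an image by a known velocity on every frame and pairing consecutive frames with that velocity as the target. `make_translation_sequence` is a hypothetical helper, and `torch.rand` stands in for a natural image patch.

```python
import torch

def make_translation_sequence(image, vx, vy, n_frames=4):
    """Hypothetical helper: shift `image` (H, W) by (vx, vy) whole pixels per frame.
    Positive vx moves content right, positive vy moves it down.
    torch.roll wraps pixels around the border, which is a simplification."""
    frames = [torch.roll(image, shifts=(t * vy, t * vx), dims=(0, 1)) for t in range(n_frames)]
    return torch.stack(frames)  # (n_frames, H, W)

image = torch.rand(32, 32)  # stand-in for a natural image patch
seq = make_translation_sequence(image, vx=2, vy=-1)

# Pair consecutive frames as 2-channel inputs, matching a (B, 2, H, W) layout.
pairs = torch.stack([seq[:-1], seq[1:]], dim=1)               # (3, 2, 32, 32)
target = torch.tensor([2.0, -1.0]).expand(pairs.shape[0], 2)  # same velocity for every pair
print(pairs.shape, target.shape)
```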

Network Architecture:

- Convolutional Layer (V1 Simulation) : Processes spatial information.

- Recurrent Layer (MT Simulation) : Analyzes temporal information.

- Linear Output Layer : Estimates motion velocity.
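To show how the three stages fit together over time, here is a minimal sketch that unrolls a convolutional "V1" stage, a recurrent "MT" stage and a linear read-out across a frame sequence. It is a simplification, not the study's architecture: the layer sizes are assumptions, V1 features are spatially averaged before the recurrence, and PyTorch's `nn.RNNCell` stands in for the recurrent layer. `TinyMotionRNN` is a hypothetical name.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

class TinyMotionRNN(nn.Module):
    """Hypothetical sizes: conv 'V1' -> recurrent 'MT' -> linear read-out."""

    def __init__(self, v1_channels=16, mt_units=32):
        super().__init__()
        self.v1 = nn.Conv2d(2, v1_channels, kernel_size=6)              # spatial filtering of a frame pair
        self.mt = nn.RNNCell(v1_channels, mt_units, nonlinearity="relu")  # temporal integration
        self.out = nn.Linear(mt_units, 2)                                 # (vx, vy)

    def forward(self, frames):
        """frames: (B, T, H, W); returns one velocity estimate per frame pair, (B, T-1, 2)."""
        h, estimates = None, []
        for t in range(1, frames.shape[1]):
            pair = frames[:, t - 1:t + 1]                  # (B, 2, H, W): frames t-1 and t
            v1 = F.relu(self.v1(pair)).mean(dim=(2, 3))    # spatially pooled V1 features, (B, C)
            h = self.mt(v1, h)                             # MT state carries information across frames
            estimates.append(self.out(h))
        return torch.stack(estimates, dim=1)

model = TinyMotionRNN()
frames = torch.randn(4, 6, 32, 32)
print(model(frames).shape)  # torch.Size([4, 5, 2])
```

Because the hidden state `h` is reused at every step, later estimates can draw on evidence from earlier frames, which is what the recurrent layer contributes.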

Equations:

The operation of the convolutional layer is defined as:

$$Y[i,j,t] = \sum_{m} \sum_{n} \sum_{k} K[m,n,k] \, X[i+m,\, j+n,\, t+k]$$

where $Y$ is the output, $X$ is the input, and $K$ is the kernel.
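The triple sum is an ordinary sum of products (a cross-correlation), which is what deep-learning "convolution" layers compute. As a quick check, the sketch below evaluates the sum with explicit loops for a single output time step and compares it with PyTorch's `F.conv2d`. It assumes the kernel's temporal extent is two frames, stored along the first axis as input channels, which matches the two-frame input used by the code at the end of this article. `conv_by_sum` is a hypothetical helper.

```python
import torch
import torch.nn.functional as F

def conv_by_sum(X, K):
    """Direct evaluation of Y[i, j] = sum_{m,n,k} K[k, m, n] * X[k, i+m, j+n].
    X: (T, H, W) frames along k; K: (T, M, N). Valid positions only (no padding)."""
    _, H, W = X.shape
    _, M, N = K.shape
    Y = torch.zeros(H - M + 1, W - N + 1)
    for i in range(H - M + 1):
        for j in range(W - N + 1):
            Y[i, j] = (K * X[:, i:i + M, j:j + N]).sum()
    return Y

X = torch.randn(2, 8, 8)  # two frames (t and t+1) treated as input channels
K = torch.randn(2, 3, 3)
Y_loop = conv_by_sum(X, K)
Y_torch = F.conv2d(X.unsqueeze(0), K.unsqueeze(0)).squeeze()  # (1, 1, 6, 6) -> (6, 6)
print(torch.allclose(Y_loop, Y_torch, atol=1e-5))  # True
```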

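The adaptation mechanism in AdaptNet is modeled using:

$$y_{\text{V1}}^t = \max\left(0,\; s_{\text{V1}}^t - a_{\text{V1}}^{t-1}\right)$$

and

$$a_{\text{V1}}^t = (1 - \beta_{\text{V1}})\, a_{\text{V1}}^{t-1} + \alpha_{\text{V1}}\, y_{\text{V1}}^t$$

where $s_{\text{V1}}^t$ is a unit's input drive at time $t$, $y_{\text{V1}}^t$ its output, $a_{\text{V1}}^t$ its adaptation state, $\beta_{\text{V1}}$ the rate at which adaptation decays, and $\alpha_{\text{V1}}$ the rate at which the unit's own activity builds it up. Analogous equations apply to the MT layer.

4. Performance Comparison: MotionNet-R vs. AdaptNet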

The study revealed significant differences between the two networks in terms of performance, energy efficiency, and sensitivity.

Motion Estimation Accuracy:

- MotionNet-R : Accuracy improved over successive frames thanks to temporal integration.

- AdaptNet : Accuracy initially increased but later declined as adaptation reduced the network's responses.

Energy Efficiency:

- AdaptNet : Showed reduced activation levels, indicating lower energy consumption.

- MotionNet-R : Maintained higher activation levels, leading to increased energy expenditure.
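A single unit following the adaptation rule $y^t = \max(0, s^t - a^{t-1})$, $a^t = (1-\beta)a^{t-1} + \alpha y^t$ makes both effects concrete. Under a constant stimulus its response sinks, which lowers total activity but also weakens the signal a fixed read-out would use to estimate speed. This is a scalar toy, not the trained networks; $\alpha = 0.2$ and $\beta = 0.1$ are arbitrary values, and setting $\alpha = 0$ gives a non-adapting comparison.

```python
def simulate_unit(drive, alpha=0.2, beta=0.1):
    """Toy adapting unit: y_t = max(0, s_t - a_{t-1}); a_t = (1 - beta) * a_{t-1} + alpha * y_t."""
    a, responses = 0.0, []
    for s in drive:
        y = max(0.0, s - a)              # response is reduced by the accumulated adaptation
        a = (1 - beta) * a + alpha * y   # adaptation decays and is recharged by activity
        responses.append(y)
    return responses

drive = [1.0] * 50                           # constant stimulus for 50 frames
adapted = simulate_unit(drive)
baseline = simulate_unit(drive, alpha=0.0)   # alpha = 0: no adaptation
print(round(adapted[0], 3), round(adapted[-1], 3))  # 1.0 0.333
print(sum(adapted) / sum(baseline))                 # total activity relative to the non-adapting unit
```

Setting $a^t = a^{t-1} = a^*$ in the update gives the steady state analytically: $a^* = (\alpha/\beta)\,y^*$, so $y^* = s\,\beta/(\alpha+\beta)$, which is one third of the input for these values.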

Sensitivity to Motion Changes:

- AdaptNet : Demonstrated superior sensitivity to motion changes, particularly in dynamic environments.

- MotionNet-R : More accurate in constant motion scenarios but less responsive to sudden changes.
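The same kind of toy unit suggests why adaptation can sharpen responses to change. After adapting to a steady input, a step increase produces a larger jump relative to the ongoing response than it does in a non-adapting unit. This ratio is one simple, assumed way to quantify sensitivity; the study's own measure may differ, and the parameter values are arbitrary.

```python
def respond(drive, alpha=0.2, beta=0.1):
    """Toy adapting unit; alpha = 0 disables adaptation."""
    a, out = 0.0, []
    for s in drive:
        y = max(0.0, s - a)              # adapted response
        a = (1 - beta) * a + alpha * y   # adaptation state update
        out.append(y)
    return out

drive = [1.0] * 30 + [1.5] * 5  # the input steps up at frame index 30
ratios = {}
for name, alpha in [("adaptive", 0.2), ("non-adaptive", 0.0)]:
    y = respond(drive, alpha=alpha)
    ratios[name] = y[30] / y[29]  # response just after the change relative to just before
print(ratios)
```

With these values the adaptive unit's response jumps about 2.5-fold at the change versus 1.5-fold without adaptation, because its adapted baseline is only a third of the input.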

5. The Motion Aftereffect: A Computational Explanation

One of the most compelling findings of the study was the replication of the motion aftereffect (MAE) in AdaptNet. When exposed to a sequence of moving stimuli followed by a stationary phase, AdaptNet exhibited an overshoot in motion estimation, predicting motion in the opposite direction—mirroring the waterfall illusion.

Mechanism Behind MAE:

- Adaptation-Induced Imbalance : Prolonged activation of motion-selective neurons leads to a temporary imbalance in mutual suppression.

- Recovery Dynamics : Units tuned to the adapted direction recover at different rates, resulting in the perception of illusory motion.

This finding aligns with previous hypotheses that MAE arises from the adaptation of motion-sensitive neurons, supporting the biological validity of the model.
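A two-unit toy reproduces the aftereffect. One unit prefers rightward motion and one leftward, each adapting by the same rule, and the read-out is the difference of their responses. This is a simplification: it uses an opponent read-out rather than the mutual suppression described above, and the drives, durations and rates are arbitrary.

```python
def step(s, a, alpha=0.2, beta=0.1):
    """One time step of a toy adapting unit; returns (response, new adaptation state)."""
    y = max(0.0, s - a)
    return y, (1 - beta) * a + alpha * y

a_right = a_left = 0.0
# Adaptation phase: 40 frames of rightward motion drive only the right-tuned unit.
for _ in range(40):
    _, a_right = step(1.0, a_right)
    _, a_left = step(0.0, a_left)

# Test phase: a stationary pattern drives both units equally.
readout = []
for _ in range(30):
    y_right, a_right = step(0.5, a_right)
    y_left, a_left = step(0.5, a_left)
    readout.append(y_right - y_left)  # > 0 means rightward, < 0 means leftward

print(readout[0])                             # -0.5: illusory leftward motion
print(abs(readout[-1]) < abs(readout[0]))     # True: the aftereffect fades as adaptation recovers
```

The stationary test pattern drives both units equally, yet the adapted right-tuned unit is still suppressed, so the read-out signals motion in the opposite direction until the two adaptation states converge.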

6. Efficiency and Sensitivity: The Trade-Off in Neural Processing

The study highlights a trade-off in neural processing. Adaptation conserves energy by reducing neuronal firing in response to constant stimuli and, at the same time, heightens sensitivity to novel or changing inputs; the price is lower accuracy when motion stays constant, where the non-adaptive MotionNet-R performs better.

Key Insights:

- Metabolic Efficiency : Adaptation reduces overall neural activity, lowering energy consumption.

- Sensitivity to Change : Adapted neurons respond more robustly to new stimuli, improving detection of environmental changes.

These findings have implications for both biological and artificial systems, suggesting that adaptive mechanisms can optimize resource allocation and improve responsiveness.

7. Implications for Artificial Intelligence and Machine Learning

The study’s results have significant implications for the development of adaptive neural networks in artificial intelligence. By incorporating biological principles of adaptation, AI systems can achieve greater efficiency and responsiveness, particularly in dynamic environments.

Potential Applications:

- Autonomous Vehicles : Improved motion detection in changing traffic conditions.

- Robotics : Enhanced adaptability to new environments and tasks.

- Computer Vision : More efficient processing of visual information with reduced computational costs.

Future Directions:

- Spiking Neural Networks : Incorporating more biologically realistic neuron models.

- 3D Motion Processing : Extending the framework to handle complex visual stimuli.

- Cross-Modal Adaptation : Exploring how adaptation influences multisensory integration.

Conclusion: The Power of Adaptation in Motion Processing

The study provides compelling evidence that neural adaptation plays a crucial role in motion processing, enhancing both energy efficiency and sensitivity to environmental changes. By comparing adaptive and non-adaptive neural networks, researchers have demonstrated how biological principles can inform the design of more effective AI systems.


Final Thoughts:

- Adaptation is Key : Enhances sensitivity to changes while conserving energy.

- Biological Inspiration : Offers valuable insights for developing adaptive AI.

- Future Potential : Adaptive networks could revolutionize fields like robotics and computer vision.

Call to Action: Stay Ahead in the World of AI and Neuroscience

If you’re fascinated by the intersection of neuroscience and artificial intelligence, don’t miss out on the latest research and insights. Subscribe to our newsletter to receive updates on cutting-edge studies, AI breakthroughs, and practical applications of adaptive neural networks. Whether you’re a researcher, developer, or tech enthusiast, staying informed will keep you ahead of the curve.

Ready to dive deeper? Explore the full study on ScienceDirect and discover how adaptation is shaping the future of intelligent systems.

Below is a simplified, self-contained PyTorch sketch of the two models described in the paper: MotionNet-R (baseline) and AdaptNet (with adaptation). It simplifies the paper's models, and the layer sizes and adaptation rates are illustrative.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim


class MotionNet_R(nn.Module):
    """
    Baseline recurrent model without adaptation.

    Architecture:
        V1  : 2D conv layer (16 kernels 6x6, stride 1)
        MT  : simple convolutional recurrent layer (32 channels)
        Out : linear read-out (x, y velocity)
    """

    def __init__(self):
        super().__init__()
        # 2 input channels: frames t-1 and t
        self.v1 = nn.Conv2d(2, 16, kernel_size=6, stride=1, padding=0)
        # MT: feed-forward drive from V1 plus recurrent drive from the previous MT state
        self.mt_in = nn.Conv2d(16, 32, kernel_size=3, padding=1)
        self.mt_rec = nn.Conv2d(32, 32, kernel_size=3, padding=1)
        self.out = nn.Linear(32, 2)  # vx, vy

    def forward(self, x, h=None):
        """
        x : (B, 2, H, W) two-frame input
        h : (B, 32, H-5, W-5) MT state from the previous time step, or None
        returns (velocity (B, 2), new MT state)
        """
        v1 = F.relu(self.v1(x))             # (B, 16, H-5, W-5)
        drive = self.mt_in(v1)              # (B, 32, H-5, W-5); padding=1 keeps the size
        if h is not None:
            drive = drive + self.mt_rec(h)  # recurrent input
        mt = F.relu(drive)
        pooled = F.adaptive_avg_pool2d(mt, 1).flatten(1)  # global average pooling -> (B, 32)
        vel = self.out(pooled)              # (B, 2)
        return vel, mt                      # mt is the next hidden state


class AdaptNet(MotionNet_R):
    """
    MotionNet-R + adaptation variables in the V1 and MT layers.

    Adaptation equations (simplified from paper):
        y_t = ReLU(s_t - a_{t-1})                 # adapted output
        a_t = (1 - beta) * a_{t-1} + alpha * y_t  # adaptation state
    """

    def __init__(self, alpha_v1=0.1, beta_v1=0.1, alpha_mt=0.2, beta_mt=0.1):
        super().__init__()
        # adaptation hyper-parameters
        self.alpha_v1, self.beta_v1 = alpha_v1, beta_v1
        self.alpha_mt, self.beta_mt = alpha_mt, beta_mt

    def init_state(self, B, H, W, device=None):
        """Zeroed (v1_a, mt_a, h) for a V1 output of size H x W."""
        return (torch.zeros(B, 16, H, W, device=device),   # V1 adaptation
                torch.zeros(B, 32, H, W, device=device),   # MT adaptation
                torch.zeros(B, 32, H, W, device=device))   # MT hidden state

    def forward(self, x, states):
        v1_a, mt_a, h = states  # previous adaptation variables and MT state

        # ----- V1 adaptation -----
        s_v1 = self.v1(x)                                   # pre-activation
        y_v1 = F.relu(s_v1 - v1_a)                          # adapted activity
        v1_a = (1 - self.beta_v1) * v1_a + self.alpha_v1 * y_v1

        # ----- MT adaptation (with recurrence) -----
        s_mt = self.mt_in(y_v1) + self.mt_rec(h)
        y_mt = F.relu(s_mt - mt_a)
        mt_a = (1 - self.beta_mt) * mt_a + self.alpha_mt * y_mt

        pooled = F.adaptive_avg_pool2d(y_mt, 1).flatten(1)  # global average pooling
        vel = self.out(pooled)
        return vel, (v1_a, mt_a, y_mt)


B, H, W = 4, 32, 32
x = torch.randn(B, 2, H, W)

# --- MotionNet-R ---
net_r = MotionNet_R()
vel_r, h_r = net_r(x)
vel_r, h_r = net_r(x, h_r)  # second step reuses the hidden state
print("MotionNet-R output:", vel_r.shape)  # torch.Size([4, 2])

# --- AdaptNet ---
net_a = AdaptNet()
states = net_a.init_state(B, H - 5, W - 5, x.device)
vel_a, states = net_a(x, states)
print("AdaptNet output:", vel_a.shape)  # torch.Size([4, 2])

# --- one training step on dummy data ---
criterion = nn.MSELoss()
optimizer = optim.Adam(net_a.parameters(), lr=1e-3)
target = torch.randn(B, 2)  # dummy ground-truth velocities

states = tuple(s.detach() for s in states)  # don't backpropagate into the previous step
pred, states = net_a(x, states)
loss = criterion(pred, target)
optimizer.zero_grad()
loss.backward()
optimizer.step()
```