Introduction: A Breakthrough in Medical Imaging with BCP

In the ever-evolving field of medical imaging, precision and efficiency are paramount. The ability to accurately segment anatomical structures from CT or MRI scans is crucial for diagnosis, treatment planning, and research. However, the process of manually labeling these images is both time-consuming and expensive. Enter semi-supervised learning, a promising approach that leverages both labeled and unlabeled data to improve segmentation accuracy without requiring extensive manual annotation.

A recent paper titled “Bidirectional Copy-Paste for Semi-Supervised Medical Image Segmentation” introduces a novel method known as BCP (Bidirectional Copy-Paste) that significantly outperforms existing techniques. In fact, it improves the Dice score by more than 21 percentage points on the ACDC dataset when only 5% of the data is labeled, an impressive leap forward in the realm of medical image analysis.

But how does BCP work? Why is it so effective compared to other semi-supervised approaches? And what challenges still remain?

Let’s dive deep into this groundbreaking technique and explore its implications for the future of medical imaging.

Understanding the Problem: Distribution Mismatch in Semi-Supervised Learning

Why Is Semi-Supervised Learning Important in Medicine?

Medical image segmentation traditionally relies on supervised learning, where each training sample must be manually annotated by experts. This is not only labor-intensive but also costly, especially for large-scale datasets.

Semi-supervised learning addresses this challenge by combining a small amount of labeled data with a much larger pool of unlabeled images. The goal is to train a model that can generalize well across both sets.

However, a major issue arises from the distribution mismatch between labeled and unlabeled data:

- Labeled data is scarce, so it covers only a narrow subset of the overall population.

- Unlabeled data, while abundant, may differ in quality, imaging conditions, or patient demographics.

Even when both sets come from the same source, a handful of labeled scans yields an empirical distribution that can differ noticeably from that of the large unlabeled pool. This mismatch can lead to poor generalization, where models trained mainly on labeled data fail to perform well on unlabeled samples.
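A toy simulation makes the *empirical* side of this mismatch concrete. In the sketch below, every scan is drawn from the same population, and a single hypothetical per-scan feature (think of it as mean intensity) is compared between the labeled and unlabeled subsets; only the number of labeled scans changes. The standard normal population and the 80-scan pool are illustrative assumptions, not data from the paper.

```python
import numpy as np


def mean_gap(n_labeled, n_total=80, trials=2000, seed=0):
    """Average |mean(labeled) - mean(unlabeled)| of a per-scan feature.

    Every scan's feature is drawn from the SAME standard normal population,
    so any gap between the subsets is purely a sampling effect of how many
    scans happen to be labeled. All numbers here are illustrative assumptions.
    """
    rng = np.random.default_rng(seed)
    gaps = np.empty(trials)
    for t in range(trials):
        feature = rng.standard_normal(n_total)  # hypothetical per-scan feature
        order = rng.permutation(n_total)        # random labeled/unlabeled split
        labeled = feature[order[:n_labeled]]
        unlabeled = feature[order[n_labeled:]]
        gaps[t] = abs(labeled.mean() - unlabeled.mean())
    return float(gaps.mean())


# 4 of 80 scans labeled (5%) vs. 40 of 80 labeled (50%)
small, large = mean_gap(4), mean_gap(40)
print(f"5% labeled: {small:.3f}   50% labeled: {large:.3f}")
```

With 5% labeled, the gap comes out more than twice as large as with 50% labeled (in theory about 0.41 versus 0.18 standard deviations), even though nothing about the underlying population has changed.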

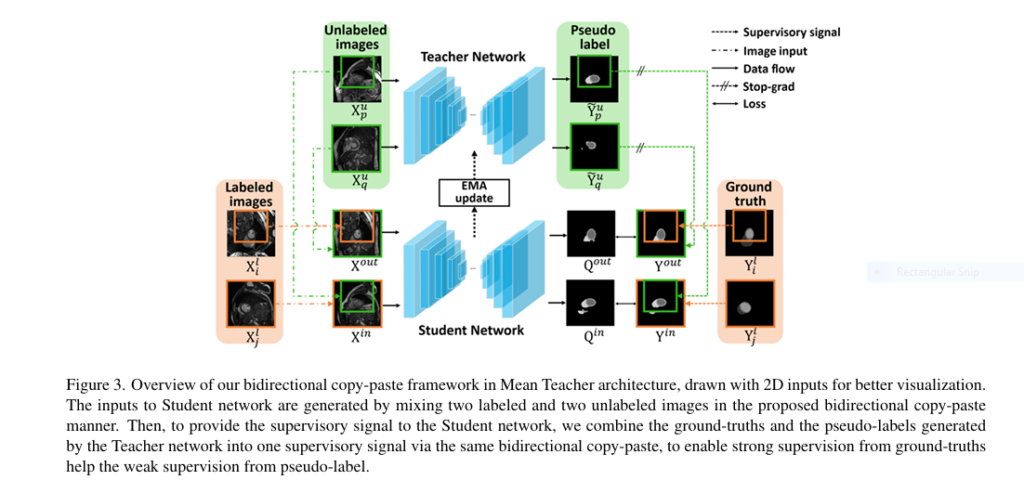

The BCP Solution: Bidirectional Copy-Paste in Mean Teacher Architecture

What Is Bidirectional Copy-Paste (BCP)?

BCP is a simple yet powerful augmentation strategy designed to bridge the gap between labeled and unlabeled data distributions. It operates within the Mean Teacher framework, a popular architecture in semi-supervised learning.
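In the Mean Teacher setup, only the Student is trained by gradient descent; the Teacher's weights follow an exponential moving average (EMA) of the Student's, and the Teacher supplies the pseudo-labels. The minimal sketch below shows that update with plain NumPy arrays standing in for network weights; the dict-of-arrays representation and the parameter name are illustrative assumptions (in PyTorch the same rule is applied to each parameter tensor).

```python
import numpy as np


def ema_update(teacher, student, decay=0.99):
    """Mean Teacher rule: teacher <- decay * teacher + (1 - decay) * student.

    `teacher` and `student` are dicts mapping a (hypothetical) parameter name
    to a NumPy array; the teacher dict is updated in place and returned.
    """
    for name, w_student in student.items():
        teacher[name] = decay * teacher[name] + (1.0 - decay) * w_student
    return teacher


teacher = {"conv.weight": np.array([1.0, 1.0])}
student = {"conv.weight": np.array([0.0, 2.0])}
ema_update(teacher, student, decay=0.99)
print(teacher["conv.weight"])  # approximately [0.99 1.01]
```

A decay close to 1 makes the Teacher a slowly changing, smoothed copy of the Student, which tends to give steadier pseudo-labels than the Student's own predictions.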

Here’s how it works:

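1. Two Labeled & Two Unlabeled Images Are Selected

   - Labeled images $X^l_i$, $X^l_j$ (with ground-truth labels $Y^l_i$, $Y^l_j$) and unlabeled images $X^u_p$, $X^u_q$ are drawn for each training step.

   - In one mixed image, a labeled image provides the foreground (the pasted crop) and an unlabeled image the background.

   - In the other, the roles are reversed: an unlabeled image provides the foreground and a labeled image the background.

2. Copy-Paste Operation

   - A binary mask $M$ is 0 inside a crop region of size $\beta W \times \beta H \times \beta L$ and 1 elsewhere.

   - A crop from a labeled image (foreground) is pasted onto an unlabeled image (background), and a crop from an unlabeled image (foreground) is pasted onto a labeled image (background):

   $$X^{in} = X^l_j \odot M + X^u_p \odot (1 - M), \qquad X^{out} = X^u_q \odot M + X^l_i \odot (1 - M)$$

3. Mixed Images Are Fed Into the Student Network

   - The Student network produces predictions $Q^{in}$ and $Q^{out}$ for the two mixed images.

   - The supervisory signals are mixed with the same mask, combining ground-truth labels with pseudo-labels $\hat{Y}^u$ generated by the Teacher network:

   $$Y^{in} = Y^l_j \odot M + \hat{Y}^u_p \odot (1 - M), \qquad Y^{out} = \hat{Y}^u_q \odot M + Y^l_i \odot (1 - M)$$

4. Loss Function Design

   - The segmentation loss $\mathcal{L}_{seg}$ (a combination of Dice and cross-entropy) is applied region by region, with regions that come from unlabeled images scaled by $\alpha$:

   $$\mathcal{L}^{in} = \mathcal{L}_{seg}(Q^{in}, Y^{in}) \odot M + \alpha\,\mathcal{L}_{seg}(Q^{in}, Y^{in}) \odot (1 - M)$$

   $$\mathcal{L}^{out} = \mathcal{L}_{seg}(Q^{out}, Y^{out}) \odot (1 - M) + \alpha\,\mathcal{L}_{seg}(Q^{out}, Y^{out}) \odot M$$

   - The Student is trained on $\mathcal{L}^{in} + \mathcal{L}^{out}$.

Where:

- $Q$: predictions from the Student network

- $Y$: mixed supervisory signals (ground truth plus Teacher pseudo-labels)

- $M$: binary mask, 1 where a voxel comes from the background image and 0 inside the pasted crop

- $\alpha$: weight controlling the contribution of unlabeled regions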
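The copy-paste and weighting steps above can be sketched in a few lines of NumPy on small 2D arrays. This is an illustrative sketch, not the authors' code: the helper names (`bcp_mask`, `bidirectional_copy_paste`, `weighted_ce`, `bcp_loss`) are hypothetical, the crop is placed at the center, `beta` is assumed to be the crop's side length as a fraction of the image size, and the loss uses cross-entropy only (the PyTorch outline at the end of this article also adds a Dice term).

```python
import numpy as np


def bcp_mask(shape, beta):
    """Hypothetical helper: M = 0 inside a centered crop of side int(beta * dim), 1 elsewhere."""
    mask = np.ones(shape, dtype=np.float32)
    crop = []
    for dim in shape:
        size = int(dim * beta)
        start = (dim - size) // 2
        crop.append(slice(start, start + size))
    mask[tuple(crop)] = 0.0
    return mask


def bidirectional_copy_paste(x_l_i, y_l_i, x_l_j, y_l_j, x_u_p, yhat_u_p, x_u_q, yhat_u_q, beta):
    """Mix two labeled (i, j) and two unlabeled (p, q) images in both directions.

    y_* are ground-truth labels, yhat_* are Teacher pseudo-labels (integer maps).
    """
    m = bcp_mask(x_l_i.shape, beta)
    keep = m == 1                        # voxels taken from the background image
    x_in = x_l_j * m + x_u_p * (1 - m)   # unlabeled crop pasted onto labeled image j
    x_out = x_u_q * m + x_l_i * (1 - m)  # labeled crop pasted onto unlabeled image q
    y_in = np.where(keep, y_l_j, yhat_u_p)
    y_out = np.where(keep, yhat_u_q, y_l_i)
    return x_in, y_in, x_out, y_out, m


def weighted_ce(probs, labels, weights):
    """Voxel-wise cross-entropy times a weight map, averaged over all voxels.

    probs: (C, H, W) class probabilities; labels: (H, W) integer class ids.
    """
    p_true = np.take_along_axis(probs, labels[None], axis=0)[0]
    return float((-np.log(np.clip(p_true, 1e-8, 1.0)) * weights).mean())


def bcp_loss(q_in, y_in, q_out, y_out, m, alpha=0.5):
    """L_in + L_out with unlabeled regions scaled by alpha (cross-entropy only)."""
    loss_in = weighted_ce(q_in, y_in, m + alpha * (1 - m))     # labeled part of x_in is M = 1
    loss_out = weighted_ce(q_out, y_out, (1 - m) + alpha * m)  # labeled part of x_out is M = 0
    return loss_in + loss_out


# Toy 6x6 "images" filled with constants so the mixing is easy to inspect
shape = (6, 6)
x_l_i, x_l_j, x_u_p, x_u_q = (np.full(shape, v, dtype=np.float32) for v in (1, 2, 3, 4))
y_l_i, y_l_j = np.zeros(shape, int), np.ones(shape, int)
yhat_u_p, yhat_u_q = np.zeros(shape, int), np.ones(shape, int)
x_in, y_in, x_out, y_out, m = bidirectional_copy_paste(
    x_l_i, y_l_i, x_l_j, y_l_j, x_u_p, yhat_u_p, x_u_q, yhat_u_q, beta=0.5)
print(x_in)  # 2s around the border, a 3x3 block of 3s in the middle
```

Because both mixed images are built from the same mask, every training sample contains a ground-truth region and a pseudo-labeled region, and `alpha` alone decides how much the pseudo-labeled part counts.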

Why BCP Outperforms Other Methods

1. Reduces Empirical Distribution Gap Between Labeled & Unlabeled Data

By mixing labeled and unlabeled images in both directions, BCP ensures that the model learns common semantics from both domains. This reduces the distribution mismatch and improves consistency in feature representation.

2. Enhances Generalization Through Diverse Augmentations

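Unlike traditional CutMix or MixUp strategies, which in semi-supervised segmentation typically mix unlabeled images with each other and can degrade performance when the pseudo-labels supervising the mixed regions are of low quality, BCP always pairs an unlabeled image with a labeled one. Part of every training sample therefore carries ground-truth supervision, which helps the model preserve shapes and detect boundaries.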

3. Symmetric Learning for Both Labeled & Unlabeled Samples

Many semi-supervised methods treat labeled and unlabeled data differently, sometimes even separately, leading to knowledge loss. BCP instead enforces symmetric learning: through bidirectional copy-pasting, labeled and unlabeled images each serve as both foreground and background, so both types of data shape the same training signal.

4. Minimal Overhead, Maximum Impact

One of the most appealing aspects of BCP is that it doesn’t introduce new parameters or increase computational cost. It simply enhances the training pipeline using smart data augmentation.

Results That Speak Volumes

Performance Comparison Across Medical Datasets

| Method | Dataset | Labeled | Dice (%) ↑ | Jaccard (%) ↑ | 95HD (voxel) ↓ | ASD (voxel) ↓ |
|---|---|---|---|---|---|---|
| V-Net | LA | 5% | 52.55 | 39.60 | 47.05 | 9.87 |
| MC-Net | LA | 5% | 83.59 | 72.36 | 14.07 | 2.70 |
| BCP | LA | 5% | 88.02 | 78.72 | 7.90 | 2.15 |

Result Highlights:

- On the ACDC dataset with 5% labeled data, BCP achieves a staggering 21.76-percentage-point improvement in Dice score over the second-best method.

- On Pancreas-NIH, BCP shows a +3.24% Dice gain and improved robustness in handling complex anatomical structures.

Key Advantages of BCP

✅ High Accuracy with Minimal Labeled Data

Even with just 5% labeled data, BCP delivers near-full-supervision performance.

✅ Robustness to Shape Variability

By enforcing consistent learning across mixed samples, BCP excels at preserving anatomical shapes and boundaries.

✅ No Additional Parameters or Complexity

BCP works with standard segmentation backbones such as U-Net or V-Net without increasing model size or complexity.

✅ Effective Across Multiple Modalities

Whether dealing with cardiac MRIs or abdominal CT scans, BCP adapts well to different imaging modalities.

Limitations and Future Directions

While BCP represents a significant advancement, it is not without limitations:

🚫 Struggles with Low-Contrast Regions

Like many segmentation methods, BCP has difficulty distinguishing subtle tissue boundaries in low-contrast areas.

🚫 Dependence on Pseudo-Label Quality

Although BCP mitigates pseudo-label noise through bidirectional training, errors in pseudo-label generation can still propagate.

🚫 Need for Careful Hyperparameter Tuning

Parameters like mask size (β) and unlabeled weight (α) require tuning for optimal performance.
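One reason β needs care is that the pasted region's share of the image scales with β raised to the number of spatial dimensions. The hypothetical helper below assumes the crop side is `int(beta * dim)` along every axis and compares a 64×64×64 volume (the size used in this article's dummy training loop) with a 256×256 slice, an arbitrary 2D example.

```python
def pasted_fraction(shape, beta):
    """Fraction of voxels inside a crop whose side is int(beta * dim) along every axis."""
    inside, total = 1, 1
    for dim in shape:
        inside *= int(dim * beta)
        total *= dim
    return inside / total


beta = 2 / 3
print(f"3D 64^3 volume: {pasted_fraction((64, 64, 64), beta):.3f}")  # (42/64)^3
print(f"2D 256^2 slice: {pasted_fraction((256, 256), beta):.3f}")    # (170/256)^2
```

So β = 2/3 pastes well under a third of a 3D volume but closer to half of a 2D slice, which suggests the same β may not transfer unchanged between 2D and 3D settings.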

🔍 Future Work Could Include:

- Integration with uncertainty estimation modules to refine pseudo-label reliability.

- Extension to multi-task or domain adaptation settings.

- Incorporation of attention mechanisms to focus on critical anatomical regions.

How to Implement BCP: Practical Considerations

Implementation Steps

1. Data Preparation

   - Ensure your dataset includes both labeled and unlabeled volumes.

   - Normalize intensity values and apply standard preprocessing steps.

2. Model Architecture

   - Use a backbone like U-Net or V-Net.

   - Integrate the Mean Teacher framework for consistency regularization.

3. Mask Generation

   - Generate binary masks that are 0 inside the region to be pasted and 1 elsewhere.

   - Adjust β to control the size of the pasted (foreground) region.

4. Loss Balancing

   - Set α ≈ 0.5 to maintain a healthy balance between labeled and unlabeled contributions.

   - Monitor validation metrics to adjust weights dynamically if needed.

5. Training Pipeline

   - Pre-train the model on labeled data (BCP does this with copy-paste between labeled images) before introducing unlabeled samples.

   - Gradually increase the influence of unlabeled data during self-training.

6. Evaluation Metrics

   - Focus on Dice score, Jaccard index, 95th-percentile Hausdorff distance (95HD), and average surface distance (ASD) for a comprehensive evaluation.
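A minimal sketch of these four metrics for one binary mask, assuming NumPy and SciPy are available. Conventions differ between toolkits: here 95HD is the larger of the two directed 95th-percentile surface distances, and ASD is the mean distance from the predicted surface to the ground-truth surface (some toolkits average both directions instead). Distances are in voxels unless a `spacing` is given. Treat it as an illustration rather than a replacement for an established evaluation library.

```python
import numpy as np
from scipy import ndimage


def dice_jaccard(pred, gt):
    """Overlap metrics for two binary masks of the same shape."""
    pred, gt = pred.astype(bool), gt.astype(bool)
    inter = np.logical_and(pred, gt).sum()
    dice = 2.0 * inter / (pred.sum() + gt.sum())
    jaccard = inter / np.logical_or(pred, gt).sum()
    return float(dice), float(jaccard)


def _surface(mask):
    """Boundary voxels: the mask minus its binary erosion."""
    return mask & ~ndimage.binary_erosion(mask)


def hd95_asd(pred, gt, spacing=None):
    """95th-percentile Hausdorff distance and average surface distance.

    Assumes both masks are non-empty. `spacing` is the voxel size per axis.
    """
    pred, gt = pred.astype(bool), gt.astype(bool)
    surf_pred, surf_gt = _surface(pred), _surface(gt)
    # Distance from every voxel to the nearest surface voxel of the other mask
    dist_to_gt = ndimage.distance_transform_edt(~surf_gt, sampling=spacing)
    dist_to_pred = ndimage.distance_transform_edt(~surf_pred, sampling=spacing)
    d_pred_to_gt = dist_to_gt[surf_pred]
    d_gt_to_pred = dist_to_pred[surf_gt]
    hd95 = max(np.percentile(d_pred_to_gt, 95), np.percentile(d_gt_to_pred, 95))
    asd = d_pred_to_gt.mean()
    return float(hd95), float(asd)


# Two 4x4x4 cubes offset by 2 voxels along the last axis
pred = np.zeros((10, 10, 10), dtype=bool)
gt = np.zeros((10, 10, 10), dtype=bool)
pred[2:6, 2:6, 2:6] = True
gt[2:6, 2:6, 4:8] = True
print(dice_jaccard(pred, gt))  # dice 0.5, jaccard ~0.333
print(hd95_asd(pred, gt))      # hd95 2.0, asd ~0.857
```

Dice and Jaccard reward volumetric overlap, while 95HD and ASD penalize boundary errors, which is why segmentation papers usually report both kinds of metric.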

If you’re interested in medical image segmentation using deep learning, you may also find this article helpful: 5 Revolutionary Advancements in Medical Image Segmentation: How SDCL Outperforms Existing Methods (With Math Explained)

Real-World Applications and Industry Relevance

Use Cases Where BCP Can Make an Impact

🩺 Cardiac MRI Analysis

Accurate segmentation of ventricles and myocardium is essential for diagnosing heart disease.

🩺 Tumor Detection in CT Scans

Improved delineation of tumors and surrounding organs supports precise radiation therapy planning.

🩺 Organ-at-Risk Identification

Critical in oncology for minimizing collateral damage during radiotherapy.

🩺 Population Health Studies

Enables scalable segmentation of large biobank datasets with minimal expert annotation.

Conclusion: The Future of Medical Image Segmentation is Here

The Bidirectional Copy-Paste (BCP) method represents a significant leap forward in semi-supervised medical image segmentation. By addressing the empirical distribution mismatch between labeled and unlabeled data, BCP enables high-performance segmentation with minimal human annotation — a game-changer in clinical environments where labeled data is scarce.

With its 21-percentage-point Dice improvement on the ACDC dataset, robustness to shape variations, and minimal overhead, BCP sets a new benchmark for semi-supervised learning in medical imaging.

If you’re working in healthcare AI, radiology, or biomedical engineering, implementing BCP could dramatically reduce your annotation costs while boosting segmentation accuracy.

Call to Action: Ready to Improve Your Medical Image Segmentation Pipeline?

🚀 Download the BCP implementation code from GitHub: https://github.com/DeepMed-Lab-ECNU/BCP

📘 Read the full paper here: arXiv:2305.00673v1

💬 Have questions or want to collaborate on implementing BCP in your project?

👉 Leave a comment below or reach out via our contact form. We’d love to hear from you!

Frequently Asked Questions (FAQ)

What is semi-supervised learning in medical imaging?

It’s a machine learning approach that combines a small amount of labeled data with a large volume of unlabeled data to train more accurate models without requiring full manual annotation.

What is the Dice score in segmentation?

The Dice score measures the overlap between predicted and ground truth segmentations. A higher score indicates better alignment.
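For binary masks with predicted region $P$ and ground-truth region $G$, Dice is defined as below; for a single case, the Jaccard index reported alongside it in the results table is a monotone function of Dice.

$$\text{Dice}(P, G) = \frac{2\,|P \cap G|}{|P| + |G|}, \qquad \text{Jaccard}(P, G) = \frac{|P \cap G|}{|P \cup G|} = \frac{\text{Dice}}{2 - \text{Dice}}$$

For example, with $|P| = 100$, $|G| = 80$ and $|P \cap G| = 60$ voxels (made-up numbers), Dice $= 120/180 \approx 0.667$ and Jaccard $= 60/120 = 0.5$, which matches $0.667 / (2 - 0.667)$. The identity holds per case; dataset-level averages of Dice and Jaccard, like those in the table above, need not satisfy it exactly.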

Can BCP be used with any neural network architecture?

Yes, BCP is architecture-agnostic and can be integrated with U-Net, V-Net, or any other segmentation backbone.

Do I need special hardware to run BCP?

No. BCP runs efficiently on standard GPUs such as the NVIDIA GeForce RTX 3090 and does not require specialized hardware.

Where can I find datasets to test BCP?

Popular datasets include LA (Left Atrium), ACDC (Automatic Cardiac Diagnosis Challenge), and Pancreas-NIH.

Final Thoughts

The future of medical imaging lies in intelligent, efficient, and scalable solutions. With Bidirectional Copy-Paste, we’re one step closer to making that future a reality. Whether you’re a researcher, clinician, or developer, now is the time to explore how BCP can transform your workflow.

Don’t miss out on this opportunity to elevate your segmentation capabilities — try BCP today and see the difference for yourself!

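Below is a PyTorch implementation outline of the BCP method using the Mean Teacher framework with a 3D U-Net backbone (a V-Net could be substituted). It is a simplified sketch that trains on random tensors, not the authors' official implementation; see the GitHub repository linked above for that.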

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


# --- 3D U-Net backbone ---

class DoubleConv(nn.Module):
    """(convolution => [BN] => ReLU) * 2"""

    def __init__(self, in_channels, out_channels, mid_channels=None):
        super().__init__()
        if not mid_channels:
            mid_channels = out_channels
        self.double_conv = nn.Sequential(
            nn.Conv3d(in_channels, mid_channels, kernel_size=3, padding=1, bias=False),
            nn.BatchNorm3d(mid_channels),
            nn.ReLU(inplace=True),
            nn.Conv3d(mid_channels, out_channels, kernel_size=3, padding=1, bias=False),
            nn.BatchNorm3d(out_channels),
            nn.ReLU(inplace=True),
        )

    def forward(self, x):
        return self.double_conv(x)


class Down(nn.Module):
    """Downscaling with maxpool then double conv"""

    def __init__(self, in_channels, out_channels):
        super().__init__()
        self.maxpool_conv = nn.Sequential(
            nn.MaxPool3d(2),
            DoubleConv(in_channels, out_channels),
        )

    def forward(self, x):
        return self.maxpool_conv(x)


class Up(nn.Module):
    """Upscaling then double conv"""

    def __init__(self, in_channels, out_channels, trilinear=True):
        super().__init__()
        # If trilinear, use plain convolutions to reduce the number of channels
        if trilinear:
            self.up = nn.Upsample(scale_factor=2, mode='trilinear', align_corners=True)
            self.conv = DoubleConv(in_channels, out_channels, in_channels // 2)
        else:
            self.up = nn.ConvTranspose3d(in_channels, in_channels // 2, kernel_size=2, stride=2)
            self.conv = DoubleConv(in_channels, out_channels)

    def forward(self, x1, x2):
        x1 = self.up(x1)
        # Tensors are N x C x D x H x W; pad x1 to the size of the skip connection x2
        diffZ = x2.size(2) - x1.size(2)
        diffY = x2.size(3) - x1.size(3)
        diffX = x2.size(4) - x1.size(4)
        x1 = F.pad(x1, [diffX // 2, diffX - diffX // 2,
                        diffY // 2, diffY - diffY // 2,
                        diffZ // 2, diffZ - diffZ // 2])
        x = torch.cat([x2, x1], dim=1)
        return self.conv(x)


class OutConv(nn.Module):
    """1x1x1 convolution mapping features to per-class logits"""

    def __init__(self, in_channels, out_channels):
        super().__init__()
        self.conv = nn.Conv3d(in_channels, out_channels, kernel_size=1)

    def forward(self, x):
        return self.conv(x)


class SegmentationNetwork(nn.Module):
    """A 3D U-Net: the original U-Net architecture adapted to volumetric input."""

    def __init__(self, in_channels, out_channels, n_channels=32, trilinear=True):
        super().__init__()
        self.in_channels = in_channels
        self.out_channels = out_channels
        self.n_channels = n_channels
        self.trilinear = trilinear

        self.inc = DoubleConv(in_channels, n_channels)
        self.down1 = Down(n_channels, n_channels * 2)
        self.down2 = Down(n_channels * 2, n_channels * 4)
        self.down3 = Down(n_channels * 4, n_channels * 8)
        factor = 2 if trilinear else 1
        self.down4 = Down(n_channels * 8, n_channels * 16 // factor)
        self.up1 = Up(n_channels * 16, n_channels * 8 // factor, trilinear)
        self.up2 = Up(n_channels * 8, n_channels * 4 // factor, trilinear)
        self.up3 = Up(n_channels * 4, n_channels * 2 // factor, trilinear)
        self.up4 = Up(n_channels * 2, n_channels, trilinear)
        self.outc = OutConv(n_channels, out_channels)

    def forward(self, x):
        # Encoder
        x1 = self.inc(x)
        x2 = self.down1(x1)
        x3 = self.down2(x2)
        x4 = self.down3(x3)
        x5 = self.down4(x4)
        # Decoder with skip connections
        x = self.up1(x5, x4)
        x = self.up2(x, x3)
        x = self.up3(x, x2)
        x = self.up4(x, x1)
        # Output layer (raw logits)
        return self.outc(x)


# --- BCP framework ---

class DiceLoss(nn.Module):
    """Soft Dice loss over the foreground classes, optionally restricted to a voxel mask."""

    def __init__(self, smooth=1.0):
        super().__init__()
        self.smooth = smooth

    def forward(self, logits, targets, mask=None):
        probs = F.softmax(logits, dim=1)[:, 1:]  # drop the background channel
        targets = targets[:, 1:]
        if mask is None:
            mask = torch.ones_like(probs[:, :1])
        # mask has shape (B, 1, D, H, W) and broadcasts over the class channels
        intersection = (probs * targets * mask).sum()
        denominator = (probs * mask).sum() + (targets * mask).sum()
        return 1 - (2.0 * intersection + self.smooth) / (denominator + self.smooth)


@torch.no_grad()
def update_ema_variables(model, ema_model, decay, global_step):
    """
    Update the Teacher's weights as an Exponential Moving Average (EMA)
    of the Student's weights. The decay ramps up from 0 early in training.
    """
    decay = min(1 - 1 / (global_step + 1), decay)
    for ema_param, param in zip(ema_model.parameters(), model.parameters()):
        ema_param.mul_(decay).add_(param, alpha=1 - decay)


class BCPTrainer:
    """Handles the Bidirectional Copy-Paste training process (self-training stage)."""

    def __init__(self, student_model, teacher_model, optimizer, config):
        self.student_model = student_model
        self.teacher_model = teacher_model
        self.optimizer = optimizer
        self.config = config
        self.ce_loss = nn.CrossEntropyLoss(reduction='none')
        self.dice_loss = DiceLoss()
        self.global_step = 0  # counts optimizer steps for the EMA ramp-up

    def generate_bcp_mask(self, shape, beta, device):
        """Zero-centered mask M: 0 inside a central crop of relative size beta, 1 elsewhere."""
        B, C, W, H, L = shape
        mask = torch.ones(B, 1, W, H, L, device=device)
        w_start = int((W - W * beta) / 2)
        h_start = int((H - H * beta) / 2)
        l_start = int((L - L * beta) / 2)
        w_end = w_start + int(W * beta)
        h_end = h_start + int(H * beta)
        l_end = l_start + int(L * beta)
        mask[:, :, w_start:w_end, h_start:h_end, l_start:l_end] = 0
        return mask

    def train_step(self, labeled_batch, unlabeled_batch):
        """Performs a single training step using the BCP methodology."""
        self.student_model.train()
        self.teacher_model.train()
        labeled_images, labeled_gt = labeled_batch  # gt: (B, 1, D, H, W) integer labels
        unlabeled_images = unlabeled_batch
        alpha = self.config['alpha']
        num_classes = self.config['num_classes']

        # Teacher pseudo-labels for the unlabeled images (no gradients)
        with torch.no_grad():
            unlabeled_preds_teacher = self.teacher_model(unlabeled_images)
            pseudo_labels = torch.argmax(unlabeled_preds_teacher, dim=1, keepdim=True)

        # Split each batch in two: labeled (i, j) and unlabeled (p, q)
        labeled_images_i, labeled_images_j = torch.chunk(labeled_images, 2)
        labeled_gt_i, labeled_gt_j = torch.chunk(labeled_gt, 2)
        unlabeled_images_p, unlabeled_images_q = torch.chunk(unlabeled_images, 2)
        pseudo_labels_p, pseudo_labels_q = torch.chunk(pseudo_labels, 2)

        mask = self.generate_bcp_mask(labeled_images_i.shape, self.config['beta'],
                                      labeled_images.device)
        keep = mask.bool()  # True where the voxel comes from the background image

        # Bidirectional copy-paste of the images and of the supervisory signals
        x_in = labeled_images_j * mask + unlabeled_images_p * (1 - mask)
        x_out = unlabeled_images_q * mask + labeled_images_i * (1 - mask)
        y_in = torch.where(keep, labeled_gt_j, pseudo_labels_p).squeeze(1).long()
        y_out = torch.where(keep, pseudo_labels_q, labeled_gt_i).squeeze(1).long()

        preds_in = self.student_model(x_in)
        preds_out = self.student_model(x_out)
        y_in_one_hot = F.one_hot(y_in, num_classes).permute(0, 4, 1, 2, 3).float()
        y_out_one_hot = F.one_hot(y_out, num_classes).permute(0, 4, 1, 2, 3).float()

        m = mask.squeeze(1)  # (B, D, H, W), matches the per-voxel CE map
        # L_in: region from labeled image j (M = 1) weighted 1, unlabeled region weighted alpha
        loss_in = (self.ce_loss(preds_in, y_in) * (m + alpha * (1 - m))).mean() \
            + self.dice_loss(preds_in, y_in_one_hot, mask) \
            + alpha * self.dice_loss(preds_in, y_in_one_hot, 1 - mask)
        # L_out: region from labeled image i (M = 0) weighted 1, unlabeled region weighted alpha
        loss_out = (self.ce_loss(preds_out, y_out) * ((1 - m) + alpha * m)).mean() \
            + self.dice_loss(preds_out, y_out_one_hot, 1 - mask) \
            + alpha * self.dice_loss(preds_out, y_out_one_hot, mask)
        total_loss = loss_in + loss_out

        self.optimizer.zero_grad()
        total_loss.backward()
        self.optimizer.step()

        # The Teacher tracks the Student through EMA after every optimizer step
        self.global_step += 1
        update_ema_variables(self.student_model, self.teacher_model,
                             self.config['ema_decay'], self.global_step)
        return total_loss.item()


# --- Example usage with dummy data ---
if __name__ == '__main__':
    config = {
        'num_classes': 4,
        'in_channels': 1,
        'ema_decay': 0.99,
        'alpha': 0.5,
        'beta': 2 / 3,
        'learning_rate': 0.01,
        'epochs': 10,  # reduced for a quick demo
    }
    device = 'cuda' if torch.cuda.is_available() else 'cpu'

    # Student and Teacher share the same 3D U-Net architecture and initial weights
    student_net = SegmentationNetwork(config['in_channels'], config['num_classes']).to(device)
    teacher_net = SegmentationNetwork(config['in_channels'], config['num_classes']).to(device)
    teacher_net.load_state_dict(student_net.state_dict())
    for param in teacher_net.parameters():
        param.requires_grad_(False)  # the Teacher is updated only through EMA

    optimizer = torch.optim.SGD(student_net.parameters(), lr=config['learning_rate'],
                                momentum=0.9, weight_decay=0.0001)
    bcp_trainer = BCPTrainer(student_net, teacher_net, optimizer, config)
    print("BCP Trainer initialized with 3D U-Net. Starting dummy training loop...")

    dummy_batch_size = 2  # must be even: each batch is split into two halves
    for epoch in range(config['epochs']):
        # Random tensors stand in for real volumes (one training step per "epoch")
        shape = (dummy_batch_size, config['in_channels'], 64, 64, 64)
        dummy_labeled_images = torch.randn(shape, device=device)
        dummy_labeled_gt = torch.randint(0, config['num_classes'],
                                         (dummy_batch_size, 1, 64, 64, 64), device=device)
        dummy_unlabeled_images = torch.randn(shape, device=device)

        loss = bcp_trainer.train_step((dummy_labeled_images, dummy_labeled_gt),
                                      dummy_unlabeled_images)
        print(f"Epoch [{epoch + 1}/{config['epochs']}], Loss: {loss:.4f}")

    print("Dummy training finished.")
```