7 Astonishing Ways DIOR-ViT Transforms Cancer Grading (Avoiding Common Pitfalls)

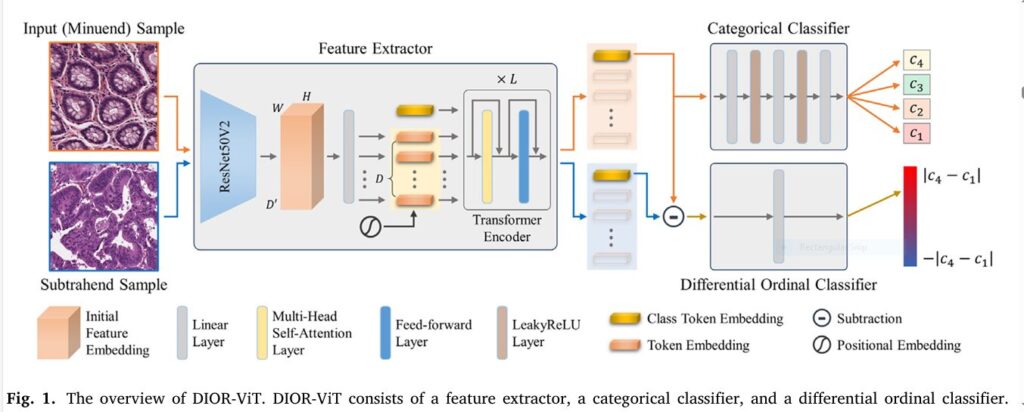

Cancer grading in pathology images is both an art and a science, and it is prone to subjectivity, inter-observer variability, and technical roadblocks. Enter DIOR-ViT, a differential ordinal learning Vision Transformer built to deliver robust, high-accuracy cancer classification across multiple tissue types. In this deep-dive guide, we unpack the seven innovations behind […]
