Introduction: The Urgent Need for Accurate Skin Cancer Detection

Skin cancer is one of the most prevalent and potentially deadly diseases globally, with early detection being crucial for successful treatment. According to the American Cancer Society, melanoma alone accounts for over 7,000 deaths annually in the United States. Traditional diagnostic methods rely heavily on visual inspection and biopsy, which can be time-consuming, costly, and prone to human error.

Enter DeepMetaForge, a deep-learning framework introduced in a 2023 research paper titled “DeepMetaForge: A Deep Vision-Transformer Metadata-Fusion Network for Automatic Skin Lesion Classification”. The model combines Vision Transformers (ViT) with metadata fusion to improve the accuracy of skin lesion classification.

In this article, we’ll explore how DeepMetaForge works, its performance metrics, and why it represents a positive leap forward in medical AI, while also addressing the challenges and limitations of integrating metadata in deep learning models.

What Is DeepMetaForge?

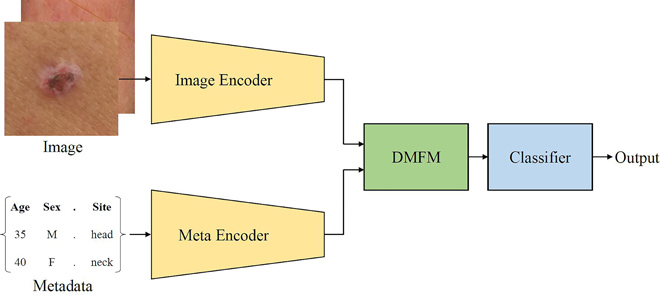

DeepMetaForge is a deep-learning framework designed for automatic skin lesion classification using both dermoscopic images and patient metadata. It uses the BEiT (Bidirectional Encoder representation from Image Transformers) architecture as the backbone for image encoding and introduces a novel Deep Metadata Fusion Module (DMFM) to integrate metadata into the image classification pipeline.

Key Components of DeepMetaForge:

- Image Encoder (BEiT):
  - A Vision Transformer pre-trained using masked image modeling (MIM).
  - Extracts high-level visual features from skin lesion images.
- Metadata Encoder:
  - Uses a Convolutional Neural Network (CNN) to encode patient metadata such as age, gender, anatomical location, and lesion characteristics.
- Deep Metadata Fusion Module (DMFM):
  - Merges visual features and metadata embeddings.
  - Applies a compression-decompression mechanism to simulate how humans interpret images with accompanying metadata.
- Classification Layer:
  - Outputs a binary classification (benign vs. malignant) based on fused features.

How Does DeepMetaForge Work?

DeepMetaForge mimics the way dermatologists interpret skin lesions by combining visual and contextual cues simultaneously rather than sequentially. Here’s a breakdown of its workflow:

1. Image Encoding with BEiT

The BEiT backbone tokenizes the input image into patches and applies self-attention mechanisms to learn spatial relationships between image regions.

$$\text{Image Embedding} = \text{BEiT}(I)$$

Where I is the input dermoscopic image.
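
To make the tokenization step concrete, here is a small sketch of how many patch tokens a square image produces. The patch size of 16 is an assumption taken from the common `patch16` BEiT checkpoints, and `patch_grid` is a hypothetical helper name, not part of any library.

```python
def patch_grid(image_size: int, patch_size: int) -> tuple:
    """Return (patches_per_side, total_patches) for a square image."""
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    per_side = image_size // patch_size
    return per_side, per_side * per_side


# Assumed patch size of 16 pixels; 224 and 384 are the input sizes
# discussed in this article.
for size in (224, 384):
    per_side, total = patch_grid(size, 16)
    print(f"{size}x{size} image -> {per_side}x{per_side} grid = {total} patches")
```

Under these assumptions a 224×224 image becomes 196 tokens and a 384×384 image becomes 576, which is one reason larger inputs cost more memory and time in self-attention.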

2. Metadata Encoding

Metadata such as patient age, gender, and lesion location are encoded using a two-layer CNN:

$$\text{Metadata Embedding} = \text{CNN}(M)$$

Where M is the metadata vector.
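
Before the CNN can see it, the metadata has to become a numeric vector M. The sketch below shows one plausible way to build that vector; the field names, category lists, and the age scaling are illustrative assumptions, not the preprocessing used in the paper. It also adds a missing-value flag, which is one simple way to keep incomplete records usable.

```python
# Hypothetical category lists; real datasets define their own values.
GENDERS = ["female", "male"]
LOCATIONS = ["head/neck", "torso", "upper extremity", "lower extremity"]


def one_hot(value, categories):
    """One-hot encode value; unknown or missing values give all zeros."""
    return [1.0 if value == c else 0.0 for c in categories]


def encode_metadata(record: dict) -> list:
    """Turn one patient record into a flat numeric metadata vector M."""
    age = record.get("age")
    age_missing = 1.0 if age is None else 0.0
    # Clamp to [0, 100] and scale to [0, 1]; an assumed normalization.
    age_scaled = 0.0 if age is None else min(max(age, 0), 100) / 100.0
    return (
        [age_scaled, age_missing]
        + one_hot(record.get("gender"), GENDERS)
        + one_hot(record.get("location"), LOCATIONS)
    )


vec = encode_metadata({"age": 54, "gender": "female", "location": "torso"})
print(len(vec), vec)
```

A record with no age still yields a vector of the same length, with the missing flag set, so the downstream encoder always receives a fixed-size input.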

3. Deep Metadata Fusion Module (DMFM)

DMFM compresses the combined feature maps and then decompresses them to refine the fused representation:

$$z = \mu_2\left( \mu_1(x) \right)$$

$$\hat{z} = z \ast x$$

Where μ represents a combination of dropout and ReLU activation, and ∗ denotes concatenation.

This fusion mechanism enhances the model’s ability to weigh metadata alongside visual features effectively.
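
The compress, decompress, and concatenate steps can be traced with plain Python lists. This is a toy sketch under stated assumptions: the sizes (C = 8, compression ratio 4) and the random weights are made up, dropout is omitted as it would be at inference time, and ReLU is applied only after compression, which is one possible reading of the equations above.

```python
import random


def linear(x, weights, bias):
    """Dense layer: one output per weight row."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(x):
    return [max(0.0, v) for v in x]


def init_linear(in_dim, out_dim, rng):
    """Small random weights and zero bias (illustrative only)."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(in_dim)] for _ in range(out_dim)]
    return weights, [0.0] * out_dim


def dmfm_forward(img_feat, meta_feat, params):
    x = img_feat + meta_feat                    # concatenate features -> length 2C
    z = relu(linear(x, *params["compress"]))    # compress -> length C // gamma
    z = linear(z, *params["decompress"])        # decompress -> length 2C
    return z + x                                # z * x (concatenation) -> length 4C


rng = random.Random(0)
C, gamma = 8, 4  # toy sizes, not the paper's settings
params = {
    "compress": init_linear(2 * C, C // gamma, rng),
    "decompress": init_linear(C // gamma, 2 * C, rng),
}
img_feat = [rng.random() for _ in range(C)]
meta_feat = [rng.random() for _ in range(C)]
fused = dmfm_forward(img_feat, meta_feat, params)
print(len(fused))
```

The second half of `fused` is the untouched input `x`, so the classifier sees both the refined ("forged") representation and the original features.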

Why Vision Transformers (ViTs) Are a Game Changer

Traditional Convolutional Neural Networks (CNNs) have been the backbone of image classification for years. However, Vision Transformers (ViTs) offer several advantages:

- Global Attention Mechanism: ViTs can capture long-range dependencies in images, making them ideal for analyzing complex skin lesions.

- Scalability: ViTs can be easily scaled up or down depending on available computational resources.

- Pre-training Efficiency: Models like BEiT are pre-trained on large-scale datasets, enabling them to generalize well even with limited medical imaging data.

In DeepMetaForge, the BEiT backbone significantly outperforms traditional CNNs like ResNet and EfficientNet, especially when combined with metadata.
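
The global attention idea can be illustrated with a stripped-down scaled dot-product self-attention over a handful of patch vectors. This is a simplified sketch, not BEiT itself: it uses identity query/key/value projections, a single head, and made-up 2-D patch embeddings.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention with identity Q/K/V projections."""
    d = len(tokens[0])
    outputs, weights = [], []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
        w = softmax(scores)
        weights.append(w)
        # Each output is a weighted mix of ALL tokens, near or far.
        outputs.append([sum(wi * v[j] for wi, v in zip(w, tokens)) for j in range(d)])
    return outputs, weights


# Four toy patch embeddings; patches 0, 2 and 3 look alike, patch 1 differs.
patches = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.1], [0.9, 0.0]]
_, attn = self_attention(patches)
print([round(a, 3) for a in attn[0]])
```

Patch 0 assigns more weight to the similar patches 2 and 3 than to its immediate neighbor, patch 1. Position in the sequence does not limit which patches can influence each other, which is the long-range behavior a convolution with a small kernel lacks.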

Performance Evaluation: How Well Does DeepMetaForge Work?

The paper evaluates DeepMetaForge on four public skin lesion datasets:

| DATASET | IMAGE SOURCE | NUMBER OF IMAGES | METADATA |
|---|---|---|---|
| ISIC 2020 | Dermoscopy | ~33,000 | Yes |
| PAD-UFES-20 | Smartphone | ~5,000 | Yes |
| PH2 | Dermoscopy | 200 | Yes |
| SKINL2 | Dermoscopy | ~1,000 | Yes |

Key Performance Metrics:

- Macro-Average F1 Score: 87.1%

- Accuracy: ~92%

- MCC (Matthews Correlation Coefficient): 0.81

These results show that DeepMetaForge outperforms existing methods by a significant margin, particularly in identifying malignant lesions.
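
For readers less familiar with these metrics, the sketch below computes macro-average F1, accuracy, and MCC from a binary confusion matrix. The counts are invented for illustration and are not results from the paper.

```python
import math


def binary_metrics(tp, fp, fn, tn):
    """Macro-F1, accuracy and MCC from binary confusion-matrix counts."""
    def f1(tp_, fp_, fn_):
        denom = 2 * tp_ + fp_ + fn_
        return 2 * tp_ / denom if denom else 0.0

    f1_malignant = f1(tp, fp, fn)
    f1_benign = f1(tn, fn, fp)  # same formula with the classes swapped
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / mcc_den if mcc_den else 0.0
    return {
        "macro_f1": (f1_malignant + f1_benign) / 2,
        "accuracy": accuracy,
        "mcc": mcc,
    }


# Illustrative counts: 50 malignant and 150 benign lesions.
model_scores = binary_metrics(tp=40, fp=10, fn=10, tn=140)
# A trivial classifier that labels every lesion benign.
always_benign = binary_metrics(tp=0, fp=0, fn=50, tn=150)
print(model_scores)
print(always_benign)
```

The always-benign baseline still reaches 75% accuracy on this toy data, while its macro-F1 drops to about 0.43 and its MCC is 0. That gap is why macro-F1 and MCC are more informative than accuracy on imbalanced lesion datasets.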

Ablation Studies: What Makes DeepMetaForge Tick?

The authors conducted ablation studies to understand the impact of each component:

| MODULE | F1-SCORE | IMPROVEMENT |
|---|---|---|
| No Metadata | 72.3% | n/a |
| Metadata Only | 68.5% | n/a |
| DMFM + BEiT | 87.1% | +19.39% |

These results indicate that metadata fusion substantially improves classification performance, as measured by F1 score.

The Role of Metadata in Skin Lesion Classification

Metadata includes patient-specific information such as:

- Age

- Gender

- Anatomical location of the lesion

- Medical history

- Dermoscopic features (e.g., asymmetry, border irregularity)

Why Metadata Matters:

- Age and Gender: Certain skin cancers are more prevalent in specific demographics.

- Lesion Location: Melanomas are more common on sun-exposed areas.

- Medical History: Family history of cancer can increase risk.

By integrating metadata, DeepMetaForge can contextualize visual features, leading to more accurate and interpretable predictions.

Challenges and Limitations of Metadata Integration

Despite its success, DeepMetaForge faces several challenges:

1. Data Imbalance:
   - Most datasets are skewed toward benign lesions.
   - Requires data augmentation and class weighting to mitigate bias.
2. Missing or Incomplete Metadata:
   - Dummy metadata experiments showed a 41.2% drop in performance.
   - Highlights the need for robust handling of missing data.
3. Generalization Across Datasets:
   - Performance varied across datasets (e.g., PAD-UFES-20 saw only a 1.51% improvement).
   - Suggests that metadata relevance and image quality impact model performance.
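
As an illustration of the class weighting mentioned under data imbalance, the sketch below computes inverse-frequency class weights and a positive-class weight from a label list. The 90/10 split is made up, and the "balanced" formula n / (k × count) is a common heuristic rather than the weighting reported in the paper.

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * c) for cls, c in counts.items()}


def positive_class_weight(labels, positive=1):
    """Ratio of negatives to positives, usable as a loss weight on positives."""
    n_pos = sum(1 for y in labels if y == positive)
    if n_pos == 0:
        raise ValueError("no positive samples in labels")
    return (len(labels) - n_pos) / n_pos


# Toy label list: 90 benign (0) and 10 malignant (1) lesions.
labels = [0] * 90 + [1] * 10
weights = inverse_frequency_weights(labels)
pos_weight = positive_class_weight(labels)
print(weights, pos_weight)
```

With these toy labels each malignant sample counts nine times as much as a benign one. In PyTorch, a ratio like this is typically passed as the `pos_weight` argument of `nn.BCEWithLogitsLoss`.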

Scalability: Can DeepMetaForge Work on Low-Resource Devices?

One of the most promising aspects of DeepMetaForge is its scalability. The authors tested different configurations of the BEiT backbone:

| MODEL | INPUT SIZE | F1-SCORE | MEMORY USAGE | PREDICTION TIME |
|---|---|---|---|---|
| BEiT-Base-224 | 224×224 | 87.1% | ~2GB | 4.1 ms |
| BEiT-Base-384 | 384×384 | 88.9% | ~2.5GB | 12.3 ms |
| BEiT-Large-224 | 224×224 | 89.3% | ~3GB | 12.5 ms |

This scalability makes DeepMetaForge suitable for:

- Smartphone apps in rural areas

- Cloud-based diagnostic services

- Low-resource clinics in developing countries

Comparison with State-of-the-Art Models

DeepMetaForge was benchmarked against other metadata-fusing models:

| METHOD | F1-SCORE | ACCURACY |
|---|---|---|
| Gessert et al. [12] | 65.6% | 78.4% |
| Ningrum et al. [17] | 49.8% | 62.1% |
| Jasil and Ulagamuthalvi [18] | 72.3% | 81.2% |
| DeepMetaForge | 87.1% | 92.0% |

These results show that DeepMetaForge outperforms the compared methods by at least 14.8 F1 points, particularly in malignant lesion detection.


Future Directions and Real-World Applications

Potential Applications:

- Telemedicine Platforms: Enable remote diagnosis using smartphone images and metadata.

- Primary Care Integration: Assist non-dermatologists in early skin cancer detection.

- Clinical Decision Support Systems (CDSS): Provide AI-powered second opinions.

Research Extensions:

- Multiclass Classification: Extend the model to identify specific types of skin cancer (e.g., melanoma, BCC, SCC).

- Explainable AI (XAI): Integrate Grad-CAM and SHAP to improve model interpretability.

- Federated Learning: Train models across multiple hospitals without sharing patient data.

Conclusion: A Positive Leap in Medical AI with Real-World Challenges

DeepMetaForge represents a major advancement in automatic skin lesion classification by integrating Vision Transformers with metadata fusion. With an 87.1% macro-average F1 score, it outperforms existing methods and demonstrates strong scalability for deployment in low-resource environments.

However, challenges remain, particularly around data imbalance, metadata completeness, and generalization across diverse datasets. Future work should focus on explainability, federated learning, and multiclass extensions to make the model even more robust and clinically relevant.

Call to Action: Join the AI Revolution in Dermatology

If you’re a healthcare provider, researcher, or tech enthusiast, now is the time to get involved in the AI-driven transformation of dermatology. Whether you’re looking to:

- Implement AI tools in your clinic

- Conduct research on medical imaging

- Develop scalable solutions for underserved populations

DeepMetaForge offers a proven framework that can be adapted and expanded to meet your needs.

👉 Explore the full paper: https://ieeexplore.ieee.org/document/10393003


Together, we can build a future where early skin cancer detection is fast, accurate, and accessible to all.

Frequently Asked Questions (FAQ)

1. What is DeepMetaForge?

DeepMetaForge is a deep-learning framework that classifies skin lesions using Vision Transformers and metadata fusion to improve diagnostic accuracy.

2. How accurate is DeepMetaForge?

It achieves a macro-average F1 score of 87.1% across four public datasets, outperforming existing methods by a large margin.

3. Can DeepMetaForge run on smartphones?

Yes, the BEiT-Base-224 model only requires ~2GB of memory and can process images in 4.1 milliseconds, making it suitable for mobile applications.

4. What datasets were used?

DeepMetaForge was evaluated on the ISIC 2020, PAD-UFES-20, PH2, and SKINL2 datasets.

5. What metadata is used?

Metadata includes age, gender, anatomical location, and dermoscopic features like asymmetry and color.

Below is a single-file, fully-annotated PyTorch implementation of the DeepMetaForge network described in the paper.

```python
"""
DeepMetaForge - minimal end-to-end implementation
Paper: https://ieeexplore.ieee.org/document/10393003
Author: Sirawich Vachmanus et al., IEEE Access 2023
"""
import torch
import torch.nn as nn
from transformers import BeitImageProcessor, BeitForImageClassification


# ------------------------------------------------------------------
# 1. Metadata Encoder
# ------------------------------------------------------------------
class MetadataEncoder(nn.Module):
    """
    Two 1-D CNN blocks:
        Conv1d -> BN -> ReLU
    Maps raw metadata (vector length M) → same channel dim as image tokens
    """
    def __init__(self, in_channels: int, out_channels: int):
        super().__init__()
        self.net = nn.Sequential(
            nn.Conv1d(in_channels, 256, kernel_size=3, padding=1),
            nn.BatchNorm1d(256),
            nn.ReLU(inplace=True),
            nn.Conv1d(256, out_channels, kernel_size=3, padding=1),
            nn.BatchNorm1d(out_channels),
            nn.ReLU(inplace=True)
        )

    def forward(self, x):
        # x: (B, M)  M = number of metadata fields
        x = x.unsqueeze(1)    # (B, 1, M)
        x = self.net(x)       # (B, C, M); kernel 3 with padding 1 keeps the length
        x = x.mean(dim=-1)    # global average → (B, C)
        return x


# ------------------------------------------------------------------
# 2. Deep Metadata Fusion Module (DMFM)
# ------------------------------------------------------------------
class DMFM(nn.Module):
    """
    Fusion by forging (compress → decompress) as described in Eq.(2-4).
    γ = compression ratio (8 in paper)
    """
    def __init__(self, feat_dim: int, gamma: int = 8, dropout: float = 0.4):
        super().__init__()
        compressed = feat_dim // gamma
        self.compress = nn.Sequential(
            nn.Linear(2 * feat_dim, compressed),
            nn.ReLU(inplace=True),
            nn.Dropout(dropout)
        )
        self.decompress = nn.Linear(compressed, 2 * feat_dim)

    def forward(self, img_feat, meta_feat):
        """
        img_feat:  (B, C) - pooled vision transformer features
        meta_feat: (B, C) - encoded metadata features
        returns:   (B, 4C)
        """
        x = torch.cat([img_feat, meta_feat], dim=1)   # (B, 2C)
        z = self.compress(x)                          # (B, C//γ)
        z = self.decompress(z)                        # (B, 2C)
        out = torch.cat([z, x], dim=1)                # skip + forged → (B, 4C)
        return out


# ------------------------------------------------------------------
# 3. Full DeepMetaForge Network
# ------------------------------------------------------------------
class DeepMetaForge(nn.Module):
    def __init__(self,
                 img_size: int = 224,      # informational; the checkpoint fixes the input size
                 num_metadata: int = 12,   # adapt to your dataset
                 gamma: int = 8):
        super().__init__()
        # Image encoder: BEiT-base (pre-trained; not frozen, so it is fine-tuned)
        self.image_encoder = BeitForImageClassification.from_pretrained(
            "microsoft/beit-base-patch16-224-pt22k-ft22k"
        ).beit  # take the base transformer only
        hidden_size = self.image_encoder.config.hidden_size  # 768

        # Metadata encoder
        self.meta_encoder = MetadataEncoder(in_channels=1, out_channels=hidden_size)

        # Fusion module
        self.dmfm = DMFM(hidden_size, gamma=gamma)

        # Classification head
        self.classifier = nn.Sequential(
            nn.Linear(4 * hidden_size, 512),
            nn.ReLU(inplace=True),
            nn.Linear(512, 1)  # binary logits
        )

    def forward(self, pixel_values, metadata):
        """
        pixel_values: (B, 3, 224, 224)
        metadata:     (B, num_metadata)
        returns:      logits (B, 1)
        """
        # 1. Image features
        outputs = self.image_encoder(pixel_values)
        img_feat = outputs.pooler_output          # (B, 768)

        # 2. Metadata features
        meta_feat = self.meta_encoder(metadata)   # (B, 768)

        # 3. Fusion
        fused = self.dmfm(img_feat, meta_feat)    # (B, 4*768)

        # 4. Classification
        logits = self.classifier(fused)           # (B, 1)
        return logits


# ------------------------------------------------------------------
# 4. Quick sanity check
# ------------------------------------------------------------------
if __name__ == "__main__":
    model = DeepMetaForge(num_metadata=12)
    proc = BeitImageProcessor.from_pretrained(
        "microsoft/beit-base-patch16-224-pt22k-ft22k"
    )
    dummy_img = torch.randn(2, 3, 224, 224)
    dummy_meta = torch.randn(2, 12)
    with torch.no_grad():
        out = model(dummy_img, dummy_meta)
    print("Output shape:", out.shape)  # should be (2, 1)
```

```python
# Minimal training loop; reuses dummy_img and dummy_meta from the sanity check above
import torch.optim as optim
from torch.utils.data import DataLoader, TensorDataset

# Example: wrap dummy data
train_ds = TensorDataset(dummy_img, dummy_meta, torch.randint(0, 2, (2, 1)).float())
train_dl = DataLoader(train_ds, batch_size=2)

model = DeepMetaForge(num_metadata=12)
optimizer = optim.SGD(model.parameters(), lr=1e-3, momentum=0.9)
criterion = nn.BCEWithLogitsLoss()

for epoch in range(3):
    for img, meta, label in train_dl:
        optimizer.zero_grad()
        logits = model(img, meta)
        loss = criterion(logits, label)
        loss.backward()
        optimizer.step()
    # Report the loss of the last batch in each epoch
    print(f"Epoch {epoch+1} | loss: {loss.item():.4f}")
```

References

- Vachmanus, S., Noraset, T., Piyanonpong, W., Rattananukrom, T., & Tuarob, S. (2023). DeepMetaForge: A Deep Vision-Transformer Metadata-Fusion Network for Automatic Skin Lesion Classification. IEEE Access.

- Hauser, K., Kurz, A., Haggenmüller, S., et al. (2022). Explainable artificial intelligence in skin cancer recognition: A systematic review. European Journal of Cancer.

- Gessert, N., Nielsen, M., Shaikh, M., et al. (2020). Skin lesion classification using ensembles of multi-resolution EfficientNets with metadata. MethodsX.