Medical imaging has long been a cornerstone of modern healthcare, enabling clinicians to diagnose, treat, and monitor a wide range of conditions. However, manually segmenting structures in medical images remains time-consuming and demands considerable expertise. Deep learning and foundation models such as the Segment Anything Model (SAM) make it increasingly realistic to automate this process. Yet their reliance on high-quality manual prompts and their weaker performance on domain-specific images have so far held back widespread adoption.

In this article, we explore Sam2Rad, a framework developed by researchers at the University of Alberta and McGill University. Designed specifically for medical image segmentation, Sam2Rad removes the need for manual prompts while delivering strong accuracy across the ultrasound datasets on which it was evaluated. Whether you’re a radiologist, researcher, or AI enthusiast, it offers a concrete look at how automated segmentation could reshape medical imaging workflows.

The Challenge of Medical Image Segmentation

Medical image segmentation—the process of identifying and delineating specific regions of interest (ROIs) within an image—is critical for tasks like tumor detection, fracture analysis, and organ mapping. Traditional approaches, such as UNet and Mask R-CNN, rely heavily on specialized networks trained for individual tasks. While effective, these methods require extensive labeled data and struggle to generalize across different imaging modalities.

Foundation models like SAM have shown promise in addressing these limitations by leveraging vast amounts of pre-training data. SAM’s ability to handle various segmentation prompts—points, bounding boxes, or masks—makes it highly versatile. However, its performance in medical imaging, particularly ultrasound, often falters due to:

- Domain Shift: Ultrasound images exhibit unique artifacts, such as speckle noise, which differ significantly from natural images.

- Prompt Dependency: SAM relies on high-quality manual prompts, which are not always feasible without expert input.

- Zero-Shot Limitations: Even fine-tuned variants like MedSAM fail to generalize effectively to challenging datasets like shoulder ultrasound.
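To see why prompt quality hinges on expert input, note that in many evaluations a "manual" box prompt is simulated by taking the tight bounding box around an expert's ground-truth mask. The sketch below assumes binary masks stored as lists of rows and boxes in the (x_min, y_min, x_max, y_max) ordering that SAM's box prompts use. `mask_to_box` and `jitter_box` are hypothetical helpers for illustration, not part of SAM or Sam2Rad; the jitter mimics an imprecise human-drawn box.

```python
import random
from typing import List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max), inclusive pixel indices


def mask_to_box(mask: List[List[int]]) -> Optional[Box]:
    """Return the tight bounding box around the nonzero pixels of a binary mask."""
    ys = [y for y, row in enumerate(mask) for v in row if v]
    xs = [x for row in mask for x, v in enumerate(row) if v]
    if not xs:
        return None  # empty mask: no box prompt can be derived
    return min(xs), min(ys), max(xs), max(ys)


def _clamp(v: int, lo: int, hi: int) -> int:
    return max(lo, min(v, hi))


def jitter_box(box: Box, max_shift: int, width: int, height: int, rng: random.Random) -> Box:
    """Shift each edge by up to max_shift pixels to mimic an imprecise manual prompt."""
    x0, y0, x1, y1 = (v + rng.randint(-max_shift, max_shift) for v in box)
    # Clamp every coordinate to the image, then sort so min <= max on each axis.
    x0, x1 = sorted((_clamp(x0, 0, width - 1), _clamp(x1, 0, width - 1)))
    y0, y1 = sorted((_clamp(y0, 0, height - 1), _clamp(y1, 0, height - 1)))
    return x0, y0, x1, y1


mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
print(mask_to_box(mask))  # (1, 1, 3, 2)
print(jitter_box((1, 1, 3, 2), max_shift=2, width=5, height=4, rng=random.Random(0)))
```

A box derived this way is only as good as the mask it came from, which is exactly the expert dependency that Sam2Rad's learned prompts aim to remove.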

To bridge these gaps, the research team behind Sam2Rad proposes a novel solution that combines prompt learning with SAM’s robust architecture.

Introducing Sam2Rad: The Future of Automated Segmentation

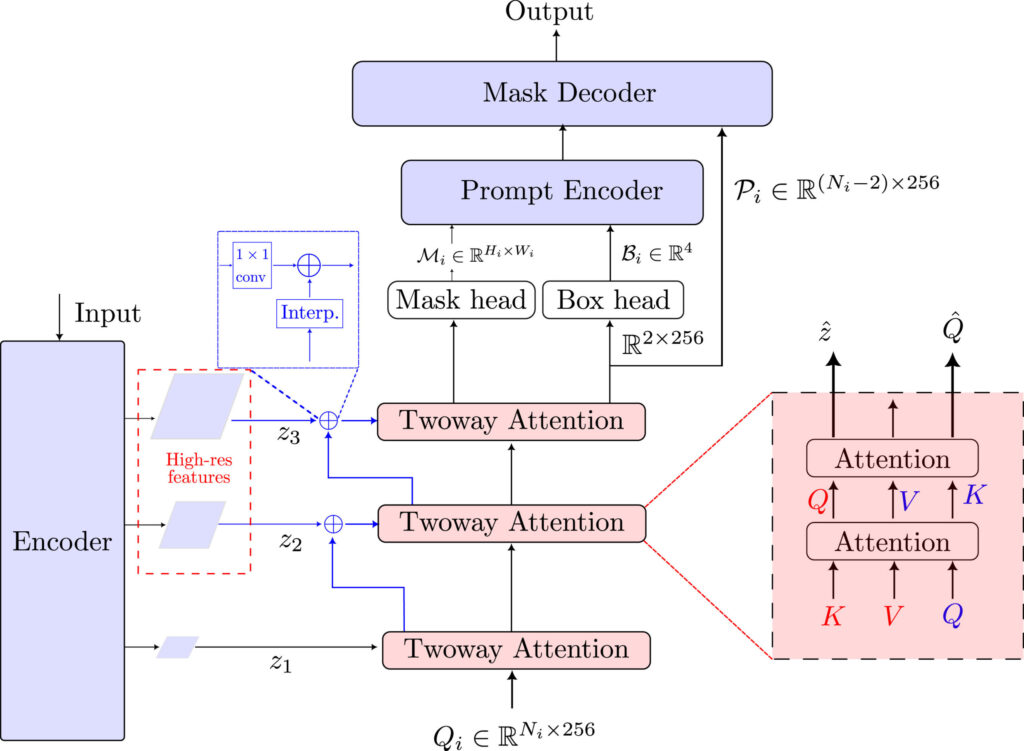

Sam2Rad, short for “Segment Anything Model 2 for Radiology,” introduces a lightweight yet powerful mechanism called the Prompt Predictor Network (PPN). This network generates high-quality prompts automatically, eliminating the need for manual intervention. Here’s how it works:

How Sam2Rad Works

- Feature Extraction: Sam2Rad leverages hierarchical feature maps extracted from SAM’s pretrained image encoder. These features capture multiscale representations, ranging from high-level semantic information to low-level spatial details.

- Cross-Attention Mechanism: Using a two-way cross-attention mechanism, PPN refines learnable object queries to predict bounding box coordinates, mask prompts, and high-dimensional embeddings.

- Prompt Integration: The predicted prompts are fed into SAM’s mask decoder, producing accurate segmentation masks without requiring human input.
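To make the data flow in these steps concrete, here is a toy, dependency-free sketch of one round of two-way cross-attention between a few learnable object queries and flattened image-feature tokens, followed by a box head. It assumes single-head scaled dot-product attention with no learned query/key/value projections, random stand-ins for learned weights, and a sigmoid head producing normalized (x_min, y_min, x_max, y_max) boxes. All names (`cross_attend`, `two_way_block`, `box_head`) are hypothetical; this is not the authors' implementation, which also predicts mask prompts and embeddings and uses learned projections and MLPs.

```python
import math
import random
from typing import List, Tuple

Vec = List[float]


def softmax(xs: Vec) -> Vec:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attend(queries: List[Vec], keys: List[Vec]) -> List[Vec]:
    """Scaled dot-product attention; the keys double as values (no projections)."""
    dim = len(queries[0])
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        out.append([sum(w * k[d] for w, k in zip(weights, keys)) for d in range(dim)])
    return out


def add(xs: List[Vec], ys: List[Vec]) -> List[Vec]:
    """Residual connection: elementwise sum of two token lists."""
    return [[a + b for a, b in zip(x, y)] for x, y in zip(xs, ys)]


def two_way_block(queries: List[Vec], image_tokens: List[Vec]) -> Tuple[List[Vec], List[Vec]]:
    """Queries attend to image tokens, then image tokens attend back to the updated queries."""
    queries = add(queries, cross_attend(queries, image_tokens))
    image_tokens = add(image_tokens, cross_attend(image_tokens, queries))
    return queries, image_tokens


def box_head(query: Vec, weights: List[Vec]) -> Vec:
    """Linear layer + sigmoid, then sort coordinates so the box is valid: [x0, y0, x1, y1] in [0, 1]."""
    raw = [1 / (1 + math.exp(-sum(w * q for w, q in zip(row, query)))) for row in weights]
    x0, x1 = sorted(raw[0::2])
    y0, y1 = sorted(raw[1::2])
    return [x0, y0, x1, y1]


rng = random.Random(0)
dim, num_tokens, num_queries = 8, 16, 2  # toy sizes for illustration only
image_tokens = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_tokens)]
queries = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_queries)]  # "learnable" queries
box_weights = [[rng.gauss(0, 0.1) for _ in range(dim)] for _ in range(4)]  # stand-in for a trained head

queries, image_tokens = two_way_block(queries, image_tokens)
boxes = [box_head(q, box_weights) for q in queries]
print(boxes)
```

The two directions matter: the queries gather evidence about where the object is, and the image tokens are updated with knowledge of what the queries are looking for, which is what a downstream mask decoder would consume.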

By freezing all SAM modules and training only the PPN, Sam2Rad ensures compatibility with SAM’s original architecture while maintaining its generalization capabilities. This parameter-efficient approach minimizes computational overhead and enhances performance even in low-data scenarios.
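Training only the PPN means handing the optimizer only the prompt predictor's parameters. In PyTorch this is commonly done by setting `requires_grad = False` on the frozen modules. The dependency-free toy below illustrates the same idea: a hypothetical one-parameter "encoder" (`encoder_w`) stays fixed while a one-parameter "prompt predictor" (`ppn_b`) is fitted by gradient descent. The model, parameter names, and data are all made up for illustration.

```python
from typing import Dict, List, Set, Tuple


def train(params: Dict[str, float], trainable: Set[str], data: List[Tuple[float, float]],
          lr: float = 0.1, steps: int = 200) -> Dict[str, float]:
    """Fit y ~ encoder_w * x + ppn_b by squared error, updating only the `trainable` names."""
    params = dict(params)  # copy so the caller's "pretrained" weights are untouched
    for _ in range(steps):
        grads = {"encoder_w": 0.0, "ppn_b": 0.0}
        for x, y in data:
            err = params["encoder_w"] * x + params["ppn_b"] - y
            grads["encoder_w"] += 2 * err * x / len(data)
            grads["ppn_b"] += 2 * err / len(data)
        for name in trainable:  # frozen parameters never receive an update
            params[name] -= lr * grads[name]
    return params


pretrained = {"encoder_w": 2.0, "ppn_b": 0.0}  # hypothetical pretrained weight, fresh predictor
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]  # y = 2x + 1: the frozen slope is already right
tuned = train(pretrained, trainable={"ppn_b"}, data=data)
print(tuned)  # encoder_w stays 2.0; ppn_b converges toward 1.0
```

Because only the small trainable set changes, the pretrained component keeps whatever it learned before, which is the motivation for freezing SAM's modules.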

Key Features and Benefits of Sam2Rad

- No Manual Prompts: Fully automated workflow saves time and reduces dependency on experts.

- Few-Shot Learning: Achieves robust performance with as few as 10 labeled images.

- Multi-Class Segmentation: Handles complex tasks like isolating bones, tendons, and pathology.

- Compatibility: Works with any SAM variant (e.g., SAM2-Tiny, SAM2-Large).

Performance and Results: Outperforming SAM Variants

Sam2Rad was tested on three musculoskeletal ultrasound datasets:

| Dataset | SAM2 (Dice/IoU) | Sam2Rad (Dice/IoU) | Improvement (Dice/IoU, percentage points) |
|---|---|---|---|
| Hip | 75.1%/61.2% | 85.5%/75.2% | +10.4/+14.0 |
| Wrist | 63.5%/47.9% | 85.1%/75.2% | +21.6/+27.3 |
| Shoulder | 25.3%/15.1% | 77.6%/65.3% | +52.3/+50.2 |

Notably, with the SAM2-Large backbone, Sam2Rad reached a 76.5% Dice score on the shoulder dataset, an improvement of 51.3 percentage points over the baseline.
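The results are reported as Dice and IoU (Jaccard) overlap, and the improvements are absolute differences in percentage points (for the hip, 85.5 - 75.1 = 10.4). Both metrics are standard; the sketch below computes them for binary masks stored as nested lists. The helper names are illustrative, and how the paper averages scores (per image or per dataset) is not specified here.

```python
from typing import List, Tuple

Mask = List[List[int]]


def overlap_counts(pred: Mask, target: Mask) -> Tuple[int, int, int]:
    """Return (|pred & target|, |pred|, |target|) for binary masks of equal shape."""
    pairs = [(bool(p), bool(t)) for pr, tr in zip(pred, target) for p, t in zip(pr, tr)]
    inter = sum(p and t for p, t in pairs)
    return inter, sum(p for p, _ in pairs), sum(t for _, t in pairs)


def dice(pred: Mask, target: Mask, eps: float = 1e-7) -> float:
    """Dice = 2|A & B| / (|A| + |B|); eps makes two empty masks score 1."""
    inter, n_pred, n_target = overlap_counts(pred, target)
    return (2 * inter + eps) / (n_pred + n_target + eps)


def iou(pred: Mask, target: Mask, eps: float = 1e-7) -> float:
    """IoU = |A & B| / |A | B|, where |A | B| = |A| + |B| - |A & B|."""
    inter, n_pred, n_target = overlap_counts(pred, target)
    return (inter + eps) / (n_pred + n_target - inter + eps)


def improvement_pp(baseline_pct: float, new_pct: float) -> float:
    """Absolute improvement in percentage points, rounded like the table."""
    return round(new_pct - baseline_pct, 1)


pred = [[0, 1, 1], [0, 1, 1], [0, 0, 0]]
target = [[0, 1, 1], [0, 1, 0], [0, 1, 0]]
print(round(dice(pred, target), 3), round(iou(pred, target), 3))  # 0.75 0.6
print(improvement_pp(75.1, 85.5))  # 10.4 (hip Dice)
```

For a single image the two metrics are linked by Dice = 2 * IoU / (1 + IoU) (here 2 * 0.6 / 1.6 = 0.75), so Dice is always at least as large as IoU. Averages over many images need not satisfy the identity exactly.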

Applications in Clinical Practice

The implications of Sam2Rad extend far beyond research labs. In clinical settings, this technology can streamline numerous diagnostic and treatment-planning processes:

- Ultrasound Imaging: Accurate segmentation of bony structures and soft tissues enables faster identification of fractures, joint abnormalities, and other pathologies.

- Data Labeling: Sam2Rad’s autonomous mode accelerates the creation of annotated datasets, reducing the burden on radiologists.

- Interactive Refinement: For cases requiring higher precision, Sam2Rad supports semi-autonomous workflows, allowing users to iteratively refine results through additional prompts.
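In interactive-segmentation experiments, a user's corrective clicks are often simulated from the current error: a positive click where the model missed foreground, a negative click where it over-segmented. The sketch below uses one simple heuristic: correct the larger error type first and click the error pixel nearest that error's centroid. Point labels follow SAM's convention (1 = foreground, 0 = background). The `next_click` function is hypothetical and not necessarily how Sam2Rad's semi-autonomous mode or its evaluation chooses clicks.

```python
from typing import List, Optional, Tuple

Mask = List[List[int]]
Click = Tuple[Tuple[int, int], int]  # ((x, y), label); label 1 = foreground, 0 = background


def next_click(pred: Mask, target: Mask) -> Optional[Click]:
    """Suggest one corrective point prompt from where the prediction and the target disagree."""
    false_neg, false_pos = [], []
    for y, (pr, tr) in enumerate(zip(pred, target)):
        for x, (p, t) in enumerate(zip(pr, tr)):
            if t and not p:
                false_neg.append((x, y))  # missed foreground -> positive click
            elif p and not t:
                false_pos.append((x, y))  # spurious foreground -> negative click
    if not false_neg and not false_pos:
        return None  # prediction already matches: no click needed
    # Correct the larger error type first (ties go to the missed foreground).
    errors, label = (false_neg, 1) if len(false_neg) >= len(false_pos) else (false_pos, 0)
    cx = sum(x for x, _ in errors) / len(errors)
    cy = sum(y for _, y in errors) / len(errors)
    # Pick the error pixel nearest the centroid so the click lands on an actual error.
    point = min(errors, key=lambda pt: (pt[0] - cx) ** 2 + (pt[1] - cy) ** 2)
    return point, label


pred = [[0, 0, 0, 0], [0, 1, 1, 0], [0, 0, 0, 0]]
target = [[0, 0, 0, 0], [0, 1, 1, 1], [0, 1, 1, 1]]
print(next_click(pred, target))  # ((2, 2), 1): a positive click inside the missed region
```

In a refinement loop, each new click would be appended to the existing prompts and passed back to the mask decoder until the result is acceptable.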

Ablation Studies and Performance Metrics

To validate Sam2Rad’s effectiveness, the authors conducted extensive experiments comparing various prompt configurations and hierarchical feature integrations. Key findings include:

- Combined Prompts Outperform Individual Configurations: Using bounding boxes alongside high-dimensional embeddings yielded Dice scores above 82%, demonstrating the value of complementary contextual information.

- Hierarchical Features Show Marginal Gains: Incorporating multiscale features improved performance only slightly; combining different prompt types had the larger effect.

- Comparison to Non-SAM Models: Sam2Rad achieves results comparable or superior to specialized models like UNet and UNet++, while offering greater flexibility and adaptability.
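The hierarchical-feature ablation concerns combining encoder features at several resolutions. A common generic way to do this, in the spirit of feature pyramid networks, is to upsample the coarser (more semantic) map and add it to the finer (more spatially detailed) one, level by level. The toy below does this for single-channel 2D maps with nearest-neighbour upsampling. It is an assumed, generic fusion scheme; Sam2Rad's actual fusion (channel projections, learned weights, which levels are used) may differ.

```python
from typing import List

FeatureMap = List[List[float]]


def upsample_nearest(fmap: FeatureMap, factor: int) -> FeatureMap:
    """Repeat each value `factor` times along both axes."""
    return [[v for v in row for _ in range(factor)] for row in fmap for _ in range(factor)]


def fuse(fine: FeatureMap, coarse: FeatureMap) -> FeatureMap:
    """Top-down fusion: upsample the coarse map to the fine map's size and add elementwise."""
    factor = len(fine) // len(coarse)
    up = upsample_nearest(coarse, factor)
    if len(up) != len(fine) or len(up[0]) != len(fine[0]):
        raise ValueError("feature maps must differ in size by an integer factor")
    return [[a + b for a, b in zip(fr, ur)] for fr, ur in zip(fine, up)]


def fuse_pyramid(levels: List[FeatureMap]) -> FeatureMap:
    """Fuse maps ordered fine -> coarse, starting from the coarsest level."""
    out = levels[-1]
    for fmap in reversed(levels[:-1]):
        out = fuse(fmap, out)
    return out


fine = [[1.0] * 4 for _ in range(4)]  # 4x4, low-level spatial detail
mid = [[0.0, 1.0], [2.0, 3.0]]        # 2x2
coarse = [[10.0]]                     # 1x1, high-level semantics
for row in fuse_pyramid([fine, mid, coarse]):
    print(row)
```

Each output pixel now carries information from every level, which is the intuition behind multiscale features; the ablation suggests that, for Sam2Rad, this adds less than combining prompt types does.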

Future Directions and Broader Impact

While Sam2Rad currently focuses on 2D ultrasound images, future iterations aim to expand its applicability to other modalities, including CT, MRI, and X-rays. Potential advancements include:

- 3D Convolutional Layers: To handle volumetric data, enabling more comprehensive analyses of complex anatomical structures.

- Real-Time Applications: Distilling the model into smaller, task-specific versions could facilitate real-time use in clinical environments.

- Integration with Existing Workflows: Seamless compatibility with SAM’s architecture ensures easy adoption across diverse clinical settings.

Why Sam2Rad Matters for Healthcare Professionals

For radiologists, clinicians, and AI developers, Sam2Rad represents a paradigm shift in medical image segmentation. By automating what was once a labor-intensive process, it empowers healthcare providers to focus on patient care rather than tedious manual tasks. Moreover, its ability to operate in low-data regimes makes it accessible even for under-resourced institutions.

Call to Action: Join the Revolution in Medical Imaging

Are you ready to harness the power of Sam2Rad for your projects? The code is available on GitHub (https://github.com/aswahd/SamRadiology). Explore the possibilities, contribute to ongoing research, and help shape the future of AI-driven radiology.

If you found this article insightful, share it with colleagues who might benefit from understanding the transformative potential of Sam2Rad. Together, let’s accelerate innovation in medical imaging and improve outcomes for patients worldwide.

Conclusion: A New Era for Medical Imaging

Sam2Rad is more than just a technical innovation—it’s a step toward making AI in healthcare more accessible and effective. By automating medical image segmentation, it saves time, boosts accuracy, and empowers clinicians to focus on what matters most: patient care. Whether it’s detecting a wrist fracture or analyzing a shoulder ultrasound, Sam2Rad delivers results that could redefine how we approach diagnostics.

Want to dive deeper into this cutting-edge technology? Check out the full research paper in Computers in Biology and Medicine (2025) for a detailed look at Sam2Rad’s methodology and results. For more insights on how AI is transforming healthcare, subscribe to our newsletter or explore related articles on ultrasound image analysis and segmentation models. Join the conversation—how do you see AI shaping the future of medicine?
